Abstract

In recent years, there has been a great deal of concern about the proliferation of false and misleading news on social media1,2,3,4. Academics and practitioners alike have asked why people share such misinformation, and sought solutions to reduce the sharing of misinformation5,6,7. Here, we attempt to address both of these questions. First, we find that the veracity of headlines has little effect on sharing intentions, despite having a large effect on judgments of accuracy. This dissociation suggests that sharing does not necessarily indicate belief. Nonetheless, most participants say it is important to share only accurate news. To shed light on this apparent contradiction, we carried out four survey experiments and a field experiment on Twitter; the results show that subtly shifting attention to accuracy increases the quality of news that people subsequently share. Together with additional computational analyses, these findings indicate that people often share misinformation because their attention is focused on factors other than accuracy—and therefore they fail to implement a strongly held preference for accurate sharing. Our results challenge the popular claim that people value partisanship over accuracy8,9, and provide evidence for scalable attention-based interventions that social media platforms could easily implement to counter misinformation online.

Similar content being viewed by others

Main

The sharing of misinformation on social media—including, but not limited to, blatantly false political ‘fake news’ and misleading hyperpartisan content—has become a major focus of public debate and academic study in recent years1,4. Although misinformation is nothing new, the topic gained prominence in 2016 after the US Presidential Election and the UK’s Brexit referendum, during which entirely fabricated stories (presented as legitimate news) received wide distribution via social media—a problem that has gained even more attention during the COVID-19 pandemic2,7 and the Capitol Hill riot following the 2020 US Presidential Election10.

Misinformation is problematic because it leads to inaccurate beliefs and can exacerbate partisan disagreement over even basic facts. Merely reading false news posts—including political posts that are extremely implausible and inconsistent with one’s political ideology— makes them subsequently seem more true11.

In addition to being concerning, the widespread sharing of misinformation on social media is also surprising, given the outlandishness of much of this content. Here we test three competing theories of why people share misinformation, based respectively on (i) confusion about what is (in)accurate, (ii) preferences for factors such as partisanship over accuracy, and (iii) inattention to accuracy.

Disconnect between sharing and accuracy

We begin with the confusion-based account, in which people share misinformation because they mistakenly believe that it is accurate (for example, owing to media or digital illiteracy5,12,13,14,15 or politically motivated reasoning8,9,16,17). To gain initial insight into whether mistaken beliefs are sufficient to explain the sharing of misinformation, study 1 tests for a dissociation between what people deem to be accurate and what they would share on social media. We recruited n = 1,015 American individuals using Amazon Mechanical Turk (MTurk)18, and presented them with the headline, lede sentence, and image for 36 actual news stories taken from social media. Half of the headlines were entirely false and half were true; half of the headlines were chosen (via pretest19,20) to be favourable to Democrats and the other half to be favourable to Republicans. Participants were randomly assigned to then either judge the veracity of each headline (accuracy condition) or indicate whether they would consider sharing each headline online (sharing condition) (for details, see Methods). Unless noted otherwise, all P values are generated by linear regression with robust standard errors clustered on participant and headline.

In the accuracy condition (Fig. 1a), true headlines were rated as accurate significantly more often than false headlines (55.9 percentage point difference, F(1, 36,172) = 375.05, P < 0.0001). Although politically concordant headlines were also rated as accurate significantly more often than politically discordant headlines (10.1 percentage point difference, F(1, 36,172) = 26.45, P < 0.0001), this difference based on partisan alignment was significantly smaller than the 55.9 percentage point veracity-driven difference between true and false headlines (F(1, 36,172) = 137.26, P < 0.0001). Turning to the sharing condition (Fig. 1b), we see the opposite pattern. Whether the headline was politically concordant or discordant had a significantly larger effect on sharing intentions (19.3 percentage points) than whether the headline was true or false (5.9 percentage points; F(1, 36,172) = 19.73, P < 0.0001). Accordingly, the effect of headline veracity was significantly larger in the accuracy condition than in the sharing condition (F(1, 36,172) = 260.68, P < 0.0001), whereas the effect of concordance was significantly larger in the sharing condition than in the accuracy condition (F(1, 36,172) = 17.24, P < 0.0001; for the full regression table and robustness checks, see Supplementary Information section 2). Notably, the pattern of sharing intentions that we observe here matches the pattern of actual sharing observed in a large-scale analysis of Twitter users, in which partisan alignment was found to be a much stronger predictor of sharing than veracity21.

In study 1, n = 1,002 American individuals from Amazon MTurk were presented with a set of 36 headlines and asked to indicate whether they thought the headlines were accurate or whether they would consider sharing them on social media. a, The fraction of headlines rated as accurate in the ‘accuracy’ condition, by the veracity of the headline and political alignment between the headline and the participant. Participants were significantly more likely to rate true headlines as accurate compared to false headlines (55.9 percentage point difference, F(1, 36,172) = 375.05, P < 0.0001), whereas the partisan alignment of the headlines had a significantly smaller effect (10.1 percentage point difference, F(1, 36,172) = 26.45, P < 0.0001; interaction, F(1, 36,172) = 137.26, P < 0.0001). b, The fraction of headlines that participants said they would consider sharing in the ‘sharing’ condition, by the veracity of the headline and political alignment between the headline and the participant. In contrast to the accuracy condition, the effect of headline veracity was significantly smaller in the sharing condition, F(1, 36,172) = 260.68, P < 0.0001, whereas the effect of political concordance was significantly larger, F(1, 36,172) = 17.24, P < 0.0001. Error bars indicate 95% confidence intervals based on robust standard errors clustered on participant and headline, and all P values were generated using linear regression with robust standard errors clustered on participant and headline. c, Participants nonetheless overwhelmingly said that they thought that accuracy was more important on average than partisanship (and all other content dimensions that we asked about) when making decisions about what to share on social media (data shown from study 2; for study 1 see Extended Data Fig. 1).

To illustrate the disconnect between accuracy judgments and sharing intentions, consider, for example, the following headline: ‘Over 500 ‘Migrant Caravaners’ Arrested With Suicide Vests’. This was rated as accurate by 15.7% of Republicans in our study, but 51.1% of Republicans said they would consider sharing it. Thus, the results from study 1 suggest that the confusion-based account cannot fully explain the sharing of misinformation: our participants were more than twice as likely to consider sharing false but politically concordant headlines (37.4%) as they were to rate such headlines as accurate (18.2%; F(1, 36,172) = 19.73, P < 0.0001).

One possible explanation for this dissociation between accuracy judgments and sharing intentions is offered by the preference-based account of misinformation sharing. By this account, people care about accuracy much less than other factors (such as partisanship), and therefore knowingly share misinformation. The fact that participants in study 1 were willing to share ideologically consistent but false headlines could thus be reasonably construed as revealing their preference for weighting non-accuracy dimensions (such as ideology) over accuracy. Yet when asked at the end of the study whether it is important to share only content that is accurate on social media, the modal response was “extremely important” (Extended Data Fig. 1). A similar pattern was observed in a more nationally representative sample of n = 401 American individuals from Lucid22 in study 2, who rated accuracy as substantially more important for social media sharing than any of the other dimensions that we asked about (paired t-tests, P < 0.001 for all comparisons) (Fig. 1c; for design details, see Methods).

Why, then, were the participants in study 1—along with millions of other American people in recent years—willing to share misinformation? In answer, we advance the inattention-based account, in which (i) people do care more about accuracy than other content dimensions, but accuracy nonetheless often has little effect on sharing, because (ii) the social media context focuses their attention on other factors such as the desire to attract and please followers/friends or to signal one’s group membership23,24,25. In the language of utility theory, we argue that an ‘attentional spotlight’ is shone upon certain terms in the decider’s utility function, such that only those terms are weighed when making a decision (for a mathematical formalization of this limited-attention utility model, see Supplementary Information section 3).

Priming accuracy improves sharing

We differentiate between these theories by subtly inducing people to think about accuracy, which the preference-based account predicts should have no effect whereas the inattention-based account predicts should increase the accuracy of content that is shared (see Supplementary Information section 3.2). We first test these competing predictions by performing a series of survey experiments with similar designs. In the control condition of each experiment, participants were shown 24 news headlines (balanced on veracity and partisanship, as in study 1) and asked how likely they would be to share each headline on Facebook. In the treatment condition, participants were asked to rate the accuracy of a single non-partisan news headline at the outset of the study (ostensibly as part of a pretest for stimuli for another study). They then went on to complete the same sharing intentions task as in the control condition, but with the concept of accuracy more likely to be salient in their minds. For details of the experimental design, see Methods.

In two experiments using American individuals recruited from MTurk (study 3, n = 727; study 4, n = 780), we find that the treatment condition significantly increased sharing discernment (interaction between headline veracity and treatment: study 3, b = 0.053, 95% confidence interval [0.032, 0.074], F(1, 17,413) = 24.21, P < 0.0001; study 4, b = 0.065, 95% confidence interval [0.036, 0.094], F(1, 18,673) = 19.53, P < 0.0001) (Fig. 2a, b). Specifically, participants in the treatment group were significantly less likely to consider sharing false headlines compared to those in the control group (study 3, b = −0.055, 95% confidence interval [−0.083, −0.026], F(1, 17,413) = 14.08, P = 0.0002; study 4, b = −0.058, 95% confidence interval [−0.091, −0.025], F(1, 18,673) = 11.99, P = 0.0005), but equally likely to consider sharing true headlines (study 3, b = −0.002, 95% confidence interval [−0.031, 0.028], F(1, 17,413) = 0.01, P = 0.92; study 4, b = 0.007, 95% confidence interval [−0.020, 0.033], F(1, 18,673) = 0.23, P = 0.63). As a result, sharing discernment (the difference in sharing intentions for true versus false headlines) was 2.0 times larger in the treatment relative to the control group in study 3, and 2.4 times larger in study 4. Furthermore, there was no evidence of a backfire effect, as the treatment effect was actually significantly larger for politically concordant headlines than for politically discordant headlines (b = 0.022, 95% confidence interval [0.012, 0.033], F(1, 36,078) = 18.09, P < 0.0001), and significantly increased discernment for both Democrats (b = 0.069, 95% confidence interval [0.048, 0.091], F(1, 24,636) = 40.38, P < 0.0001) and Republicans (b = 0.035, 95% confidence interval [0.007, 0.063], F(1, 11,394) = 5.93, P = 0.015). See Supplementary Information section 2 for the full regression table.

a–c, Participants in study 3 (a; n = 727 American individuals from MTurk), study 4 (b; n = 780 American individuals from MTurk) and study 5 (c; n = 1,268 American individuals from Lucid, quota-matched to the national distribution on age, gender, ethnicity and geographical region) indicated how likely they would be to consider sharing a series of actual headlines from social media. Participants in the ‘treatment’ condition rated the accuracy of a single non-political headline at the outset of the study, thus increasing the likelihood that they would think about accuracy when indicating sharing intentions relative to the ‘control’ condition. In study 5, we added an ‘active control’ (in which participants rated the humorousness of a single headline at the outset of the study) and an ‘importance treatment’ (in which participants were asked at the study outset how important they thought it was to share only accurate content). For interpretability, shown here is the fraction of ‘likely’ responses (responses above the midpoint of the six-point Likert scale) by condition and headline veracity; the full distributions of responses are shown in Extended Data Figs. 2, 3. As per our preregistered analysis plans, these analyses focus only on participants who indicated that they sometimes consider sharing political content on social media; for analysis including all participants, see Supplementary Information section 2. Error bars indicate 95% confidence intervals based on robust standard errors clustered on participant and headline.

Notably, there was no significant difference between conditions in responses to a post-experimental question about the importance of sharing only accurate content (t-test: t(1498) = 0.42, P = 0.68, 95% confidence interval [−0.075, 0.115] points on a 1–5 scale; Bayesian independent samples t-test with Cauchy prior distribution with interquartile range of 0.707: BF10 = 0.063, providing strong evidence for the null), or regarding participants’ perceptions of the importance that their friends place on sharing only accurate content (t-test: t(768) = −0.57, P = 0.57, 95% confidence interval [−0.205, 0.113] points on a 1–5 scale; Bayesian independent samples t-test with Cauchy prior distribution with interquartile range of 0.707: BF10 = 0.095, providing strong evidence for the null).

Our next survey experiment (study 5, n = 1,268) tested whether the previous results generalize to a more representative sample by recruiting participants from Lucid22 that were quota-sampled to match the distribution of American residents on age, gender, ethnicity and geographical region. Study 5 also included an active control condition in which participants were asked to rate the humorousness (rather than accuracy) of a single non-partisan news headline at the outset of the study, and an importance treatment condition that tested another approach for making accuracy salient by having participants begin the study by indicating the importance they place on sharing only accurate content (instead of rating the accuracy of a neutral headline). The results (Fig. 2c) successfully replicated studies 3 and 4. As expected, there were no significant differences in sharing intentions between the control and the active control conditions (interaction between veracity and condition, b = 0.015, 95% confidence interval [−0.043, 0.059], F(1, 6,772) = 0.04, P = 0.84); and both treatments significantly increased sharing discernment relative to the controls (interaction between veracity and condition: treatment, b = 0.054, 95% confidence interval [0.023, 0.085], F = 11.98, P = 0.0005; importance treatment, b = 0.038, 95% confidence interval [0.014, 0.061], F = 9.76, P = 0.0018). See Supplementary Information section 2 for the full regression table.

Attending to accuracy as the mechanism

Next, we provide evidence that shifting attention to accuracy is the mechanism behind this effect by showing that the treatment condition leads to the largest reduction in the sharing of headlines that participants are likely to deem to be the most inaccurate (and vice versa for the most plainly accurate headlines). A headline-level analysis finds a positive correlation between the effect of the treatment on sharing and the headline’s perceived accuracy (as measured in pre-tests, see Supplementary Information section 1) (study 3, r(22) = 0.71, P = 0.0001; study 4, r(22) = 0.67, P = 0.0003; study 5, r(18) = 0.61, P = 0.005) (Fig. 3a–c). That is, the most obviously inaccurate headlines are the ones that the accuracy salience treatment most effectively discourages people from sharing.

a–c, There is a significant positive correlation across headlines between perceived accuracy (on the basis of pre-tests) and the effect of the treatment on sharing in study 3 (a, r(22) = 0.71, P = 0.0001), study 4 (b, r(22) = 0.67, P = 0.0003), and study 5 (c, r(18) = 0.61, P = 0.005). The accuracy reminder caused a larger decrease in sharing intentions for items that were deemed to be more unlikely. This observation supports our argument that the treatment intervention operated via focusing attention on accuracy, and that many people do not want to share content that they think is inaccurate. As shown in Extended Data Fig. 4, in study 5 a similar pattern was found for the ‘important treatment’, and no such effect existed for the ‘active control’. d, In study 6, participants rated the accuracy of each headline (a full-attention treatment) before making a judgment about sharing. This allows us to distinguish between false items that: (i) participants share despite believing to be inaccurate (that is, a preference-based rejection of truth); (ii) participants share and also believe to be accurate (that is, confusion-based); and (iii) participants no longer shared once they considered accuracy (that is, inattention-based). Results indicate that, among the false headlines that are shared in the control group, most are shared owing to inattention (51.2%), fewer are shared because of confusion (33.1%), and a small minority are shared because of a preference to share false content (15.8%). Bootstrapping simulations (10,000 repetitions) showed that inattention explains significantly more sharing than purposeful sharing (b = 0.354 [0.178, 0.502], P = 0.0004); that confusion explains significantly more sharing than purposeful sharing (b = 0.173 [0.098, 0.256], P < 0.0001); and that although inattention explained directionally more misinformation sharing than confusion, this difference was not statistically significant (b = 0.181 [−0.036, 0.365], P = 0.098).

Furthermore, fitting our formal limited-attention utility model to the experimental data provides quantitative evidence against the preference-based account (participants value accuracy as much as or more than partisanship) and for the inattention-based account (participants often do not consider accuracy) (Extended Data Table 1, Supplementary Information sections 3.5, 3.6).

In study 6, we carried out a final survey experiment (n = 710 American individuals from MTurk) that quantifies the relative contribution of the confusion-based, preference-based and inattention-based accounts to the willingness to share false headlines on social media. To do so, we compare the control condition to a ‘full attention’ treatment, in which participants are asked to assess the accuracy of each headline immediately before deciding whether they would share it (for details, see Methods). As illustrated in Fig. 3d, the results show that, of the sharing intentions for false headlines, the inattention-based account explains 51.2% (95% confidence interval [38.4%, 62.0%]) of sharing, the confusion-based account explains 33.1% (95% confidence interval [25.1%, 42.4%]) of sharing, and the preference-based account explains 15.8% (95% confidence interval [11.1%, 21.5%]) of sharing. Thus, inattention does not merely operate on the margin, but instead has a central role in the sharing of misinformation in our experimental paradigm. Furthermore, the preference-based account’s low level of explanatory power relative to the inattention-based account in study 6 is consistent with the model fitting results in Extended Data Table 1 and Supplementary Information section 3.6 described above—thus providing convergent evidence against the preference-based account being a central driver of misinformation sharing.

Deploying the intervention on Twitter

Finally, to test whether our findings generalize to natural social media use settings (rather than laboratory experiments), actual (rather than hypothetical) sharing decisions, and misinformation more broadly (rather than just blatant ‘fake news’), in study 7 we conducted a digital field experiment on social media26. To do so, we selected n = 5,379 Twitter users who had previously shared links to two particularly well-known right-leaning sites that professional fact-checkers have rated as highly untrustworthy27: www.Breitbart.com and www.Infowars.com. We then sent these users private messages asking them to rate the accuracy of a single non-political headline (Fig. 4a). We used a stepped-wedge design to observe the causal effect of the message on the quality of the news content (on the basis of domain-level ratings of professional fact-checkers27) that the users shared in the 24 hours after receiving our intervention message. For details of the experimental design, see Methods.

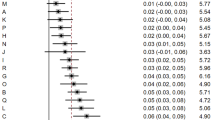

In Study 7, we conducted an experiment on the Twitter platform involving n = 5,379 users who had recently shared links to websites that regularly produce misleading and hyperpartisan content. We randomized the date on which users were sent an unsolicited message asking them to rate the accuracy of a single non-political headline. We then compared the quality of the news sites shared in the 24 h after receiving the message to the sites shared by participants who had not yet received the message. a, The private message sent to the users is shown here. We did not expect most users to respond to the message, or even read it in its entirety. Thus, we designed it such that reading only the top line should be sufficient to shift attention to the concept of accuracy. b, To test the robustness of our results, we conducted 192 analyses that differed in their dependent variable, inclusion criteria and model specifications. Shown here is the distribution of P values resulting from each of these analyses. More than 80% of approaches yield P < 0.05. c, A domain-level analysis provides a more detailed picture of the effect of the intervention. The x axis indicates the trust score given to each outlet by professional fact-checkers27. The y axis indicates the fraction of rated links to each outlet in the 24 h after the intervention minus the fraction of links to each outlet among not-yet-treated users. The size of each dot is proportional to the number of pre-treatment posts with links to that outlet. Domains with more than 500 pre-treatment posts are labelled. NYPost, New York Post; NYTimes, New York Times; WashPo, Washington Post; WSJ, Wall Street Journal.

Examining baseline (pre-treatment) sharing behaviour shows that we were successful in identifying users with relatively low-quality news-sharing habits. The average quality score of news sources from pre-treatment posts was 0.34. (For comparison, the fact-checker-based quality score was 0.02 for Infowars; 0.16 for Breitbart; 0.39 for Fox News, and 0.93 for the New York Times.) Moreover, 46.6% of shared news sites were sites that publish false or misleading content (0.9% fake news sites, 45.7% hyperpartisan sites).

Consistent with our survey experiments, we find that the single accuracy message made users more discerning in their subsequent sharing decisions (using Fisherian randomization inference28 to calculate exact P values, PFRI, based on the distribution of the t statistic under the null hypothesis). Relative to baseline, the accuracy message increased the average quality of the news sources shared (b = 0.007, t(5375) = 2.91, 95% null acceptance region of t [−0.44, 2.59], PFRI = 0.009) and the total quality of shared sources summed over all posts (b = 0.014, t(5375) = 3.12, 95% null acceptance region of t [−0.08, 2.90], PFRI = 0.011). This translates into increases of 4.8% and 9.0%, respectively, when estimating the treatment effect for user-days on which tweets would occur in treatment (that is, excluding user-days in the ‘never-taker’ principal stratum29,30, because the treatment cannot have an effect when no tweets would occur in either treatment or control); including user-days with no tweets yields an increase of 2.1% and 4.0% in average and total quality, respectively. Furthermore, the level of sharing discernment (that is, the difference in number of mainstream versus fake or hyperpartisan links shared per user-day; interaction between post-treatment dummy and link type) was 2.8 times higher after receiving the accuracy message (b = 0.059, t(5371) = 3.27, 95% null acceptance region of t [−0.31, 2.67], PFRI = 0.003).

To provide further support for the inattention-based account, we contrast lower-engagement sharing (in which the user simply re-shares content posted by another user: that is, retweets without comment) with higher-engagement sharing (in which the poster invests some time and effort to craft their own post or add a comment when sharing another post). Lower-engagement sharing, which accounts for 72.4% of our dataset, presumably involves less attention than higher-engagement sharing—therefore the inattention-based account of misinformation sharing predicts that our manipulation should primarily affect lower-engagement sharing. Consistent with this prediction, we observe a significant positive interaction (b = 0.008, t(5371) = 2.78, 95% null acceptance region of t [−0.80, 2.24], PFRI = 0.004), such that the treatment increases average quality of lower-engagement sharing but not higher-engagement sharing. Furthermore, we found no significant treatment effect on the number of posts without links to any of the news sites used in our main analyses (b = 0.266, t(5375) = 0.50, 95% null acceptance region of t [−1.11, 1.64], PFRI = 0.505).

Notably, the significant effects that we observed are not unique to one particular set of analytic choices. Figure 4b shows the distribution of P values observed in 192 different analyses assessing the overall treatment effect on average quality, summed quality, or discernment under a variety of analytic choices. Of these analyses, 82.3% indicate a significant positive treatment effect (and none of 32 analyses of posts without links to a news site—in which we would not expect a treatment effect—find a significant difference). For details, see Extended Data Table 4 and Supplementary Information section 5.

Finally, we examine the data at the level of the domain (Fig. 4c). We see that the treatment effect is driven by increasing the fraction of rated-site posts with links to mainstream new sites with strong editorial standards such as the New York Times, and decreasing the fraction of rated-site posts that linked to relatively untrustworthy hyperpartisan sites such as Breitbart. Indeed, a domain-level pairwise correlation between fact-checker rating and change in sharing due to the intervention shows a very strong positive relationship (domains weighted by number of pre-treatment posts; r(44) = 0.74, P < 0.0001), replicating the pattern observed in the survey experiments (Fig. 3a–c). In summary, our accuracy message successfully induced Twitter users who regularly shared misinformation to increase the average quality of the news that they shared.

In Supplementary Information section 6, we use computational modelling to connect our empirical observations about individual-level sharing decisions in studies 3–7 to the network-level dynamics of misinformation spread. Across a variety of network structures, we observe that network dynamics can substantially amplify the magnitude of treatment effects on sharing (Extended Data Fig. 6). Improving the quality of the content shared by one user improves the content that their followers see, and therefore improves the content that their followers share. This in turn improves what the followers’ followers see and share, and so on. Thus, the cumulative effects of such an intervention on how misinformation spreads across networks may be substantially larger than what is observed when only examining the treated individuals—particularly given that, in study 7, we find that the treatment is as effective, if not more so, for users with larger numbers of followers (see Supplementary Information section 5); and that our treatment effect size estimates in study 7 are conservative because we do not know when (or if) users actually saw our intervention message.

Conclusion

Together, these studies suggest that when deciding what to share on social media, people are often distracted from considering the accuracy of the content. Therefore, shifting attention to the concept of accuracy can cause people to improve the quality of the news that they share. Furthermore, we found a dissociation between accuracy judgments and sharing intentions that suggests that people may share news that they do not necessarily have a firm belief in. As a consequence, people’s beliefs may not be as partisan as their social media feeds seem to indicate. Future work is needed to more precisely identify people’s state of belief when not reflecting on accuracy. Is it that people hold no particular belief one way or the other, or that they tend to assume content is true by default31?

A substantial limitation of our studies is that they are focused on the sharing of political news among American individuals. In a recent set of follow-up survey experiments, our findings of a disconnect between accuracy and sharing judgments in study 1 and our treatment increasing sharing discernment in studies 3, 4 and 5 were successfully replicated using headlines about COVID-19 with quota-matched American samples7. Future work should examine applications to other content domains, including organized misinformation campaigns from political elites (such as about climate change32 or fraud in the 2020 US Presidential Election10), and explore cross-cultural generalizability. Extending the Twitter field experiment design used in study 7 is also a promising direction for future work, including using a more continuous shock-based model of how (and when) the treatment affects individuals rather than the conservative intent-to-treat approach used here, examining more than 24 hours after the intervention, generalizing beyond users who follow-back experimenter accounts, testing an active control, and using article-level quality rather than domain-level quality scores.

Our results suggest that the current design of social media platforms—in which users scroll quickly through a mixture of serious news and emotionally engaging content, and receive instantaneous quantified social feedback on their sharing—may discourage people from reflecting on accuracy. But this need not be the case. Our treatment translates easily into interventions that social media platforms could use to increase users’ focus on accuracy. For example, platforms could periodically ask users to rate the accuracy of randomly selected headlines, thus reminding them about accuracy in a subtle way that should avoid reactance33 (and simultaneously generating useful crowd ratings that can help to identify misinformation27,34). Such an approach could potentially increase the quality of news circulating online without relying on a centralized institution to certify truth and censor falsehood.

Methods

Preregistrations for all studies are available at https://osf.io/p6u8k/. In all survey experiments, we do not exclude participants for inattentiveness or straightlining to avoid selection effects that can undermine causal inference. The researchers were not blinded to the hypotheses when carrying out the analyses. All experiments were randomized except for study 2, which was not randomized. No statistical methods were used to predetermine sample size.

Study 1

In study 1, participants were presented with a pretested set of false and true headlines (in ‘Facebook format’) and were asked to indicate either whether they thought they were accurate or not, or whether they would consider sharing them on social media or not. Our prediction was that the difference in ‘yes’ responses between false and true news (that is, discernment) will be greater when individuals are asked about accuracy than when they are asked about sharing, whereas the difference between politically discordant and concordant news will be greater when they are asked about sharing than when they are asked about accuracy.

Participants

We preregistered a target sample of 1,000 complete responses, using participants recruited from Amazon’s MTurk but noted that we would retain individuals who completed the study above the 1,000-participant quota. In total, 1,825 participants began the survey. However, an initial (pre-treatment) screener only allowed American participants who indicated having a Facebook or Twitter account (when shown a list of different social media platforms) and indicated that they would consider sharing political content (when shown a list of different content types) to continue and complete the survey. The purpose of these screening criteria was to focus our investigation on the relevant subpopulation—those who share political news. The accuracy judgments of people who never share political news on social media are not relevant here, given our interest in the sharing of political misinformation. Of the participants who entered the survey, 153 indicated that they had neither a Facebook nor a Twitter account, and 651 indicated that they did have either a Facebook or Twitter account but would not consider sharing political content. A further 16 participants passed the screener but did not finish the survey and thus were removed from the dataset. The full sample (mean age = 36.7) included 475 males, 516 females, and 14 participants who selected another gender option. This study was run on 13–14 August 2019.

Materials

We presented participants with 18 false and 18 true news headlines in a random order for each participant. The false news headlines were originally selected from a third-party fact-checking website, www.Snopes.com, and were therefore verified as being fabricated and untrue. The true news headlines were all accurate and selected from mainstream news outlets to be roughly contemporary with the false news headlines. Moreover, the headlines were selected to be either pro-Democratic or pro-Republican (and equally so). This was done using a pretest, which confirmed that the headlines were equally partisan across the categories (similar approaches have been described previously11,19,20). See Supplementary Information section 1 for details about the pretest.

Participants in study 1 were also asked: ‘How important is it to you that you only share news articles on social media (such as Facebook and Twitter) if they are accurate?’, to which they responded on a five-point scale from ‘not at all important’ to ‘extremely important’. We also asked participants about their frequency of social media use, along with several exploratory questions about media trust. At the end of the survey, participants were asked whether they responded randomly at any point during the survey or searched for any of the headlines online (for example, via Google). As noted in our preregistration, we did not intend to exclude these individuals. Participants also completed several additional measures as part of separate investigations (this was also noted in the preregistration); namely, the seven-item cognitive reflection test19, a political knowledge questionnaire, and the positive and negative affective schedule35. In addition, participants were asked several demographic questions (age, gender, education, income, and a variety of political and religious questions). The most central political partisanship question was ‘Which of the following best describes your political preference?’ followed by the following response options: strongly Democratic; Democratic; lean Democratic; lean Republican; Republican; and strongly Republican. For purposes of data analysis, this was converted to a Democratic or Republican binary variable. The full survey is available online in both text format and as a Qualtrics file, along with all data (https://osf.io/p6u8k/).

Procedure

Participants in the accuracy condition were given the following instructions: ‘You will be presented with a series of news headlines from 2017 to 2019 (36 in total). We are interested in whether you think these headlines describe an event that actually happened in an accurate and unbiased way. Note: the images may take a moment to load.’ In the sharing condition, the middle sentence was replaced with ‘We are interested in whether you would consider sharing these stories on social media (such as Facebook or Twitter)’. We then presented participants with the full set of headlines in a random order. In the accuracy condition, participants were asked ‘To the best of your knowledge, is this claim in the above headline accurate?’ In the sharing condition, participants were asked ‘Would you consider sharing this story online (for example, through Facebook or Twitter)?’ Although these sharing decisions are hypothetical, headline-level analyses suggest that self-report sharing decisions of news articles such as those used in our study correlate strongly with actual sharing on social media36.

In both conditions, the response options were simply ‘no’ and ‘yes’. Moreover, participants saw the response options listed as either yes/no or no/yes (randomized across participants—that is, an individual participant only ever saw ‘yes’ first or ‘no’ first).

This study was approved by the University of Regina Research Ethics Board (Protocol 2018-116).

Analysis plan

Our preregistration specified that all analyses would be performed at the level of the individual item (that is, one data point per item per participant; 0 = no, 1 = yes) using linear regression with robust standard errors clustered on participant. However, we subsequently realized that we should also be clustering standard errors on headline (as multiple ratings of the same headline are non-independent in a similar way to multiple ratings from the same participant), and thus deviated from the preregistrations in this minor way (all key results are qualitatively equivalent if only clustering standard errors on participant). The linear regression was preregistered to have the following independent variables: a condition dummy (−0.5 = accuracy, 0.5 = sharing), a news type dummy (−0.5 = false, 0.5 = true), a political concordance dummy (−0.5 = discordant, 0.5 = concordant), and all two-way and three-way interactions. (Political concordance is defined based on the match between content and ideology; specifically, political concordant = pro-Democratic [pro-Republican] news (based on a pretest) for American individuals who prefer the Democratic [Republican] party over the Republican [Democratic]. Politically discordant is the opposite.) Our key prediction was that there would be a negative interaction between condition and news type, such that the difference between false and true is smaller in the sharing condition than the accuracy condition. A secondary prediction was that there would be a positive interaction between condition and concordance, such that the difference between concordant and discordant is larger in the sharing condition than the accuracy condition. We also said we would check for a three-way interaction, and use a Wald test of the relevant net coefficients to test how sharing likelihood of false concordant headlines compares to true discordant headlines. Finally, as robustness checks, we said we would repeat the main analysis using logistic regression instead of linear regression, and using ratings that are z-scored within condition.

Study 2

Study 2 extended the observation of study 1 that most people self-report that it is important to not share accuracy information on social media. First, study 2 assesses the relative, in addition to absolute, importance placed on accuracy by also asking about the importance of various other factors. Second, study 2 tested whether the results of study 1 would generalize beyond MTurk by recruiting participants from Lucid for Academics, delivering a sample that matches the distribution of American residents on age, gender, ethnicity and geographical region. Third, study 2 avoided the potential spillover effects from study 1 condition assignment suggested in Extended Data Fig. 1 by not having participants complete a task related to social media beforehand.

In total, 401 participants (mean age of 43.7) completed the survey on 9–12 January 2020, including 209 males and 184 females, and 8 indicating other gender identities. Participants were asked ‘When deciding whether to share a piece of content on social media, how important is it to you that the content is...’ and then were given a response grid where the columns were labelled ‘not at all’, ‘slightly’, ‘moderately’, ‘very’, and ‘extremely’, and the rows were labelled ‘accurate’, ‘surprising’, ‘interesting’, ‘aligned with your politics’ and ‘funny’.

This study was approved by the MIT COUHES (protocol 1806400195).

Studies 3, 4 and 5

In studies 3, 4, and 5 we investigate whether subtly shifting attention to accuracy increases the veracity of the news people are willing to share. In particular, participants were asked to judge the accuracy of a single (politically neutral) news headline at the beginning of the study, ostensibly as part of a pretest for another study. We then tested whether this accuracy-cue affects the tendency of individuals to discern between false and true news when making subsequent judgments about social media sharing. The principal advantage of this design is that the manipulation is subtle and not explicitly linked to the main task. Thus, although social desirability bias may lead people to underreport their likelihood of sharing misinformation overall, it is unlikely that any between-condition difference is driven by participants believing that the accuracy question at the beginning of the treatment condition was designed to make them take accuracy into account when making sharing decisions during the main experiment. It is therefore relatively unlikely that any treatment effect on sharing would be due to demand characteristics or social desirability.

The only difference between studies 3 and 4 was the set of headlines used, to demonstrate the generalizability of these findings. Study 5 used a more representative sample and included an active control condition and a second treatment condition that primed accuracy concerns in a different way. Studies 3 and 4 were approved by the Yale University Committee for the Use of Human Subjects (IRB protocol 1307012383). Study 5 was approved by the University of Regina Research Ethics Board (protocol 2018-116).

Participants

In study 3, we preregistered a target sample of 1,200 participants from MTurk. In total, 1,254 participants began the survey between 4–6 October 2017. However, 21 participants reporting not having a Facebook profile at the outset of the study and, as per our preregistration, were not allowed to proceed; and 71 participants did not complete the survey. The full sample (mean age of 33.7) included 453 males, 703 females, and 2 who did not answer the question. Following the main task, participants were asked whether they ‘would ever consider sharing something political on Facebook’ and were given the following response options: ‘yes’, ‘no’, and ‘I don’t use social media’. As per our preregistration, only participants who selected ‘yes’ to this question were included in our main analysis. This excluded 431 people and the sample of participants who would consider sharing political content (mean age of 34.5) included 274 males, 451 females, and 2 who did not answer the gender question.

In study 4, we preregistered a target sample of 1,200 participants from MTurk. In total, 1,328 participants began the survey between 28–30 November 2017. However, 8 participants did not report having a Facebook profile and 72 participants did not finish the survey. The full sample (mean age of 33.3) included 490 males, 757 females, and 1 who did not answer the question. Restricting to participants were responded ‘Yes’ when asked whether they ‘would ever consider sharing something political on Facebook’ excluded 468 people, such that the sample of participants who would consider sharing political content (mean age of 33.6) included 282 males, 497 females, and 1 who did not answer the gender question.

In study 5, we preregistered a target sample of 1,200 participants from Lucid. In total, 1,628 participants began the survey between 30 April and 1 May 2019. However, 236 participants reported not having a Facebook profile (and thus were not allowed to complete the survey) and 105 participants did not finish the survey. The full sample (mean age of 45.5) included 626 males and 661 females. Restricting to participants were responded ‘yes’ when asked whether they ‘would ever consider sharing something political on Facebook’ excluded 616 people, such that the sample of participants who would consider sharing political content (mean age of 44.3) included 333 males and 338 females.

Unlike in study 1, because the question about ever sharing political content was asked after the experimental manipulation (rather than at the outset of the study), there is the possibility that excluding participants who responded ‘no’ may introduce selection effects and undermine causal inference37. Although there was no significant difference in responses to this political sharing question between conditions in any of the three accuracy priming experiments (χ2 test; study 3: χ2 (1, n = 1,158) = 0.156, P = 0.69; study 4: χ2 (1, n = 1,248) = 0.988, P = 0.32; study 5, χ2 (3, n = 1,287) = 2.320, P = 0.51), for completeness we show that the results are robust to including all participants (see Supplementary Information section 2).

Materials

In study 3, we presented participants with 24 news headlines from ref. 20; in study 4, we presented participants with a different set of 24 news headlines selected via pretest; and in study 5, we presented participants with yet another set of 20 news headlines selected via pretest. In all studies, half of the headlines were false (selected from a third-party fact-checking website, www.Snopes.com, and therefore verified as being fabricated and untrue) and the other half were true (accurate and selected from mainstream news outlets to be roughly contemporary with the false news headlines). Moreover, half of the headlines were pro-Democratic or anti-Republican and the other half were pro-Republican or anti-Democrat (as determined by the pretests). See Supplementary Information section 1 for further details on the pretests.

As in study 1, after the main task, participants in studies 3–5 were asked about the importance of sharing only accurate news articles on social media (study 4 also asked about the importance participants’ friends placed on sharing only accurate news on social media). Participants then completed various exploratory measures and demographics. The demographics included the question ‘If you absolutely had to choose between only the Democratic and Republican party, which would do you prefer?’ followed by the following response options: Democratic Party or Republican Party. We use this question to classify participants as Democrats versus Republicans.

Procedure

In all three studies, participants were first asked whether they had a Facebook account, and those who did not were not permitted to complete the study. Participants were then randomly assigned to one of two conditions in studies 3 and 4, and one of four conditions in study 5.

In the ‘treatment’ condition of all three studies, participants were given the following instructions: ‘First, we would like to pretest an actual news headline for future studies. We are interested in whether people think it is accurate or not. We only need you to give your opinion about the accuracy of a single headline. We will then continue on to the primary task. Note: the image may take a moment to load.’ Participants were then shown a politically neutral headline and were asked: ‘To the best of your knowledge, how accurate is the claim in the above headline?’ and were given the following response scale: ‘not at all accurate’, ‘not very accurate’, ‘somewhat accurate’, ‘very accurate’. One of two politically neutral headlines (1 true, 1 false) was randomly selected in studies 3 and 4; one of four politically neutral headlines (2 true, 2 false) was randomly selected in study 5.

In the ‘active control’ condition of study 5, participants were instead given the following instructions: ‘First, we would like to pretest an actual news headline for future studies. We are interested in whether people think it is funny or not. We only need you to give your opinion about the funniness of a single headline. We will then continue on to the primary task. Note: the image may take a moment to load.’ They were then presented with one of the same four neutral news headlines used in the treatment condition and asked: ‘In your opinion, is the above headline funny, amusing, or entertaining?’. (Response options: extremely unfunny; moderately unfunny; slightly unfunny; slightly funny; moderately funny; extremely funny.)

In the ‘importance treatment’ condition of study 5, participants were instead asked the following question at the outset of the study: ‘Do you agree or disagree that ‘it is important to only share news content on social media that is accurate and unbiased’?’. (Response options: strongly agree to strongly disagree.)

In the ‘control’ condition of all three studies, participants received no initial instructions and proceeded directly to the next step.

Participants in all conditions were then told: ‘You will be presented with a series of news headlines from 2016 and 2017 (24 in total) [2017 and 2018 (20 in total) for study 5]. We are interested in whether you would be willing to share the story on Facebook. Note: The images may take a moment to load.’ They then proceeded to the main task in which they were presented with the true and false headlines and for each were asked ‘If you were to see the above article on Facebook, how likely would you be to share it’ and given the following response scale: ‘extremely unlikely, moderately unlikely, slightly unlikely, slightly likely, moderately likely, extremely likely’. We used a continuous scale, instead of the binary scale used in study 1, to increase the sensitivity of the measure.

Analysis plan

Our preregistrations specified that all analyses would be performed at the level of the individual item (that is, one data point per item per participant, with the six-point sharing Likert scale rescaled to the interval [0, 1]) using linear regression with robust standard errors clustered on participant. However, we subsequently realized that we should also be clustering standard errors on headline (as multiple ratings of the same headline are non-independent in a similar way to multiple ratings from the same participant), and thus deviated from the preregistrations in this minor way (all key results are qualitatively equivalent if only clustering standard errors on participant).

In studies 3 and 4, the key preregistered test was an interaction between a condition dummy (0 = control, 1 = treatment) and a news veracity dummy (0 = false, 1 = true). This was to be followed-up by tests for simple effects of news veracity in each of the two conditions; and, specifically, the effect was predicted to be larger in the treatment condition. We also planned to test for simple effects of condition for each of the two types of news; and, specifically, the effect was predicted to be larger for false relative to true news. We also conducted a post hoc analysis using a linear regression with robust standard errors clustered on participant and headline to examine the potential moderating role of a dummy for the participant’s partisanship (preference for the Democratic versus Republican party) and a dummy for the headline’s political concordance (pro-Democratic [pro-Republican] headlines scored as concordant for participants who preferred the Democratic [Republican] party; pro-Republican [pro-Democratic] headlines scored as discordant for participants who preferred the Democratic [Republican] party). For ease of interpretation, we z-scored the partisanship and concordance dummies, and then included all possible interactions in the regression model. To maximize statistical power for these moderation analyses, we pooled the data from studies 3 and 4.

In study 5, the first preregistered test was to compare whether the active and passive control conditions differed, by testing for significant a main effect of condition (0 = passive, 1 = active), or significant interaction between condition and news veracity (0 = false, 1 = true). If these did not differ, we preregistered that we would combine the two control conditions for subsequent analyses. We would then test whether the two treatment conditions differ from the control condition(s) by testing for an interaction between dummies for each treatment (0 = passive or active control, 1 = treatment being tested) and news veracity. This was to be followed-up by tests for simple effects of news veracity in each of the conditions; and, specifically, the effect was predicted to be larger in the treatment conditions. We also planned to test for simple effects of condition for each of the two types of news; and, specifically, the effect was predicted to be larger for false relative to true news.

Study 6

Studies 3, 4 and 5 found that a subtle reminder of the concept of accuracy decreased sharing of false (but not true) news. In study 6, we instead use a full-attention treatment that directly forces participants to consider the accuracy of each headline before deciding whether to share it. This allows us to determine, within this particular context, the maximum effect that can be obtained by focusing attention on accuracy. Furthermore, using the accuracy ratings elicited in the full-attention treatment, we can determine what fraction of shared content was believed to be accurate versus inaccurate by the sharer. Together, these analyses allow us to infer the fraction of sharing of false content that is attributable to inattention, confusion about veracity, and purposeful sharing of falsehood.

This study was approved by the Yale University Committee for the Use of Human Subjects (IRB protocol 1307012383).

Participants

We combine two rounds of data collection on MTurk, the first of which had 218 participants begin the study on 11 August 2017, and the second of which had 542 participants begin the study on 24 August 2017, for a total of 760 participants. However, 14 participants did not report having a Facebook profile and 33 participants did not finish the survey. The full sample (mean age of 34.0) included 331 males, 376 females, and 4 who did not answer the question. Participants were asked whether they ‘would ever consider sharing something political on Facebook’ and were given the following response options: ‘yes’, ‘no’, ‘I don’t use social media’. Only participants who selected ‘yes’ to this question were included in our main analysis, as in our other studies (there was no significant difference in responses between conditions, χ2(2) = 1.07, P = 0.585). This excluded 313 people and the final sample (mean age of 35.2) included 181 males, 213 females, and 4 who did not answer the gender question. For robustness, we also report analyses including all participants; see Extended Data Table 2.

Materials

We presented participants with the same 24 headlines used in study 3.

Procedure

Participants were first asked if they have a Facebook account and those who did not were not permitted to complete the study. Participants were then randomly assigned to one of two conditions. In the full-attention treatment condition, participants were given the following instructions: ‘You will be presented with a series of news headlines from 2016 and 2017 (24 in total). We are interested in two things: (i) Whether you think the headlines are accurate or not. (ii) Whether you would be willing to share the story on Facebook. Note: the images may take a moment to load.’ In the control condition, participants were told: ‘You will be presented with a series of news headlines from 2016 and 2017 (24 in total). We are interested in whether you would be willing to share the story on Facebook. Note: the images may take a moment to load.’ Participants in both conditions were asked ‘If you were to see the above article on Facebook, how likely would you be to share it’ and given the following response scale: ‘extremely unlikely’, ‘moderately unlikely’, ‘slightly unlikely’, ‘slightly likely’, ‘moderately likely’, ‘extremely likely’. Crucially, in the treatment condition, before being asked the social media sharing question, participants were asked: ‘To the best of your knowledge, how accurate is the claim in the above headline?’ and given the following response scale: ‘not at all accurate’, ‘not very accurate’, ‘somewhat accurate’, ‘very accurate’.

Analysis

The goal of our analyses is to determine what fraction of the sharing of false headlines is attributable to confusion (incorrectly believing the headlines are accurate), inattention (forgetting to consider the accuracy of the headlines; as per the inattention-based account), and purposeful sharing of false content (as per the preference-based account). We can do so by using the sharing intentions in both conditions, and the accuracy judgments in the ‘full-attention’ treatment (no accuracy judgments were collected in the control). Because participants in the full-attention treatment are forced to consider the accuracy of each headline before deciding whether they would share it, inattention to accuracy is entirely eliminated in the full-attention treatment. Thus, the difference in sharing of false headlines between control and full-attention treatment indicates the fraction of sharing in control that was attributable to inattention. We can then use the accuracy judgments to determine how much of the sharing of false headlines in the full-attention treatment was attributable to confusion (indicated by the fraction of shared headlines that participants rated as accurate) versus purposeful sharing (indicated by the fraction of shared headlines that participants rated as inaccurate).

Concretely, we do the analysis as follows. First, we dichotomize responses, classifying sharing intentions of ‘extremely unlikely’, ‘moderately unlikely’, and ‘slightly unlikely’ as ‘unlikely to share’ and ‘slightly likely’, ‘moderately likely’, and ‘extremely likely’ as ‘likely to share’; and classifying accuracy ratings of ‘not at all accurate’ and ‘not very accurate’ as ‘not accurate’ and ‘somewhat accurate’ and ‘very accurate’ as ‘accurate’. Then we define the fraction of sharing of false content due to each factor as follows:

In which, Fcont denotes the fraction of false headlines shared in the control; Ftreat denotes the fraction of false headlines shared in the treatment group; Ntreat denotes the number of false headlines shared in the treatment group, \({N}_{{\rm{treat}}}^{{\rm{acc}}}\) denotes the number of false headlines shared and rated accurate in the treatment group, and \({N}_{{\rm{treat}}}^{{\rm{inacc}}}\) denotes the number of false headlines shared and rated inaccurate in the treatment group.

For an intuitive visualization of these expressions, see Fig. 2d.

To calculate confidence intervals on our estimates of the relative effect of inattention, confusion, and purposeful sharing, we use bootstrapping simulations. We create 10,000 bootstrap samples by sampling with replacement at the level of the subject. For each sample, we calculate the difference in fraction of sharing of false information explained by each of the three factors (that is, the three pairwise comparisons). We then determine a two-tailed P value for each comparison by doubling the fraction of samples in which the factor that explains less of the sharing in the actual data are found to explain more of the sharing.

Preregistration

Although we did complete a preregistration in connection with this experiment, we do not follow it here. The analyses we preregistered simply tested for an effect of the manipulation on sharing discernment, as in studies 3–5. After conducting the experiment, we realized that we could analyse the data in an alternative way to gain insight into the relevant effect of the three reasons for sharing misinformation described in this Article. It is these (post hoc) analyses that we focus on. Notably, Extended Data Table 2 shows that equivalent results are obtained when analysing the two samples separately (the first being a pilot for the pre-registered experiment, and the second being the pre-registered experiment), helping to address the post hoc nature of these analyses.

Study 7

In study 7, we set out to test whether the results of the survey experiments in studies 3–5 would generalize to real sharing decisions ‘in the wild’, and to misleading but not blatantly false news. Thus, we conducted a digital field experiment on Twitter in which we delivered the same intervention from the ‘treatment’ condition of the survey experiments to users who had previously shared links to unreliable news sites. We then examined the effect of receiving the intervention on the quality of the news that they subsequently shared. The experiment was approved by Yale University Committee of the Use of Human Subjects IRB protocol 2000022539 and MIT COUHES Protocol 1806393160. Although all analysis code is posted online, we did not publicly post the data owing to privacy concerns (even with de-identified data, it may be possible to identify many of the users in the dataset by matching their tweet histories with publicly available data from Twitter). Researchers interested in accessing the data are asked to contact the corresponding authors.

Study 7 is an aggregation of three different waves of data collection, the details of which are summarized in Extended Data Table 3. (These are all of the data that we collected, and the decision to conclude the data collection was made before running any of the analyses reported in this Article.)

Participants

The basic experimental design involved sending a private direct message to users asking them to rate the accuracy of a headline (as in the ‘treatment’ condition of the survey experiments). Twitter only allows direct messages to be sent from account X to account Y if account Y follows account X. Thus, our first task was to assemble a set of accounts with a substantial number of followers (who we could then send direct messages to). In particular, we needed followers who were likely to share misinformation. Our approach was as follows.

First, we created a list of tweets with links to one of two news sites that professional fact-checkers rated as extremely untrustworthy27 but that are nonetheless fairly popular: www.Breitbart.com and www.infowars.com. We identified these tweets by (i) retrieving the timeline of the Breitbart Twitter account using the Twitter REST API (Infowars had been banned from Twitter when we were conducting our experiment and thus had no Twitter account), and (ii) searching for tweets that contain a link to the corresponding domain using the Twitter advanced search feature and collecting the tweet IDs either manually (wave 1) or via scraping (waves 2 and 3). Next, we used the Twitter API to retrieve lists of users who retweeted each of those tweets (we periodically fetched the list of ‘retweeters’ because the Twitter API only provides the last 100 users ‘retweeters’ of a given tweet). As shown in Extended Data Table 3, across the three waves this process yielded a potential participant list of 136,379 total Twitter users with some history of retweeting links to misleading news sites.

Next, we created a series of accounts with innocuous names (for example, ‘CookingBot’); we created new accounts for each experimental wave. Each of the users in the potential participant list was then randomly assigned to be followed by one of our accounts. We relied on the tendency of Twitter users to reciprocally follow-back to create our set of followers. Indeed, 8.3% of the users that were followed by one of our accounts chose to follow our account back. This yielded a total of 11,364 followers across the three waves. (After the completion of our experiments, Twitter has made it substantially harder to follow large numbers of accounts without getting suspended, which creates a challenge for using this approach in future work; a solution is to use the targeted advertising on Twitter to target adverts whose goal is the accruing of followers as the set of users one would like to have in one’s subject pool.)

To determine eligibility and to allow blocked randomization, we then identified (i) users’ political ideology using the algorithm from Barberá et al.38; (ii) the probability of them being a bot, using the bot-or-not algorithm39; (iii) the number of tweets to one of the 60 websites with fact-checker ratings that will form our quality measure; and (iv) the average fact-checker rating (quality score) across those tweets.

For waves 1 and 2, we excluded users who tweeted no links to any of the 60 sites in our list in the two weeks before the experiment; who could not be given an ideology score; who could not be given a bot score; or who had a bot score above 0.5 (in wave 1, we also excluded a small number of very high-frequency tweeters for whom we were unable to retrieve all relevant tweets due to the 3,200-tweet limit of the Twitter API). In wave 3, we took a different approach to avoiding bots, namely avoiding high-frequency tweeters. Specifically, we excluded participants who tweeted more than 30 links to one of the 60 sites in our list in the two weeks before the experiment, as well as excluding those who tweeted fewer than 5 links to one of the 60 sites (to avoid lack of signal). This resulted in a total of 5,379 unique Twitter users across the three waves. (Note that these exclusions were applied ex ante, and excluded users were not included in the experiment, rather than implementing post hoc exclusions.)

One might be concerned about systematic differences between the users we included in our experiments versus those who we followed but did not follow us back. To gain some insight into this question, we compared the characteristics of the 5,379 users in our experiment to a random sample of 10,000 users that we followed but did follow us back (sampled proportional to the number of users in each wave). For each user we retrieved number of followers, number of accounts followed, number of favourites, and number of tweets. We also estimated political ideology as per Barberá et al.38, probability of being a bot39, and age and gender using based on profile pictures using the Face Plus Plus algorithm40,41,42. Finally, we checked whether the account had been suspended or deleted. As shown in Extended Data Fig. 5, relative to users who did not follow us back, the users that took part in our experiment followed more accounts, had more followers, selected more favourite tweets, were more conservative, were older, and were more likely to be bots (P < 0.001 for all); and were also more likely to have had their accounts suspended or deleted (P = 0.012). These observations suggest that to the extent that our recruitment process induced selection, it is in a direction that works against the effectiveness of our treatment: the users in our experiment are likely to be less receptive to the intervention than users more generally, and therefore our effect size is likely to be an underestimate of the effect we would have observed in the full sample.

Materials and procedure

The treatment in study 7 was very similar to the survey experiments. Users were sent a direct message asking them to rate the accuracy of a single non-political headline (Fig. 4b). An advantage of our design is that this direct message is coming from an account that the user has themselves opted in to following, rather than from a totally unknown account. Furthermore, the direct message begins by saying ‘Thanks for following me!’ and sending such thank-you direct messages is a common practice on Twitter. These factors should substantially mitigate any possibility of the users feeling suspicious or that they are being surveilled by our account, and instead make the direct message appear more a typical interaction on Twitter.

We did not expect users to respond to our message. Instead, our intervention was based on the idea that merely reading the opening line (‘How accurate is this headline?’) would make the concept of accuracy more salient. Because we could not reliably observe whether (or when) users read the message (because many users’ privacy settings prevent the sending of read-receipts), we performed intent-to-treat analyses that included all subjects and assumed that treatment began as soon as the message was sent. Furthermore, to avoid demand effects, users were not informed that the message was being sent as part of a research study, and the accounts from which we sent the messages had innocuous descriptions (such as ‘Cooking Bot’). Not informing users about the study was essential for ecological validity, and we felt that the scientific and practical benefits justified this approach given that the potential harm to participants was minimal, and the tweet data were all publicly available. See Supplementary Information section 4 for more discussion on the ethics of digital field experimentation.

Because of the rate limits of direct message imposed by Twitter, we could only send direct message to roughly 20 users per account per day. Thus, we conducted each wave in a series of 24-h blocks in which a small subset of users was sent a direct message each day. All tweets and retweets posted by all users in the experiment were collected on each day of the experiment. All links in these tweets were extracted (including expanding shortened URLs). The dataset was thus composed of the subset of these links that linked to one of 60 sites whose trustworthiness had been rated by professional fact-checkers in previous work27 (with the data entry for a given observation being the trust score of the linked site).

To allow for causal inference, we used a stepped-wedge (also called randomized roll-out) design in which users were randomly assigned to a treatment date. This allows us to analyse tweets made during each of the 24-h treatment blocks, comparing tweets from users who received the direct message at the start of a given block (‘treated’) to tweets from users who had not yet been sent a direct message (‘control). Because the treatment date is randomly assigned, it can be inferred that any systematic difference revealed by this comparison was caused by the treatment. (Wave 2 also included a subset of users who were randomly assigned to never receive the direct message.) To improve the precision of our estimate, random assignment to treatment date was approximately balanced across bot accounts in all waves, and across political ideology, number of tweets to rated sites in the two weeks before the experiment, and average quality of those tweets across treatment dates in waves 2 and 3.

Because our treatment was delivered via the Twitter API, we were vulnerable to unpredictable changes to, and unstated rules of, the API. These gave rise to several deviations from our planned procedure. On day 2 of wave 1, fewer than planned direct messages were sent as our accounts were blocked part way through the day; and no direct messages were sent on day 3 of wave 1 (hence, that day is not included in the experimental dataset). On day 2 of wave 2, Twitter disabled the direct message feature of the API for the day, so we were unable to send the direct messages in an automated fashion as planned. Instead, all 370 direct messages sent on that day were sent manually over the course of several hours (rather than simultaneously). On day 3 of wave 2, the API was once again functional, but partway through sending the direct messages, the credentials for our accounts were revoked and no further direct messages were sent. As a result, only 184 of the planned 369 direct messages were sent on that day. Furthermore, because we did not randomize the order of users across stratification blocks, the users on day 3 who were not sent a direct message were systematically different from those who were sent a direct message. (As discussed in detail below, we consider analyses that use an intent-to-treat approach for wave 2 day 3—treating the data as if all 369 direct messages had indeed been sent—as well as analyses that exclude the data from wave 2 day 3.)

Analysis plan

As the experimental design and the data were substantially more complex than the survey experiment studies and we lacked well-established models to follow, it was not straightforward to determine the optimal way to analyse the data in study 7. This is reflected, for example, in the fact that wave 1 was not preregistered, two different preregistrations were submitted for wave 2 (one before data collection and one following data collection but before analysing the data), and one preregistration was submitted for wave 3, and each of the preregistrations stipulated a different analysis plan. Moreover, after completing all three waves, we realized that all of the analyses proposed in the preregistrations do not actually yield valid causal inferences because of issues involving missing data (as discussed in more detail below in the ‘Dependent variable’ section). Therefore, instead of conducting a particular preregistered analysis, we consider the pattern of results across a range of reasonable analyses.

All analyses are conducted at the user–day level using linear regression with heteroscedasticity-robust standard errors clustered on user. All analyses include all users on a given day who have not yet received the direct message as well as users who received the direct message on that day (users who received the direct message more than 24 h before the given day are not included). All analyses use a post-treatment dummy (0 = user has not yet been sent a direct message, 1 = user received the direct message that day) as the key independent variable. We note that this is an intent-to-treat approach that assumes that all direct messages on a given day are sent at exactly the same time, and counts all tweets in the subsequent 24-h block as post-treatment. Thus, to the extent that technical issues caused tweets on a given day to be sent earlier or later than the specified time, this approach may underestimate the treatment effect.

The analyses we consider differ in the following ways: dependent variable, model specification, type of tweet considered, approach to handling randomization failure, and approach to determining statistical significance. We now discuss each of these dimensions in more detail.

1. Dependent variable

We consider three different ways of quantifying tweet quality. Across approaches, a key issue is how to deal with missing data. Specifically, on days when a given user does not tweet any links to rated sites, the quality of their tweeted links is undefined. The approach implied in our preregistrations was to simply omit missing user–days (or to conduct analyses at the level of the tweet). Because the treatment is expected to influence the probability of tweeting, however, omitting missing user–days has the potential to create selection and thus undermine causal inference (and tweet-level analyses are even more problematic). For example, if a user tweets as a result of being treated but would not have tweeted had they been in the control (or does not tweet as a result of treatment but would have tweeted have they been in the control), then omitting the missing user–days breaks the independence between treatment and potential outcomes ensured by random assignment. Given that only 47.0% of user-days contained at least one tweeted link to a rated site, such issues are potentially quite problematic. We therefore consider three approaches to tweet quality that avoid this missing data problem.

The first measure is the average relative quality score. This measure assigns each tweeted link a relative quality score by taking the previously described fact-checker trust rating27 (quality score, [0, 1], available for 60 news sites) of the domain being linked to, and subtracting the baseline quality score (the average quality score of all pre-treatment tweets across all users in all of the experimental days). Each user–day is then assigned an average relative quality score by averaging the relative quality score of all tweets made by the user in question on the day in question; and users who did not tweet on a given day are assigned an average relative quality score of 0 (thus avoiding the missing data problem). Importantly, this measure is quite conservative because the (roughly half of) post-treatment user–days in which data are missing are scored as ‘0’. Thus, this measure assumes that the treatment had no effect on users who did not tweet on the treatment day. If, instead, non-tweeting users would have shown the same effect had they actually tweeted, the estimated effect size would be roughly twice as large as what we observed here. We note that this measure is equivalent to using average quality scores (rather than relative quality score) and imputing the baseline quality score to fill missing data (so assuming that on missing days, the user’s behaviour matches the pre-treatment average).