I feel fortunate to have witnessed two total solar eclipses in my lifetime. The first was at Center Hill Lake in central Tennessee in 2017; the second was this year’s (April 8) eclipse, seen from Paducah, Kentucky. Given my age (68), I doubt I will see another.

Many people who have not witnessed one look at the resulting photos and say, “So what?” When I look at most of the photos (including the ones I’ve taken) I can tell you that those photos do not fully reflect the visual experience. More on that in a minute.

Having daytime transition into night in a matter of seconds is one part of the experience, with the sounds of nature swiftly changing as birds and frogs suddenly realize, “Night sure came quickly today!”

It’s also cool to hear people around you respond to what they are witnessing. The air temperature becomes noticeably cooler. Scattered low clouds that might have threatened to get in the way mostly disappear, just as they do after sunset.

But why are so many photos of the event… well… underwhelming? After thinking about this over the past week, I believe the answer lies in the extreme range of brightness a solar eclipse produces that cameras (even good ones) have difficulty capturing. This is why individual photos you see will often look different from one another. Depending upon camera exposure settings, you will see different features.

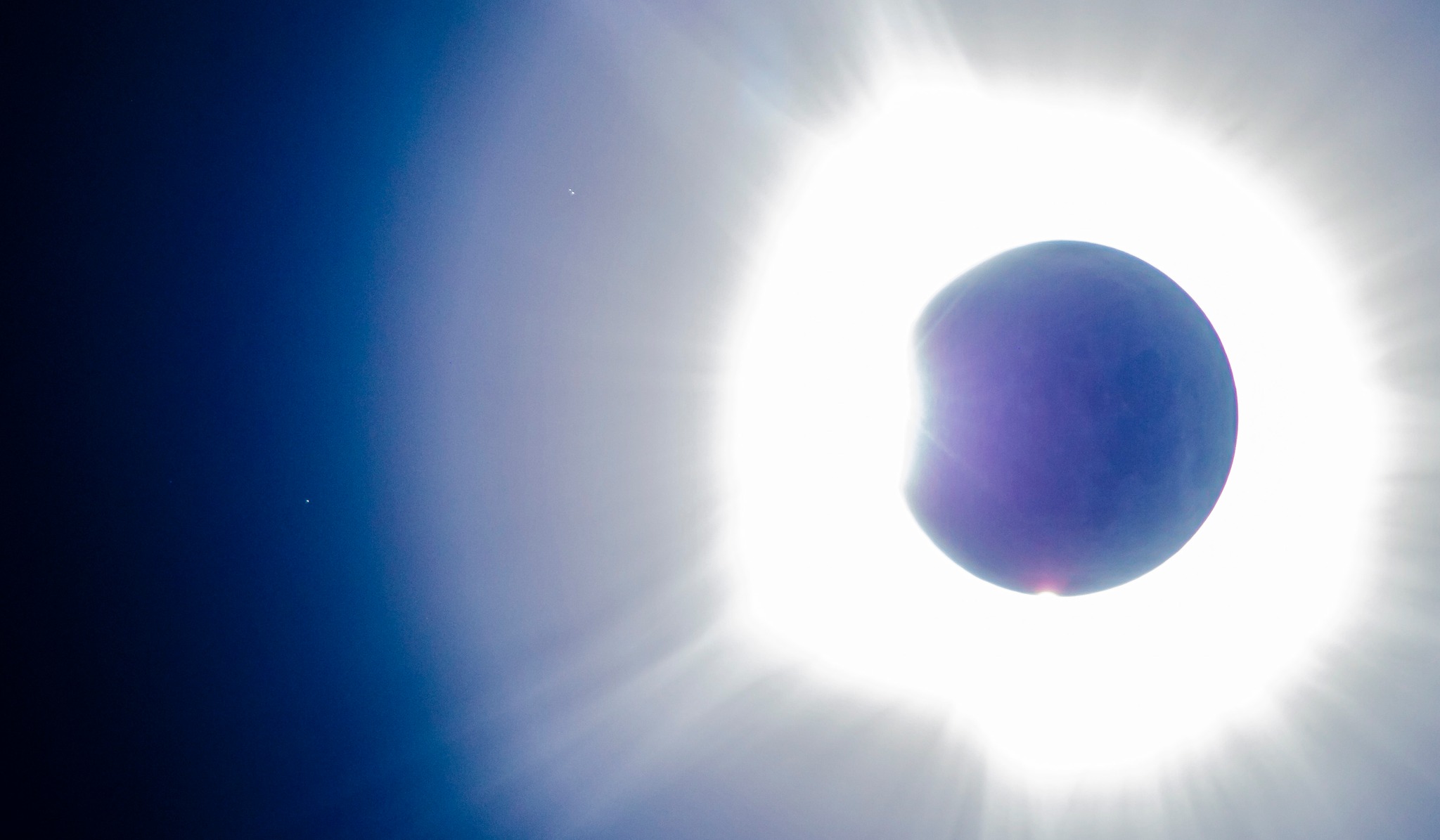

This was made very apparent to me during this year’s eclipse. Due to terrible eclipse traffic, we had to stop short of our intended destination, and I had only 10 minutes to set up a telescope and two cameras, so some of my advance planning went out the window. I was watching the “diamond” of the diamond ring phase of totality, as the last little bit of direct sunlight disappears behind the moon. At that point, it is (in my opinion) possible with the naked eye to perceive a dynamic range greater than any other scene in nature: from direct sunlight of the tiny “diamond” to the adjacent night sky with stars. I took the following photo with a Canon 6D MkII camera with 560 mm of stacked Canon lenses, which (barely) shows this extreme range of brightness.

In order to pull out the faint Earthshine on the moon’s dark side in this photo, and the stars to the left and upper-left, I had to stretch this exposure by quite a lot.

From what I have read (and experienced) the human eye/brain combination can perceive a greater dynamic range of brightness than a camera can. This is why photographers have to fool so much with camera settings to capture what their eyes see. In this case, I perceived that the “diamond” of direct sunlight was (of course) blindingly bright, while the sun’s corona extending 2 to 3 solar diameters away from the sun was much less bright (in fact, the solar corona is not even as bright as a full moon). But in this single photo, both the diamond and the corona were basically at the maximum brightness the camera could capture at this exposure setting (0.5 sec, ISO 400, f/5.6), even though visually they had very different brightnesses.
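To make the clipping idea concrete, here is a minimal sketch in Python. It is not how any real camera processes an image. The relative brightness values, the `gain` numbers, and the idealized 14-bit linear sensor are all assumptions I made up for illustration, not measurements from the eclipse. It only shows how two features with very different brightnesses can both end up at the same maximum value in a single exposure.

```python
import math

# Hypothetical relative brightnesses in arbitrary linear units.
# These are illustrative guesses, NOT measurements from the eclipse.
SCENE = {
    "diamond": 1_000_000.0,
    "inner corona": 1_000.0,
    "earthshine": 1.0,
}

# Assumption: an idealized linear sensor whose samples top out at the
# largest 14-bit value. Real sensors also have noise and non-linearity.
FULL_SCALE = 2**14 - 1


def record(brightness, gain):
    """Value the idealized sensor records: scaled, rounded, then clipped."""
    return min(round(brightness * gain), FULL_SCALE)


def expose(gain):
    """Record every scene feature at one exposure (gain stands in for
    the combined effect of shutter time, ISO, and aperture)."""
    return {name: record(value, gain) for name, value in SCENE.items()}


def stops(ratio):
    """Convert a brightness ratio to photographic stops (factors of two)."""
    return math.log2(ratio)


long_exposure = expose(gain=100.0)   # bright enough to show earthshine
short_exposure = expose(gain=0.01)   # dark enough to keep the diamond

scene_range = stops(SCENE["diamond"] / SCENE["earthshine"])
sensor_range = stops(FULL_SCALE)

print("long exposure: ", long_exposure)
print("short exposure:", short_exposure)
print(f"scene range:  {scene_range:.1f} stops")
print(f"sensor range: {sensor_range:.1f} stops")
```

With these made-up numbers, the long exposure records the diamond and the corona at the same clipped value while still registering the earthshine, and the short exposure separates the diamond from the corona but loses the earthshine entirely. No single `gain` keeps all three, because the assumed scene spans more stops than the assumed sensor does.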

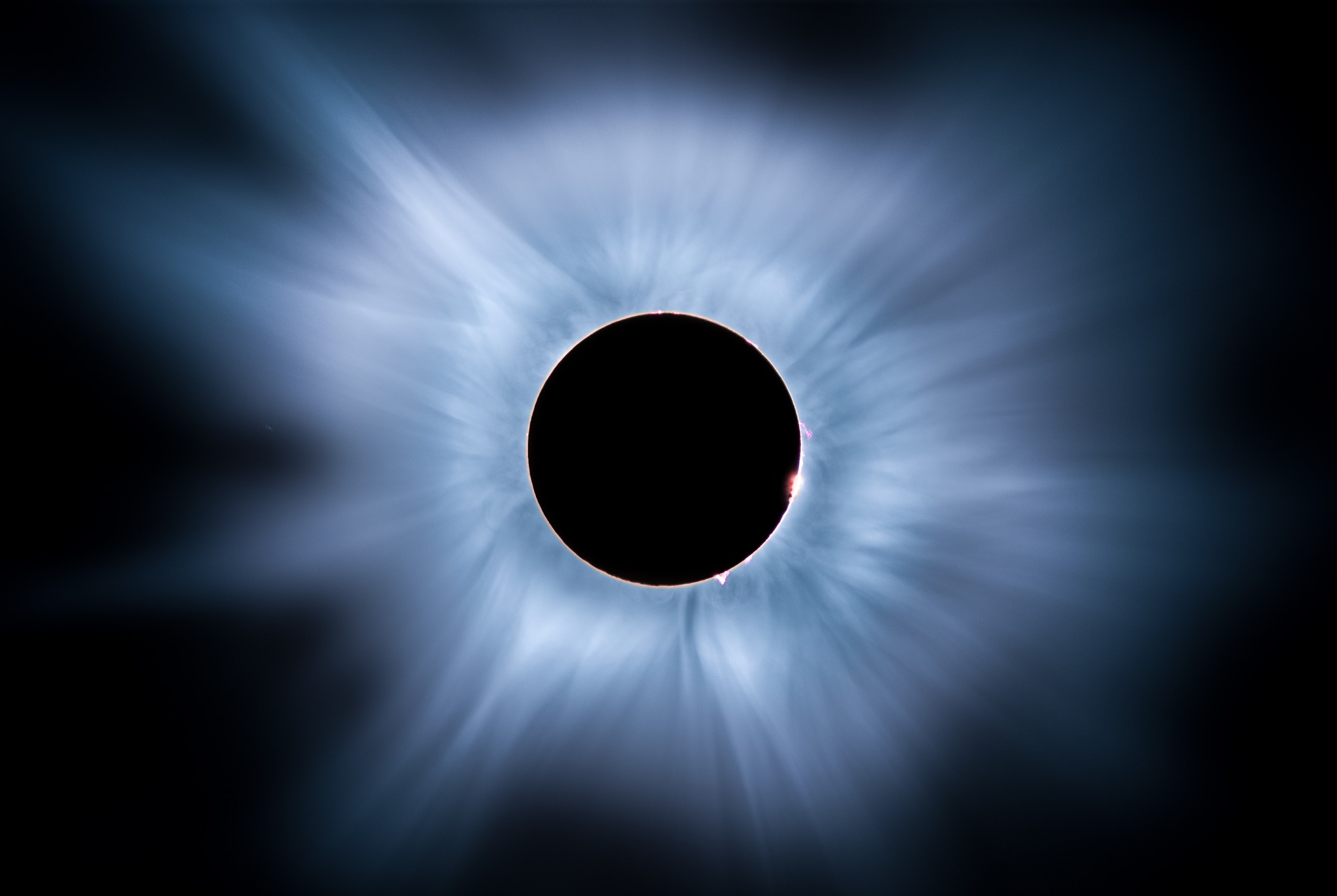

Many of the better photos you will find are composites of multiple photos taken over a very wide range of camera settings, which more closely approximate what the eye sees. I found this one that seems closer to what I witnessed (photo by Mark Goodman):

So, if you have never experienced a total solar eclipse, and are underwhelmed by the photos you see, I submit that the actual experience is much more dramatic than the photos indicate.

Here’s some unedited real-time video I took with my Sony A7SII camera mounted on a Skywatcher Esprit ED80 refractor telescope. We were in a Pilot Travel Center parking lot with about a dozen other cars that also didn’t make it to their destinations due to the traffic. I used a solar filter until just before totality, then removed the filter. The camera is on an automatic exposure setting. I’ve done no color grading of the video. Skip ahead to the 3-minute mark to catch the transition to totality:
