Peer Reviewed

Misinformation more likely to use non-specific authority references: Twitter analysis of two COVID-19 myths

This research examines the content, timing, and spread of COVID-19 misinformation and subsequent debunking efforts for two COVID-19 myths. COVID-19 misinformation tweets included more non-specific authority references (e.g., “Taiwanese experts”, “a doctor friend”), while debunking tweets included more specific and verifiable authority references (e.g., the CDC, the World Health Organization, Snopes). Findings illustrate a delayed debunking response to COVID-19 misinformation, as it took seven days for debunking tweets to match the quantity of misinformation tweets. The use of non-specific authority references in tweets was associated with decreased tweet engagement, suggesting the importance of citing specific sources when refuting health misinformation.

Research Questions

- How did COVID-19 misinformation tweets and debunking tweets differ in their use of authority references?

- How long did it take COVID-19 debunking tweets to match the quantity of COVID-19 misinformation tweets?

- How did the use of authority references relate to the spread of COVID-19 misinformation?

Essay Summary

- Twitter posts related to COVID-19 misinformation were collected, coded, and analyzed.

- Misinformation tweets received a head start that provided early momentum. It took seven days for debunking tweets to equal the quantity of misinformation tweets, a delay that provided opportunity for misinformation to spread on social networks. After the inflection point at seven days, the number of debunking tweets accelerated and outpaced misinformation.

- The use of authority references in COVID-19 tweets related to user engagement. Tweets that included references to non-specific authority sources were less likely to receive likes or retweets from other users.

- Misinformation tweets were more likely to reference non-specific authority sources (e.g., “Taiwanese researchers”, “top medical officials”). Debunking tweets were more likely to reference specific or verifiable authority sources (e.g., the CDC, the World Health Organization, a Snopes article debunking the claim).

- This research suggests that references to non-specific authority sources should serve as a warning sign of possible misinformation. If a recommendation is attributed to a general, non-specific authority source, users should treat it with heightened suspicion.

Implications

As coronavirus infections surged globally, people turned to social media to learn how to protect themselves from the emergent threat (Volkin, 2020). Social media is an important tool for communicating information during times of crisis (Austin et al., 2012; Houston et al., 2014). However, misinformation about COVID-19 followed the trail of the virus and spread person-to-person through online social networks (Frenkel et al., 2020). Misinformation related to COVID-19 included misleading, false, and manipulated content (Brennen et al., 2020). Misleading information exacerbates health problems and increases the workload of health organizations, which must expend resources on quashing the false claims (van der Meer & Jin, 2020).

The COVID-19 “infodemic” created a multi-layered problem for risk communication (Krause et al., 2020). In addition to the health risk of contracting COVID-19, searching online for information became a risk in itself through the possibility of encountering false claims (Krause et al., 2020). Twitter users often fail to detect rumors (Wang & Zhuang, 2018). Misinformation about infectious diseases has focused primarily on issues related to transmission, prevention, treatment, and vaccination (Nsoesie & Oladeji, 2020). The false claims in misinformation can produce negative health outcomes and lead people to take harmful actions in search of a remedy, such as gargling bleach in an attempt to eliminate a virus.

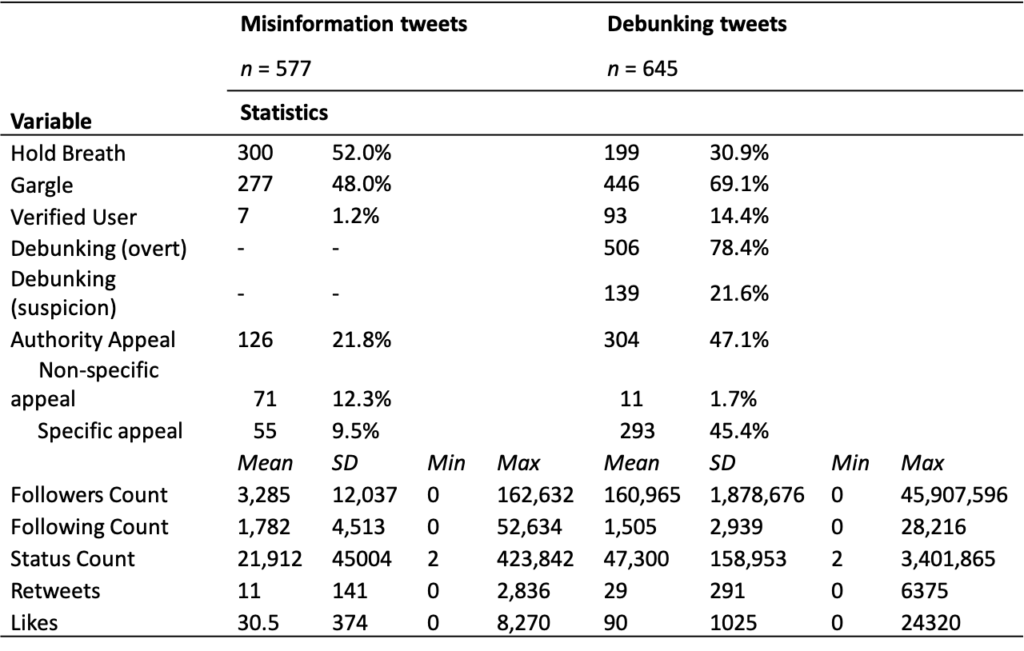

Misinformation tweets and debunking tweets differed in their use of authority references. Misinformation tweets were more likely to attribute information to non-verifiable authority sources, such as “Taiwanese researchers” or “health experts.” Debunking efforts included more specific and verifiable authority appeals, such as providing a link to a fact-checking article from Snopes, or attributing the information to a specific organization, such as the CDC. In addition, debunking tweets were more likely to come from verified users. In our sample, 93 of 645 (14.4%) debunking tweets came from verified users, compared to seven of 577 (1.2%) misinformation tweets, indicating verified users were mostly not responsible for posting the misinformation. However, verified users often have larger follower counts and may create greater total impressions, or tweet views, due to the size of their following (Wang & Zhuang, 2017). During crisis events, users interact most frequently with verified users (Hunt et al., 2020). Future research should examine specifically the relationship between verified users, tweet timing, and total impressions during surging misinformation events.

Our results indicate that references to non-specific authority sources should act as a signal to increase one’s guard against potential misinformation. Users should treat a general attribution to authority as a cue to question the accuracy of a tweet, as misinformation tweets were more likely to include ambiguous, non-specific authority references (e.g., “medical professionals”, “a nurse friend”). Intentional deliberation can reduce misinformation spread (Bago et al., 2020). To put these findings in context, research should examine the prevalence of non-specific and specific authority appeals in different health contexts. Current results suggest the appearance of an ambiguous authority appeal in health messages should lead people to pause and deliberate on the accuracy of the claim.

The use of authority appeals in COVID-19 tweets related to user engagement. Misinformation tweets with non-specific authority references were less likely to receive engagement from other users in the form of likes or retweets. The inclusion of specific authority references did not increase or decrease the likelihood of tweet engagement by other users. Future work can advance these findings by examining categories of abstract and specific authority appeals and their effect on tweet engagement. Likes on social media influence credibility perceptions of health information (Borah & Xiao, 2018), and credibility can affect intentions to adopt healthy behaviors (Hu & Sundar, 2010). The inclusion of authority appeals may also influence credibility judgments of misinformation and debunking tweets. Source credibility is paramount when attempting to correct inaccurate health information (Bode & Vraga, 2018).

Debunking represents an important public strategy to reduce the persistence of misinformation (Chan et al., 2017). For debunking to be necessary, the misinformation must first occur. However, findings indicate that debunking tweets lagged behind misinformation by several days before accelerating in frequency. The delay between misinformation emergence and debunking response provided an opportunity for the misinformation to spread. The observed delay in debunking response is problematic because even minimally repetitive exposure to fake news can increase the perceived accuracy of the information (Pennycook et al., 2018). Once people believe misinformation, they tend to do so with strong conviction and correcting the information becomes more difficult (Lewandowsky et al., 2012).

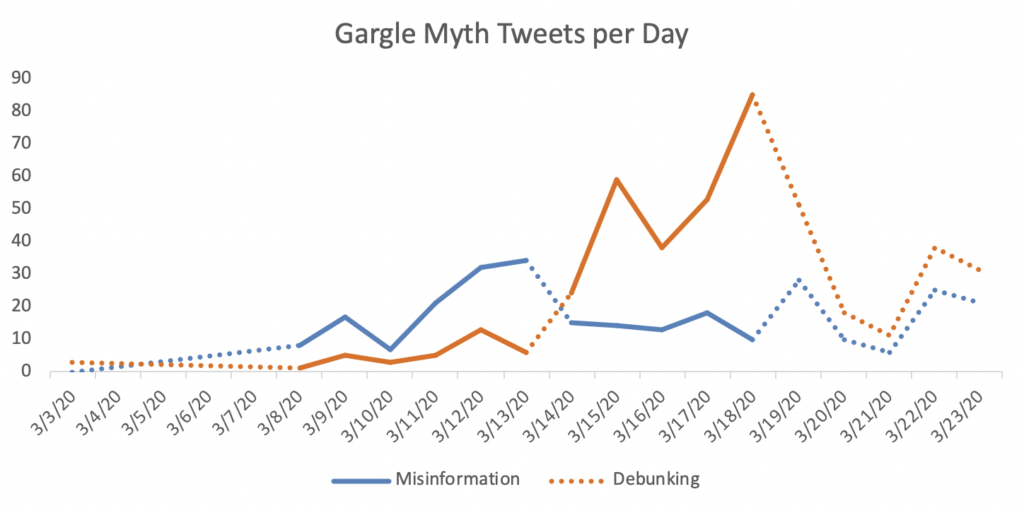

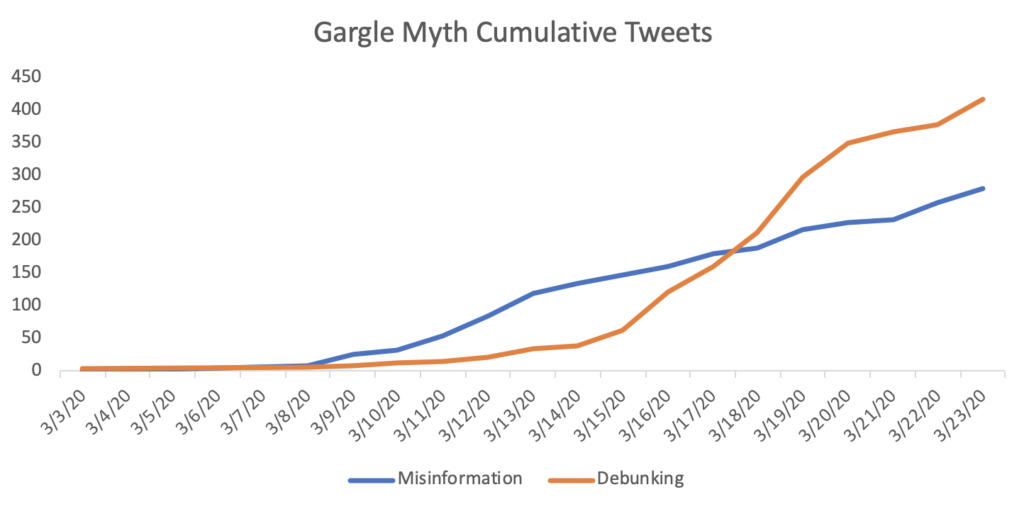

Improving the efficacy of debunking efforts remains a priority (van der Meer & Jin, 2020). The current results indicate that avoiding non-specific authority references is a good first step for debunking efforts, as non-specific authority references had a negative relationship with tweet engagement. In our sample, only 1.7% of debunking tweets included a non-specific authority appeal. Twitter users frequently provide website links to debunk false rumors (Hunt et al., 2020). Debunking efforts should focus on including links and appeals to specific authority references. Of note, the quantity of debunking efforts differed between the two myths analyzed. Specifically, users devoted greater attention to debunking the gargle myth than the hold breath myth, perhaps because gargling liquids such as bleach presents a greater immediate threat to people’s health. This result suggests the perceived danger of health misinformation may affect the likelihood of a debunking response. When the perceived danger of the misinformation is high, people may be more motivated to debunk the myth.

Proactive communication is vital for the successful reduction of COVID-19 misinformation (Jamieson & Albarracín, 2020). Prompting users to ask why they believe the information to be true can be an effective gatekeeping device to reduce sharing of false claims (Fazio, 2020). However, misinformation is often blended with accurate information, making it difficult for users to discern myth from fact. For example, the claim that people should gargle a liquid to avoid COVID-19 was frequently embedded with other health recommendations, such as getting adequate sleep or exercise. Understanding the effects of messages with complete misinformation versus partial misinformation remains a priority for future research.

Users and organizations must keep in mind that misinformation campaigns related to COVID-19 are far from over. Rather, organizations should anticipate and expect misinformation attempts regarding future COVID-19 issues such as vaccine development, testing, and treatment. A faster response time for debunking efforts will be critical for halting future misinformation efforts before they build momentum. Organizations should treat misinformation campaigns as a known threat, fully expecting misinformation to spread during a crisis rather than being surprised by the surplus of myths and false claims. Anticipating misinformation attempts may speed up future debunking efforts and strengthen their efficacy.

Findings

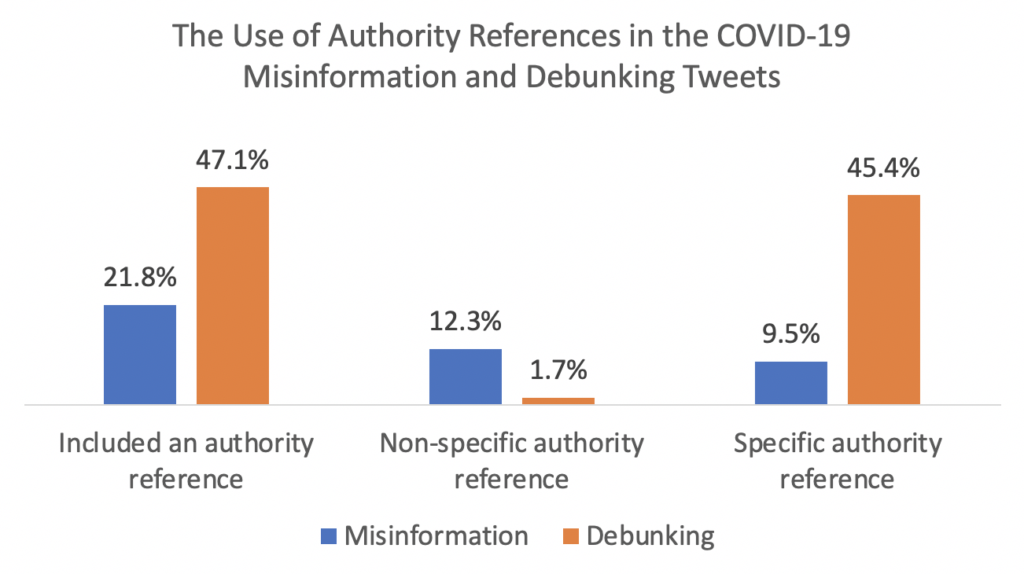

Finding 1: Misinformation and debunking tweets included two types of authority appeals: non-specific authority references and specific authority references. Misinformation tweets included more appeals to non-specific authority references. Debunking tweets included more appeals to specific authority references.

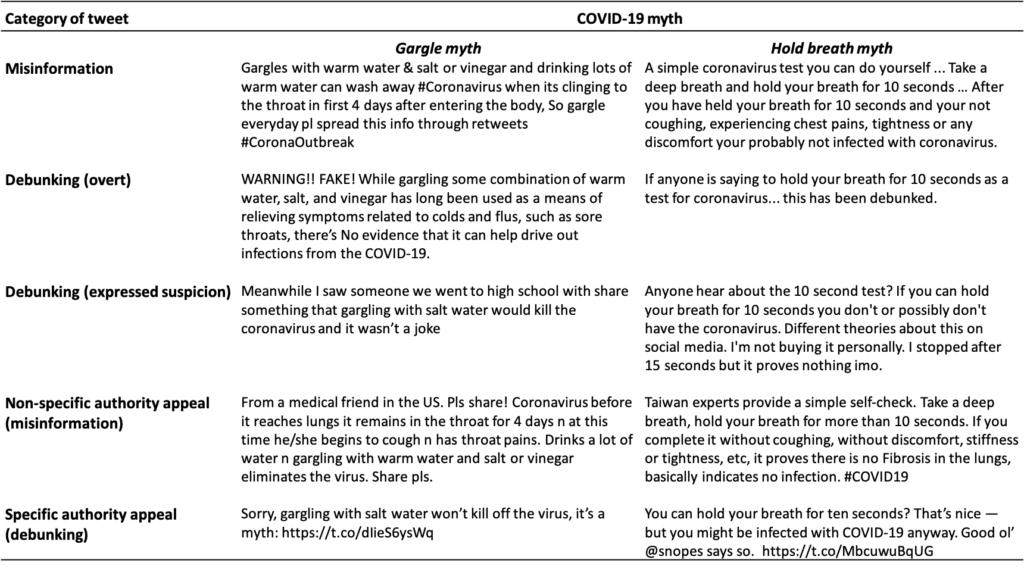

Misinformation tweets and debunking tweets differed in their use of authority appeals. Two types of authority references appeared in the tweets, including appeals to non-specific authority references and appeals to specific authority references. For example, tweets could include a non-specific authority reference (e.g., “Taiwanese experts”, “top medical officials”) or a specific authority reference (e.g., the CDC, Factcheck.org). Misinformation tweets were significantly more likely than debunking tweets to include a non-specific authority reference (12.3% of misinformation tweets vs. 1.7% of debunking tweets). Debunking tweets were significantly more likely than misinformation tweets to include appeals to specific authority references (45.4% of debunking tweets vs. 9.5% of misinformation tweets), χ²(2, n = 1222) = 218.84, p < .001.

Misinformation tweets and debunking tweets also differed in how frequently they included an authority reference. Overall, debunking tweets were more likely to include an authority reference than misinformation tweets. Misinformation tweets used an authority appeal in 126 of 577 tweets (21.8%). Debunking tweets included an authority appeal in 304 of 645 tweets (47.1%), which is significantly more often (χ²[1, n = 1222] = 85.44, p < .001).
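For readers who want to check the arithmetic behind these comparisons, the following is a minimal sketch of a Pearson chi-square calculation on the two contingency tables. The 2 × 2 counts (126 of 577 and 304 of 645) are taken from the text. The 2 × 3 counts are an assumption: they are reconstructed here from the rounded percentages reported above and are not stated in the article. The function name `pearson_chi_square` is illustrative, and no continuity correction is applied.

```python
from typing import Sequence


def pearson_chi_square(table: Sequence[Sequence[float]]) -> float:
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2


# Rows: misinformation, debunking. Columns: authority appeal, no appeal.
# These counts come directly from the text.
any_authority = [[126, 577 - 126], [304, 645 - 304]]

# Rows: misinformation, debunking. Columns: non-specific, specific, none.
# ASSUMPTION: counts reconstructed from the rounded percentages in the text.
by_type = [[71, 55, 451], [11, 293, 341]]

chi2_any = pearson_chi_square(any_authority)  # df = 1
chi2_type = pearson_chi_square(by_type)       # df = 2

print(f"any authority appeal: chi2 = {chi2_any:.2f}")
print(f"appeal type:          chi2 = {chi2_type:.2f}")
```

Under these assumptions, both statistics should land close to the values reported above, which suggests the reported tests were uncorrected Pearson chi-square tests.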

Finding 2: Debunking tweets lagged behind misinformation tweets before accelerating in frequency. Seven days passed before debunking tweets matched the quantity of misinformation tweets.

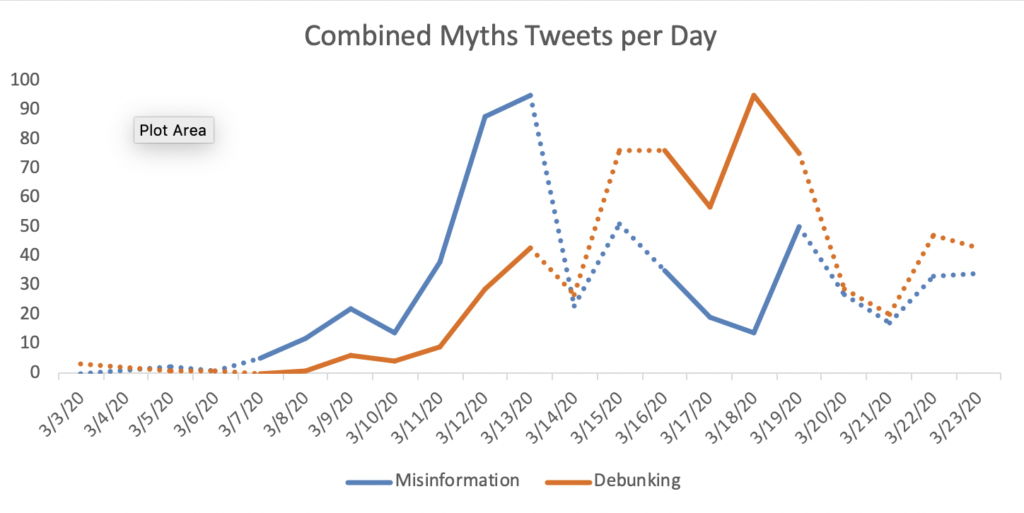

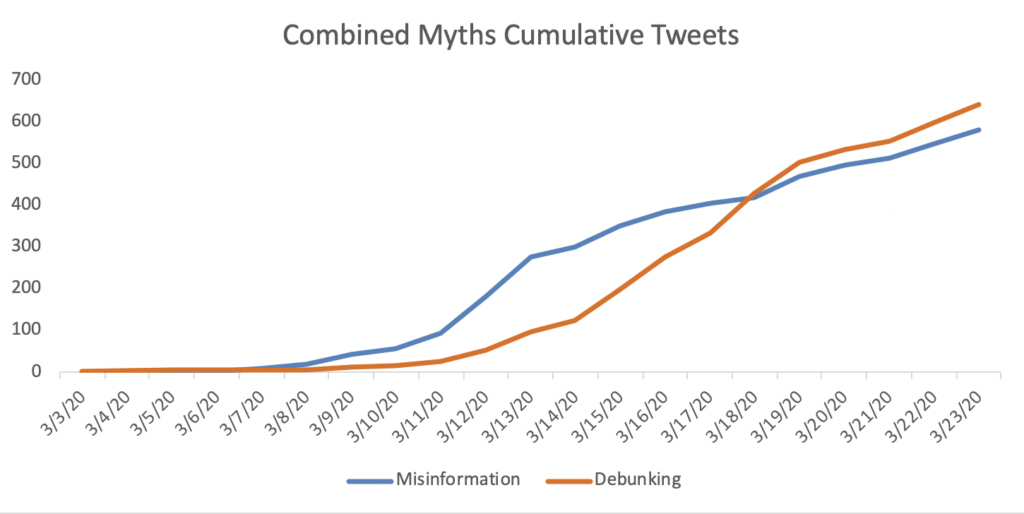

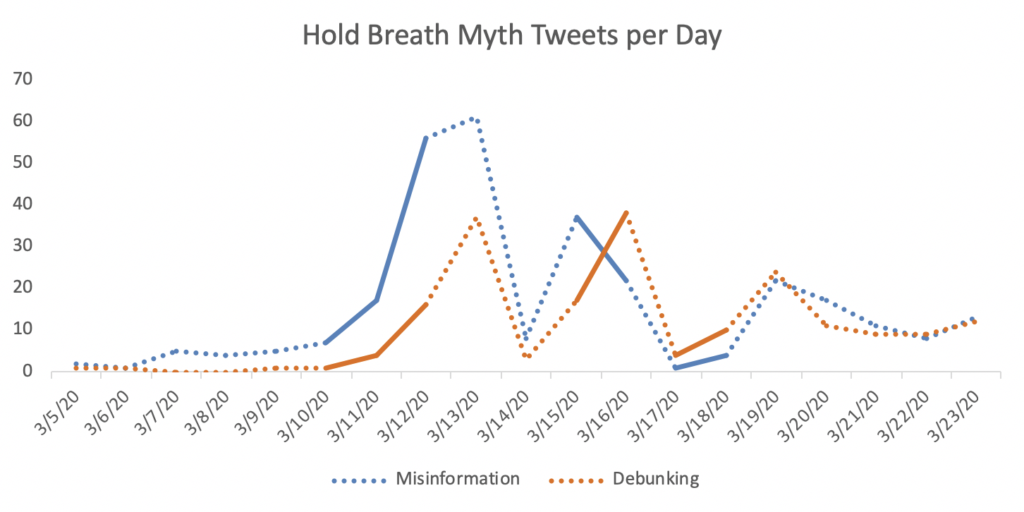

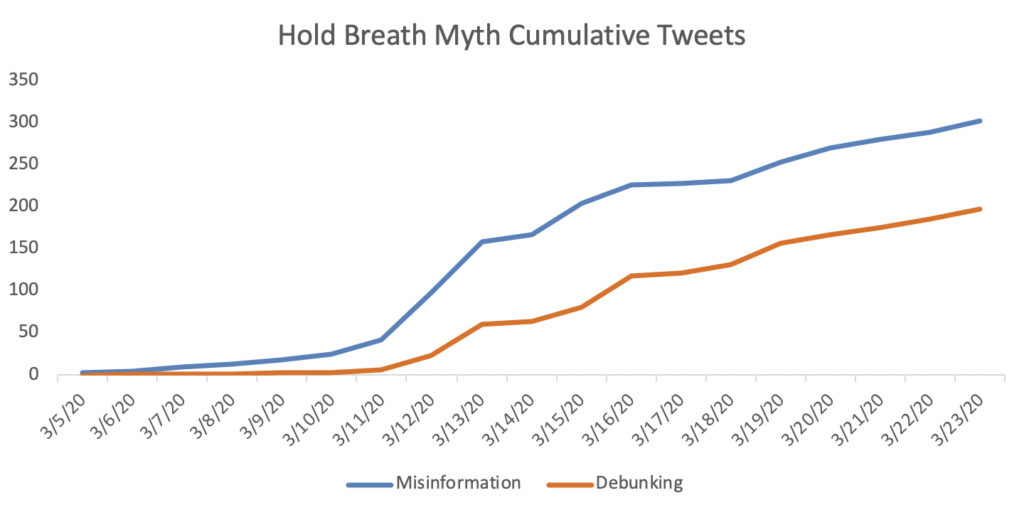

Examining the two myths combined, the results indicate it took seven days for debunking tweets to match the quantity of COVID-19 misinformation tweets. Misinformation for COVID-19 began picking up speed on 3/7/20, while debunking tweets did not match the total frequency of misinformation tweets until seven days later on 3/14/20. After the inflection point, debunking tweets accelerated and reached similar levels to the peak of COVID-19 misinformation tweets. In our sample, peak levels of debunking trailed the misinformation peak by five days. After that point, misinformation and debunking tweets receded to similar levels. A cumulative analysis of misinformation and debunking for the two myths shows an acceleration of total misinformation on 3/11/20 and a gradual rise in debunking thereafter, with an increase in aggregate debunking between 3/13/20 and 3/19/20.

Examining the two myths separately, both cases illustrate a sharp initial ramp of misinformation, followed by debunking responses that trailed the misinformation attempts. Notably, despite our data including similar amounts of misinformation tweets for both the “hold breath” and “gargle” myths, debunking tweets focused greater effort on refuting the gargle myth. The cumulative analysis for the hold breath myth indicates total debunking efforts did not overtake misinformation for the hold breath myth at any point. In comparison, debunking tweets for the gargle myth rose sharply on 3/14/20 and exceeded the cumulative misinformation total for the gargle myth on 3/18/20. Figures two through seven illustrate the relative frequency of misinformation tweets and debunking tweets over time, from both a per day and a cumulative perspective.
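The two views of timing used above (per day and cumulative) can be sketched as follows. This is an illustration only: the daily counts are made-up toy numbers, not the study's data, and the function name `first_catch_up` is hypothetical. The sketch finds the first day on which the debunking series reaches the misinformation series, first for daily counts and then for running totals.

```python
from itertools import accumulate
from typing import Optional, Sequence


def first_catch_up(misinfo: Sequence[int], debunk: Sequence[int]) -> Optional[int]:
    """Return the first day index where debunking is nonzero and at least
    as large as misinformation, or None if that never happens."""
    for day, (m, d) in enumerate(zip(misinfo, debunk)):
        if d > 0 and d >= m:
            return day
    return None


# TOY DATA (hypothetical, for illustration only): tweets per day.
misinfo_per_day = [5, 20, 40, 30, 10, 5]
debunk_per_day = [0, 2, 10, 30, 25, 10]

# Per-day view: when does the daily debunking count match misinformation?
per_day_match = first_catch_up(misinfo_per_day, debunk_per_day)

# Cumulative view: when does total debunking overtake total misinformation?
cumulative_match = first_catch_up(
    list(accumulate(misinfo_per_day)),
    list(accumulate(debunk_per_day)),
)

print("per-day match on day:", per_day_match)
print("cumulative match on day:", cumulative_match)
```

In this toy series the daily counts meet on day 3, yet the cumulative debunking total never catches up. The same kind of gap appears in the hold breath myth, where cumulative debunking did not overtake cumulative misinformation at any point.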

Finding 3: The inclusion of non-specific authority references in tweets was associated with decreased user engagement. Tweets that included non-specific authority references were less likely to receive likes or retweets from other users.

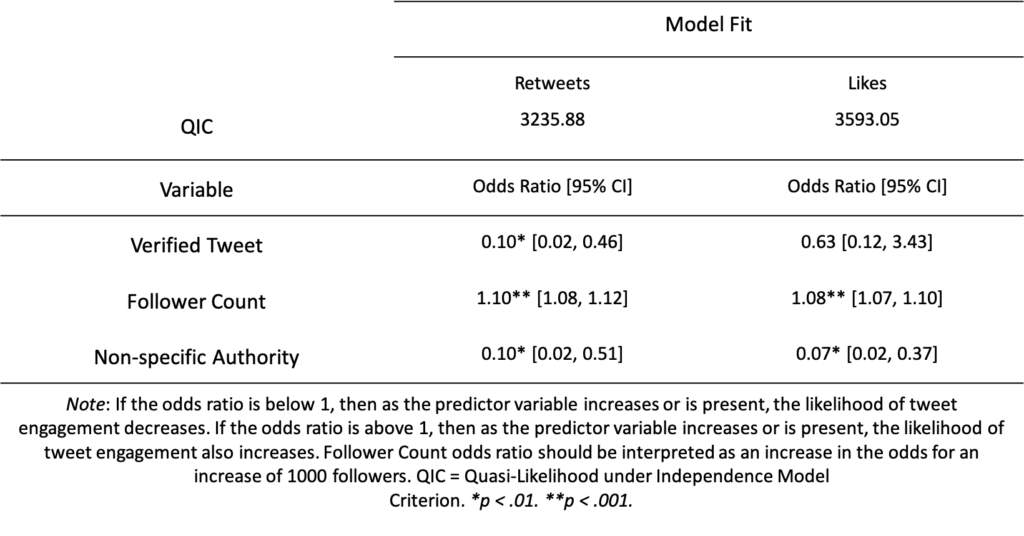

The use of non-specific authority references in COVID-19 tweets was related to decreased tweet engagement. Users were less likely to engage with tweets that included non-specific authority references, indicating that references to ambiguous authority cues may have negative effects on user interaction. This effect was driven by misinformation tweets, as the effect was not present when testing debunking tweets separately. Although non-specific authority references had a negative relationship with tweet engagement, including a specific authority appeal did not increase or decrease the likelihood of engagement. Table 2 provides the model statistics and odds ratios for the likelihood of tweet engagement for the misinformation tweets in the form of retweets and likes.

Methods

Data collection

Tweets related to two pieces of false COVID-19 advice (henceforth referred to as myths) were collected via Twitter’s search API. A total of 1,493 tweets were collected for this study. The two COVID-19 myths were selected among several false claims reported by fact-checking organizations (e.g., Snopes.com, Factcheck.org). The myths were selected because they were the least ambiguous (i.e., all or most of their elements were agreed upon by experts as clearly false) and the myths were findable on Twitter with a keyword-based query. The first myth claimed that gargling a liquid (e.g., warm water, saltwater, vinegar, mouthwash, bleach) would kill the coronavirus when the liquid entered the throat. The second myth claimed that a person could self-diagnose COVID-19 infection by holding their breath for a certain period of time. Typically, the claim was to hold your breath for 10 seconds, but the length of time varied slightly across tweets.

Once the two misinformation claims for the analysis were selected, we created search queries for each myth. To collect the tweets, we used the premium 30-day sandbox (free) search API. This specific API allowed us to collect tweets within the previous 30 days, which permitted reaching back to March 3, 2020. At the time of data collection, we were limited to collecting 7,000 historical tweets. Therefore, we restricted the search parameters to a maximum of 200 tweets per day. The search API returned both retweets and quote tweets, which provided the ability to collect the original tweets shared by users. Retweets were removed from the final sample, but the original tweet was retained. For quote tweets, we preserved both the user-entered text and the text from the original tweet. These criteria resulted in a total of 1,493 collected tweets. Next, we used the Python package Tweet Parser, provided by Twitter, to extract the necessary elements of each tweet.
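The retweet and quote-tweet handling described above might look roughly like the sketch below. It is not the authors' code: it works on plain dictionaries rather than the Tweet Parser package, and it assumes v1.1-style payloads in which a retweet carries the original tweet under `retweeted_status` and a quote tweet carries the quoted tweet under `quoted_status`. The helper names `original_tweets` and `combined_text` are hypothetical.

```python
from typing import Dict, Iterable, List


def original_tweets(payloads: Iterable[dict]) -> List[dict]:
    """Drop retweets but keep one copy of each original tweet."""
    kept: Dict[int, dict] = {}
    for tweet in payloads:
        # ASSUMPTION: a retweet embeds its original under "retweeted_status".
        source = tweet.get("retweeted_status", tweet)
        # Keep the first copy seen for each original tweet id.
        kept.setdefault(source["id"], source)
    return list(kept.values())


def combined_text(tweet: dict) -> str:
    """Join the user-entered text with the quoted tweet's text, if any."""
    parts = [tweet.get("text", "")]
    # ASSUMPTION: a quote tweet embeds the quoted tweet under "quoted_status".
    quoted = tweet.get("quoted_status")
    if quoted:
        parts.append(quoted.get("text", ""))
    return "\n".join(parts)


# Toy payloads (hypothetical, for illustration only).
sample = [
    {"id": 1, "text": "Gargle salt water to stay safe"},
    {"id": 2, "text": "RT: Gargle salt water to stay safe",
     "retweeted_status": {"id": 1, "text": "Gargle salt water to stay safe"}},
    {"id": 3, "text": "This is false",
     "quoted_status": {"id": 4, "text": "Hold your breath for 10 seconds"}},
]

cleaned = original_tweets(sample)
print([t["id"] for t in cleaned])
print(combined_text(cleaned[1]))
```

Keying on the original tweet's id means that an original reached only through its retweets is still retained once, which matches the description of removing retweets while keeping the original tweet.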

Content analysis

After collecting the tweets, we engaged in content analysis. In the first round of coding, each individual tweet was labeled misinformation, debunking, or irrelevant. A misinformation tweet was defined as a message that stated the myth, urged others to adopt the myth’s recommendation, or otherwise made no attempt at disconfirming or denying the myth. If a tweet indicated skepticism of the claim or disbelief of the myth, the tweet was labeled debunking. Debunking tweets were defined as messages designed to correct or refute misinformation. Debunking tweets frequently referenced media articles to refute the claim (e.g., Snopes, Factcheck.org), or otherwise addressed the inaccuracy of the myth (e.g., “Fact check: Holding your breath for 10 seconds is not a coronavirus test”, “Gargling vinegar will not protect you from COVID-19”). Within the label of debunking, for descriptive purposes, we then specified if the debunking tweet represented overt debunking or merely expressed suspicion of the myth. The two types of debunking tweets were combined for the current analysis.

Throughout this analysis, we use the terms authority appeal and authority reference interchangeably. To identify the inclusion of authority appeals in the COVID-19 tweets, we conducted closed coding of each individual tweet, identifying the presence or absence of authority appeals. An authority appeal was defined as referencing an individual or organization that has knowledge about COVID-19. Although some individuals or organizations referenced may not be universally considered an authority on a viral outbreak (e.g., political leaders), we decided to code such references as an authority appeal because the authority referenced may have influence on their supporters. Examples of authorities referenced in the tweets included emergency room doctors, nurses, research centers, empirical studies, news outlets, political leaders, and universities. If a tweet attributed the information to such an authority, we coded the tweet as including an authority appeal.

The manner in which authority appeals were referenced varied across tweets. At times the authority references reflected direct statements, such as, “According to medical experts at Stanford, you can self-diagnose COVID-19 by holding your breath for 10 seconds.” Other tweets included an article link to a specific authority reference. In that case, the news outlet associated with the article acted as the authority appeal (e.g., Snopes, BBC, World Health Organization). We did not consider links to blogs to be an authority appeal. For certain tweets, images were included in the original post, which we also examined. If the tweet included an image of a medical professional or the logo of an organization, we coded the tweet as including an authority appeal.

In addition to identifying the presence or absence of authority appeals in the tweets, we coded each appeal as either a specific authority appeal or a non-specific authority appeal. A specific authority appeal was defined as a message that included a name or a verifiable authority, such as Factcheck.org or the CDC. Non-specific authority appeals included messages that did not identify a specific person or organization, relying instead on ambiguous authority references. Examples of non-specific authority references included “Japanese doctors” and “advice from a nurse friend.”

Finally, we removed any irrelevant tweets from the sample. Irrelevant tweets included terms from the search query but were unrelated to the myth. For example, some tweets described general tips about breathing exercises to boost immunity against COVID-19, but were not claiming that the breathing exercises were a self-diagnosis tool. Tweets asking an open-ended question of whether or not the myth was accurate were marked irrelevant, as they were not clearly misinformation or debunking.

Topics

- COVID-19

- Debunking

- Social Media

Bibliography

Austin, L., Fisher, B., & Jin, Y. (2012). How audiences seek out crisis information: Exploring the social-mediated crisis communication model. Journal of Applied Communication Research, 40(2), 188-207. https://doi.org/10.1080/00909882.2012.654498

Bago, B., Rand, D. G., & Pennycook, G. (2020). Fake news, fast and slow: Deliberation reduces belief in false (but not true) news headlines. Journal of Experimental Psychology: General, 149(8), 1608-1613. https://doi.org/10.1037/xge0000729

Bode, L., & Vraga, E. K. (2018). See something, say something: Correction of global health misinformation on social media. Health Communication, 33(9), 1131-1140. https://doi.org/10.1080/10410236.2017.1331312

Borah, P., & Xiao, X. (2018). The importance of ‘likes’: The interplay of message framing, source, and social endorsement on credibility perceptions of health information on Facebook. Journal of Health Communication, 23(4), 399-411. https://doi.org/10.1080/10810730.2018.1455770

Chan, M. S., Jones, C. R., Jamieson, K. H., & Albarracín, D. (2017). Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychological Science, 28(11), 1531-1546. https://doi.org/10.1177/0956797617714579

Fazio, L. K. (2020). Pausing to consider why a headline is true or false can help reduce the sharing of false news. Harvard Kennedy School (HKS) Misinformation Review, 1(2), 1-8. https://doi.org/10.37016/mr-2020-009

Freelon, D. (2010). ReCal: Intercoder reliability calculation as a web service. International Journal of Internet Science, 5, 20-33.

Frenkel, S., Alba, D. & Zhong, R. (2020, March 8). Surge of virus misinformation stumps Facebook and Twitter. The New York Times. https://www.nytimes.com/2020/03/08/technology/coronavirus-misinformation-social-media.html

Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77-89. https://doi.org/10.1080/19312450709336664

Houston, J. B., Hawthorne, J., Perreault, M. F., Park, E. H., Goldstein Hode, M., Halliwell, M. R., Turner McGowen, S. E., Davis, R., Vaid, S., McElderry, J. A., & Griffith, S. A. (2014). Social media and disasters: A functional framework for social media use in disaster planning, response, and research. Disasters, 39(1), 1-22. https://doi.org/10.1111/disa.12092

Hu, Y., & Sundar, S. S. (2010). Effects of online health sources on credibility and behavioral intentions. Communication Research, 37(1), 105-132. https://doi.org/10.1177/0093650209351512

Hunt, K., Wang, B., & Zhuang, J. (2020). Misinformation debunking and cross-platform information sharing through Twitter during Hurricanes Harvey and Irma: A case study on shelters and ID checks. Natural Hazards, 103, 861-883. https://doi.org/10.1007/s11069-020-04016-6

Jamieson, K. H., & Albarracín, D. (2020). The relation between media consumption and misinformation at the outset of the SARS-CoV-2 pandemic in the US. Harvard Kennedy School (HKS) Misinformation Review, 1(2). https://doi.org/10.37016/mr-2020-012

Krause, N. M., Freiling, I., Beets, B., & Brossard, D. (2020). Fact-checking as risk communication: The multi-layered risk of misinformation in times of COVID-19. Journal of Risk Research. https://doi.org/10.1080/13669877.2020.1756385

Krippendorff, K. (2004). Reliability in content analysis: Some common misconceptions and recommendations. Human Communication Research, 30(3), 411-433. https://doi.org/10.1111/j.1468-2958.2004.tb00738.x

Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(3), 106-131. https://doi.org/10.1177/1529100612451018

Makhortykh, M., Urman, A., & Ulloa, R. (2020). How search engines disseminate information about COVID-19 and why they should do better. Harvard Kennedy School (HKS) Misinformation Review, 1(3), 1-11. https://doi.org/10.37016/mr-2020-017

Nsoesie, E. O., & Oladeji, O. (2020). Identifying patterns to prevent the spread of misinformation during epidemics. Harvard Kennedy School (HKS) Misinformation Review, 1(3), 1-6. https://doi.org/10.37016/mr-2020-014

Pennycook, G., Cannon, T. D., & Rand, D. G. (2018). Prior exposure increases perceived accuracy of fake news. Journal of Experimental Psychology: General, 147(12), 1865-1880. https://doi.org/10.1037/xge0000465

van der Meer, T. G. L. A., & Jin, Y. (2020). Seeking formula for misinformation treatment in public health crises: The effects of corrective information type and source. Health Communication, 35(5), 560-575. https://doi.org/10.1080/10410236.2019.1573295

Volkin, S. (2020, March 27). Social media fuels spread of COVID-19 information-and misinformation. Johns Hopkins University. https://hub.jhu.edu/2020/03/27/mark-dredze-social-media-misinformation/

Wang, B., & Zhuang, J. (2017). Crisis information distribution on Twitter: A content analysis of tweets during Hurricane Sandy. Natural Hazards, 89, 161-181. https://doi.org/10.1007/s11069-017-2960-x

Wang, B., & Zhuang, J. (2018). Rumor response, debunking response, and decision makings of misinformed Twitter users during disasters. Natural Hazards, 93, 1145-1162. https://doi.org/10.1007/s11069-018-3344-6

Funding

The authors report no receipt of funding associated with this research.

Competing Interests

The authors declare no potential conflicts of interests with respect to the research, authorship, or publication of this article.

Ethics

The data for the project were obtained from publicly available sources and thus were exempt from IRB review.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/GSFFFP