Abstract

Effort-related decision-making and reward learning are both dopamine-dependent, but preclinical research suggests they depend on different dopamine signaling dynamics. Therefore, the same dose of a dopaminergic medication could have differential effects on effort for reward vs. reward learning. However, no study has tested how effort and reward learning respond to the same dopaminergic medication within subjects. The current study aimed to test the effect of therapeutic doses of d-amphetamine on effort for reward and reward learning in the same healthy volunteers. Participants (n = 30) completed the Effort Expenditure for Reward Task (EEfRT) measure of effort-related decision-making, and the Probabilistic Reward Task (PRT) measure of reward learning, under placebo and two doses of d-amphetamine (10 mg and 20 mg). Secondarily, we examined whether the individual characteristics of baseline working memory and willingness to exert effort for reward moderated the effects of d-amphetamine. d-Amphetamine increased willingness to exert effort, particularly at low to intermediate expected values of reward. Computational modeling analyses suggested this was due to decreased effort discounting rather than probability discounting or decision consistency. Both baseline effort and working memory emerged as moderators of this effect, such that d-amphetamine increased effort more in individuals with lower working memory and lower baseline effort, also primarily at low to intermediate expected values of reward. In contrast, d-amphetamine had no significant effect on reward learning. These results have implications for treatment of neuropsychiatric disorders, which may be characterized by multiple underlying reward dysfunctions.


Introduction

In order to thrive, organisms must choose which rewards to pursue, and must learn from the results of those choices. Difficulties with these reward-related functions are present in psychiatric disorders including depression, schizophrenia, and addiction [1,2,3]. Although mesocortical dopamine (DA) is a key neurobiological substrate for reward-based decision-making and learning, it remains unclear how these functions are affected by DAergic medications.

Medications that increase DA increase willingness to exert effort for reward [4, 5] and reward learning [6, 7], while DA depletion techniques decrease exertion of effort for reward [8, 9] and impair reward learning [6, 7, 10, 11]. However, preclinical studies suggest that these processes depend on different DA signals. Specifically, local, non-bursting release of DA from nucleus accumbens synapses appears to influence effort for reward, while burst firing of DA neurons encodes reward learning [12, 13]. Moreover, effort may be more closely tied to activity at more sensitive D2 receptors, while learning may depend on less sensitive D1 receptors [14,15,16,17]. This suggests that the same DAergic manipulation could exert differing effects on effort-related decision-making and reward learning.

Consistent with this idea, in mice, overexpression of nucleus accumbens D2 receptors and elevation of extracellular DA via DA transporter knockdown increase willingness to exert effort for reward [18, 19] but not reward learning [18]. While no within-subject comparisons have been conducted in humans to date, similar drugs and doses across studies have affected one function [5, 20] but not the other [21,22,23]. The dose-response curve for DA and reward functioning is believed to follow an inverted-U, such that there is an “optimal” level of DA, with both overly low and overly high levels of DA impairing reward functioning [24, 25]. One study found that a moderate dose of d-amphetamine increased the willingness of rats to exert effort for reward, while a high dose decreased it [26]. This work suggests that different reward-related processes may have different dose-response relationships with DA and different “optimal” levels of DA.

In the current work, we examined the within-subject effects of two doses (10/20 mg) of d-amphetamine on an effort-related decision-making task in which participants choose how much effort to exert for rewards with a given expected value, and on a reward learning task which examines the development of a response bias for more highly rewarded options. We selected d-amphetamine because, although it is not DA-specific, it has been shown to improve reward learning on a probabilistic reward task in rodents [27] and affect effort-based decision-making in humans [4] and rodents [26]. Further, tyrosine depletion studies suggest that although d-amphetamine affects other neurotransmitter systems, its effects on reward functioning seem to be dopamine-mediated [28].

In addition to testing multiple doses of d-amphetamine, we also examined individual differences that may interact with d-amphetamine to affect effort-based decision-making and reward learning. First, based on evidence from rodent studies suggesting that baseline willingness to exert effort moderates the effects of stimulants on effort [29], we examined whether baseline willingness to exert effort moderated the effects of d-amphetamine. Furthermore, enhancing dopamine has been shown to differentially affect reward learning according to working memory capacity [22, 30,31,32]. Therefore, we also evaluated whether working memory moderates the effects of d-amphetamine.

Materials and methods

Participants

Thirty healthy adults were recruited via flyers, internet ads, and a database of participants in previous studies. Eligibility screening consisted of a physical exam, an electrocardiogram, the Structured Clinical Interview for DSM-5 administered by Master’s-level trained counselors, and a drug use history questionnaire. Potential participants were excluded for: 1. Contraindication to amphetamine (high blood pressure, abnormal ECG, pregnancy, or breastfeeding); 2. Conditions requiring regular medication, or regular use of a supplement with hazardous interactions with d-amphetamine (e.g., St. John’s wort); 3. Previous adverse reaction to d-amphetamine; 4. No prior experience with psychoactive substances (this addresses human subjects concerns with administering psychoactive substances to completely substance-naïve participants; psychoactive substances were broadly defined [e.g., alcohol], and prior experience with stimulants or illicit drugs was not required); 5. Current DSM-5 diagnosis other than mild Substance Use Disorder (≤ 3 symptoms); 6. Lifetime history of moderate to severe Substance Use Disorder (≥ 4 symptoms), mania, or psychosis; 7. BMI below 19 or above 29; 8. Less than a high-school education or not fluent in English; 9. Smoking more than 10 cigarettes per week.

Participants were instructed to abstain from using drugs for 48 h prior to each session (confirmed via Reditest urine drug screen for cocaine, amphetamines, methamphetamine, tetrahydrocannabinol, opioids, and benzodiazepines), to avoid consuming alcohol for 24 h prior to each session (confirmed via breath testing), to get adequate sleep, to fast for 9 h prior to sessions, and to maintain their typical consumption of nicotine and caffeine (verified by self-report upon arrival). As menstrual phase can affect responses to d-amphetamine [33], women were scheduled during the follicular phase, with the exception of women on hormonal birth control or with extreme cycle irregularity. The University of Texas Health Science Center at Houston IRB approved this study and all participants gave written informed consent in accordance with the Declaration of Helsinki. See Table 1 for sample characteristics.

Measures

Manipulation checks

We used the Elation sub-scale of the Profile of Mood States (POMS) to assess typical mood effects of the drug [34, 35]. Participants were also administered the Drug Effects Questionnaire (DEQ) [36], which contains an item assessing the extent to which participants felt a drug. Mean arterial pressure (MAP) was used to track cardiovascular effects of the drug.
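The text does not state how MAP was derived from blood pressure readings. A common clinical approximation adds one third of the pulse pressure to the diastolic pressure; the Python sketch below assumes that approximation and is illustrative only (the function name is hypothetical).

```python
def mean_arterial_pressure(systolic_mmhg: float, diastolic_mmhg: float) -> float:
    """Approximate MAP (mmHg) as diastolic pressure + one third of pulse pressure.

    Assumption: the standard one-third pulse-pressure approximation; the
    study text does not specify how MAP was computed.
    """
    if systolic_mmhg < diastolic_mmhg:
        raise ValueError("systolic pressure must be >= diastolic pressure")
    pulse_pressure = systolic_mmhg - diastolic_mmhg
    return diastolic_mmhg + pulse_pressure / 3.0


# Example: a reading of 120/80 mmHg.
print(round(mean_arterial_pressure(120, 80), 1))  # prints 93.3
```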

The effort expenditure for rewards task (EEfRT)

The EEfRT is a measure of effort-based decision-making that has been described thoroughly elsewhere [37]. Briefly, each trial presents the participant with a choice between an “easy” keypress task worth $1.00 and a “hard” keypress task worth a variable amount of reward ($1.24–$4.21). Participants are shown the amount the hard task is worth and the probability of winning (88%, 50%, and 12%) before making each choice. The primary outcome variable was choice of the hard task. Key press speed was measured to control for psychomotor effects of d-amphetamine. Two “win” trials were randomly chosen for payout.

The probabilistic reward task (PRT)

The PRT was selected because it has been widely used in human studies, has translational value, and because prior work in rodents and humans indicates it is sensitive to dopamine manipulations [27]. On each trial of the PRT, participants are presented with a cartoon face and must select the length (short or long) of a feature. The face is presented for 100 ms, making the decision difficult. Multiple versions with different features (mouth vs. nose) were used in counterbalanced order to avoid practice effects. Following some correct responses, participants received a 5-cent monetary reward. Correct identification of one length was rewarded more frequently than correct identification of the other. Healthy adults typically develop a response bias for the more rewarded (“rich”) category. Reward learning was measured via a signal detection approach [38]. The primary outcome was response bias (log b), the propensity to select the more rewarded response:

$$\log b = \frac{1}{2}\log\left(\frac{\text{Rich}_{\text{correct}} \times \text{Lean}_{\text{incorrect}}}{\text{Rich}_{\text{incorrect}} \times \text{Lean}_{\text{correct}}}\right)$$

Discriminability (log d), each participant’s ability to differentiate the two stimuli, was also measured to control for possible perceptual/attentional improvements due to d-amphetamine:

$$\log d = \frac{1}{2}\log\left(\frac{\text{Rich}_{\text{correct}} \times \text{Lean}_{\text{correct}}}{\text{Rich}_{\text{incorrect}} \times \text{Lean}_{\text{incorrect}}}\right)$$

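The signal detection indices can be computed directly from the four response counts per block, using the standard PRT formulas log b = ½ log[(Rich_correct × Lean_incorrect)/(Rich_incorrect × Lean_correct)] and log d = ½ log[(Rich_correct × Lean_correct)/(Rich_incorrect × Lean_incorrect)]. The Python sketch below assumes base-10 logarithms and adds 0.5 to every cell to avoid log(0) or division by zero; both choices are assumptions, and the function name `prt_indices` is hypothetical.

```python
import math


def prt_indices(rich_correct, rich_incorrect, lean_correct, lean_incorrect,
                cell_correction=0.5):
    """Return (log_b, log_d) for one block of PRT trials.

    Assumptions (not stated in the text): base-10 logarithms, and a constant
    added to every cell so a zero count cannot cause log(0) or division by
    zero. Set cell_correction=0 to use the raw counts.
    """
    rc, ri, lc, li = (count + cell_correction for count in
                      (rich_correct, rich_incorrect, lean_correct, lean_incorrect))
    # Response bias: preference for the more frequently rewarded ("rich") response.
    log_b = 0.5 * math.log10((rc * li) / (ri * lc))
    # Discriminability: ability to tell the two stimuli apart, independent of bias.
    log_d = 0.5 * math.log10((rc * lc) / (ri * li))
    return log_b, log_d


# Hypothetical counts for a participant who favors the rich response.
bias, disc = prt_indices(rich_correct=45, rich_incorrect=5,
                         lean_correct=30, lean_incorrect=20)
print(f"log b = {bias:.3f}, log d = {disc:.3f}")
```

A symmetric pattern of errors across the rich and lean stimuli yields log b = 0, so positive values indicate a bias toward the rich response.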
Working memory task

Participants completed a brief validated working memory battery at baseline only that included items measuring operation span, reading span, and symmetry span [39]. The primary outcome was the “partial” working memory score, which counts all correct identifications, even in partially recalled strings.
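The “partial” score can be contrasted with all-or-nothing (“absolute”) scoring, in which only perfectly recalled sets count. A minimal Python sketch, assuming an item counts as correct only when recalled in its original serial position; the function name `span_scores` and the data layout are hypothetical, and the battery’s own scoring software may differ.

```python
def span_scores(trials):
    """Score complex-span trials with partial-credit and absolute methods.

    Each trial is a (presented, recalled) pair of sequences. Assumption: an
    item is correct only when recalled in its original serial position.
    """
    partial = 0   # every correctly placed item, even in imperfect sets
    absolute = 0  # only items from sets recalled perfectly
    for presented, recalled in trials:
        n_correct = sum(
            1 for pos, item in enumerate(presented)
            if pos < len(recalled) and recalled[pos] == item
        )
        partial += n_correct
        if n_correct == len(presented):
            absolute += len(presented)
    return partial, absolute


# Hypothetical letter-recall trials: one perfect set of 3, one partly correct set of 4.
trials = [("FJK", "FJK"), ("PQRS", "PQSR")]
print(span_scores(trials))  # (5, 3)
```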

Procedures

Participants first attended an orientation in which they practiced study tasks and completed the baseline working memory task and a baseline measure of the EEfRT. All subsequent drug study sessions began at 9 am. Following completion of measures of mood, subjective drug effects, and blood pressure, participants were administered the drug or placebo at 9:30 am. While waiting for the drug effect to reach peak, participants were allowed to watch a movie or read a book. Manipulation checks were repeated at 10 am and 11 am. Participants completed study tasks ~1.5 h after drug administration to coincide with peak drug effect. The order of tasks was randomized. Manipulation checks were completed every hour until at least 1 pm, or until effects of the drug returned to baseline, at which point they completed an End of Session Questionnaire and left the lab (~1:30 pm for most participants). Sessions were separated by at least 72 h.

Data analytic plan

A series of mixed-effects models assessed the effects of d-amphetamine on manipulation checks and EEfRT/PRT performance. All analyses were performed in R [40] using the lmer function (lme4) and the lmerTest package, with the Satterthwaite method for degrees of freedom [41, 42]. All continuous variables were mean centered and categorical variables were contrast coded. We established our random-effects models by generating a maximal model and iteratively reducing it per Bates, Kliegl, Vasishth, and Baayen using the RePsychLing package [41, 43, 44]. Follow-up tests of significant main effects or interactions were conducted using the emmeans package [45].
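The Results report “linear” and “quadratic” Drug and Probability terms, which suggests orthogonal polynomial contrasts; the text itself only says “contrast coded,” so this is an assumption. Both three-level factors are equally spaced (0, 10, 20 mg; 12%, 50%, 88%), so equally spaced polynomial codes apply. The Python sketch below reproduces the values R’s `contr.poly(3)` returns and shows mean centering; the `DRUG_CODES` mapping is hypothetical.

```python
import math


def poly_contrasts_3():
    """Normalized linear and quadratic contrasts for three equally spaced levels.

    These match the orthogonal polynomial codes returned by R's contr.poly(3).
    """
    linear = [-1 / math.sqrt(2), 0.0, 1 / math.sqrt(2)]
    quadratic = [1 / math.sqrt(6), -2 / math.sqrt(6), 1 / math.sqrt(6)]
    return linear, quadratic


def mean_center(values):
    """Subtract the sample mean so the intercept sits at the average value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


LINEAR, QUADRATIC = poly_contrasts_3()
# Hypothetical mapping of the three drug conditions to (linear, quadratic) codes.
DRUG_CODES = {
    level: (LINEAR[i], QUADRATIC[i])
    for i, level in enumerate(["placebo", "10 mg", "20 mg"])
}
print(DRUG_CODES["20 mg"])
```

Because the two columns are orthogonal and each sums to zero, the linear term captures a monotonic dose trend while the quadratic term captures curvature (e.g., an effect concentrated at intermediate levels).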

Manipulation checks

Subjective (POMS: Elation and DEQ: Feel Drug) and cardiovascular effects (MAP) were modeled using a linear mixed-effects model (LMM), with fixed effects for Drug (placebo, 10 mg or 20 mg d-amphetamine), Time (pre-capsule, 30 min. post-capsule, 90 min. post-capsule, 180 min. post-capsule, 240 min. post-capsule), and their interaction.

EEfRT

Choices were modeled using a generalized linear mixed-effects model (GLMM) with a logit link function for the binomial (hard/easy) outcome. Fixed effects were Drug (placebo, 10 mg or 20 mg), Probability (12%, 50%, or 88%), Amount ($1.24–$4.21), and their interactions (the Probability × Amount interaction is referred to as the expected value of a reward). We also included fixed effects for Trial Number (0–50) and Session (1, 2, or 3) to account for effects of fatigue and practice, consistent with prior analyses [4, 46]. For the control analyses of psychomotor speed, we first used an LMM to model keypress speed as a function of Drug (placebo, 10 mg or 20 mg) and type of task chosen (Hard vs. Easy). Individual estimates for the linear effect of drug on keypress speed were entered as a between-subjects covariate in the final model.
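Expected value here is the product of win probability and reward amount. The Python sketch below assumes, as in the standard EEfRT, that the displayed probability applies to whichever task is chosen; constant and function names are hypothetical, and the probe amounts are the $1.96 and $3.40 values used later for post-hoc tests.

```python
PROBABILITIES = (0.12, 0.50, 0.88)  # win probabilities shown on EEfRT trials
EASY_REWARD = 1.00                  # the easy task is always worth $1.00
HARD_REWARD_RANGE = (1.24, 4.21)    # range of hard-task amounts


def expected_values(probability, hard_reward):
    """Return (EV of hard task, EV of easy task) for one EEfRT trial.

    Assumption: the displayed win probability applies to whichever task is
    chosen, so each EV is probability x reward amount.
    """
    if probability not in PROBABILITIES:
        raise ValueError(f"unexpected probability: {probability}")
    low, high = HARD_REWARD_RANGE
    if not low <= hard_reward <= high:
        raise ValueError(f"hard reward outside ${low:.2f}-${high:.2f}")
    return probability * hard_reward, probability * EASY_REWARD


# EV of the hard task at the "low" ($1.96) and "high" ($3.40) probe amounts.
for p in PROBABILITIES:
    ev_low, _ = expected_values(p, 1.96)
    ev_high, _ = expected_values(p, 3.40)
    print(f"p = {p:.2f}: EV(hard) = ${ev_low:.2f} at $1.96, ${ev_high:.2f} at $3.40")
```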

Significant effects of d-amphetamine on the EEfRT were followed up by fitting a series of computational models described in Cooper et al. (2019) (see Supplementary Materials for full description). Briefly, the subjective value (SV) model uses the reward (R), effort (E), and probability of each option (P) to estimate the subjective value of each option on each trial:

$$SV = R \cdot P^{h} - kE$$

Free parameter h modifies subjective value according to the probability that the reward will be received and can be interpreted as sensitivity to probability, while free parameter k reduces subjective value based on the amount of effort required, independent of the probability of reward receipt. The fit of the SV model was compared with the fit of a simple model that does not use trial-wise information to guide choice (ΔBIC; see Supplementary Table S1 and Supplementary Figure S1). ΔBIC and best-fitting parameters k and h were examined using repeated-measures ANOVA with d-amphetamine dose as a within-subjects factor. First session (placebo, 10 mg, 20 mg) was included as a between-subjects factor to control for potential order effects. Due to non-normal distributions, free parameters were log-transformed prior to analysis. Greenhouse–Geisser corrected statistics are reported where sphericity was violated. The goal of these analyses was to test whether d-amphetamine affected the degree to which participants used available information to guide choice, or the best-fitting model parameters (i.e., effort discounting, sensitivity to probability).
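To make the subjective value model concrete (SV = R·P^h − kE), the Python sketch below converts the SV difference between options into a choice probability and shows a BIC helper of the kind used for model comparison. It assumes a two-option softmax with inverse temperature β (the Results mention an inverse temperature parameter) and codes effort as 1 for the hard task and 0 for the easy task; both are illustrative assumptions, and the exact specification is given in Cooper et al. (2019) and the Supplementary Materials. All function names are hypothetical.

```python
import math


def subjective_value(reward, probability, effort, k, h):
    """SV = R * P**h - k * E (probability sensitivity h, effort discounting k)."""
    return reward * probability ** h - k * effort


def p_choose_hard(hard_reward, probability, k, h, beta,
                  hard_effort=1.0, easy_reward=1.0, easy_effort=0.0):
    """Softmax (logistic) probability of choosing the hard option.

    Assumptions: two-option softmax with inverse temperature beta, and effort
    coded 1 for the hard task and 0 for the easy task.
    """
    sv_hard = subjective_value(hard_reward, probability, hard_effort, k, h)
    sv_easy = subjective_value(easy_reward, probability, easy_effort, k, h)
    return 1.0 / (1.0 + math.exp(-beta * (sv_hard - sv_easy)))


def choice_log_likelihood(choices, probs_hard):
    """Bernoulli log-likelihood of observed choices (1 = hard, 0 = easy)."""
    return sum(
        math.log(p if chose_hard else 1.0 - p)
        for chose_hard, p in zip(choices, probs_hard)
    )


def bic(log_likelihood, n_params, n_trials):
    """Bayesian information criterion; lower values indicate better fit."""
    return n_params * math.log(n_trials) - 2.0 * log_likelihood


# Larger k (stronger effort discounting) lowers the chance of choosing hard.
for k in (0.5, 1.0, 2.0):
    print(k, round(p_choose_hard(3.00, 0.50, k=k, h=1.0, beta=2.0), 3))
```

Under this sketch, a drug that lowers k shifts choices toward the hard task most where the SV difference is near zero, i.e., at modest expected values, while h governs how steeply low probabilities shrink the reward term.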

Finally, we examined whether baseline EEfRT performance or working memory moderated effects of d-amphetamine on choice by including percent of hard task choices at baseline and composite working memory scores into the final choice model as between-subjects mean-centered continuous fixed effects [47].
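Mean-centering a between-subjects moderator and probing it at −1 SD and +1 SD (as done for interpretation in the Results) can be sketched as below. In a model with a Drug × Moderator term, the drug effect at a given centered moderator value is the drug coefficient plus the interaction coefficient times that value. This Python sketch assumes the sample SD defines the probe points; the data and coefficients are hypothetical.

```python
import statistics


def center_with_probes(values):
    """Mean-center a moderator and return (centered values, -1 SD, +1 SD).

    Assumption: the sample SD (n - 1 denominator) defines the probe points.
    """
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    centered = [v - mean for v in values]
    return centered, -sd, sd


def simple_slope(b_drug, b_drug_x_moderator, moderator_value):
    """Drug effect at a given centered moderator value in a Drug x Moderator model."""
    return b_drug + b_drug_x_moderator * moderator_value


# Hypothetical baseline % hard choices for five participants.
baseline = [30.0, 45.0, 50.0, 55.0, 70.0]
centered, low, high = center_with_probes(baseline)
# Hypothetical coefficients: a negative interaction means larger drug effects at low baseline.
print(simple_slope(0.5, -0.02, low), simple_slope(0.5, -0.02, high))
```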

Two participants were excluded from all analyses involving the EEfRT, leaving twenty-eight participants. One excluded participant chose all hard trials across all sessions, and the other completed only high-value trials in order to bias payout.

PRT

PRT variables (response bias, discriminability, reaction time, and reinforcement schedule) were modeled using LMM. For response bias and discriminability, fixed effects were Drug (placebo, 10 mg or 20 mg), Block (1, 2), and their interaction. For analyses of reaction time and reinforcement schedule, Stimulus (rich, lean) was included. (See Supplementary Material for results). We examined whether baseline EEfRT performance or working memory moderated effects of d-amphetamine on response bias by including percent of hard task choices at baseline and composite working memory scores as between-subjects mean-centered fixed effects in two separate models. One participant was excluded from PRT analyses due to excessive (>20) trials with outlier reaction times.

Relationship between EEfRT, PRT, and Working Memory

We examined the relationship between performance on the EEfRT (% hard choices) and the PRT (response bias) under placebo and drug effects on these tasks via Pearson’s Correlations. Pearson’s correlations were also used to examine associations between drug effects (20mg-PL and 10mg-PL) and baseline measures of EEfRT and working memory.
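The drug-effect correlations rest on per-participant difference scores (e.g., 20 mg minus placebo) entered into Pearson’s r. A dependency-free Python sketch with hypothetical function names; it reports r and the corresponding t statistic (df = n − 2) rather than a p-value, which would require the t distribution (e.g., via `scipy.stats.pearsonr`).

```python
import math


def drug_effect(drug_scores, placebo_scores):
    """Per-participant drug effect as a difference score (e.g., 20 mg - placebo)."""
    if len(drug_scores) != len(placebo_scores):
        raise ValueError("score lists must be paired by participant")
    return [d - p for d, p in zip(drug_scores, placebo_scores)]


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two paired sequences with at least 3 values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether the correlation is zero."""
    return r * math.sqrt((n - 2) / (1.0 - r ** 2))


# Hypothetical % hard choices and response bias under placebo and 20 mg.
eefrt_effect = drug_effect([60, 55, 70, 48], [50, 52, 61, 47])
prt_effect = drug_effect([0.12, 0.08, 0.15, 0.10], [0.10, 0.09, 0.11, 0.10])
r = pearson_r(eefrt_effect, prt_effect)
print(round(r, 3), round(r_to_t(r, len(eefrt_effect)), 3))
```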

Results

Manipulation checks

d-Amphetamine demonstrated typical subjective and cardiovascular effects, peaking at or near the time of the behavioral tasks (see Table S2 and Fig. S3 for full results).

Effort expenditure for rewards task

Effort

As shown in Fig. 1a, d-amphetamine increased choice of the hard task at the 20 mg dose, consistent with our previous results (linear Drug effect, B = 1.90, SE = 0.29, z = 2.24, p = 0.03). However, the effect of the drug was most evident at low to moderate expected values; linear Drug × quadratic Probability × Amount interaction, B = 1.72, SE = 0.50, z = 3.43, p < 0.001, full results in Supplementary Table S3. This likely occurred because near ceiling levels of effort were exerted in all drug conditions when both probability and reward amount were high. Figure 1b shows the effect of drug at each level of probability, graphed at representative points across the range of possible reward amounts. Including the effect of drug on keypress speeds as a covariate did not change these results.

Computational modeling

In a separate set of analyses using our computational modeling approach, we found that model fit (BIC) for the subjective value model was similar across all doses of d-amphetamine, F(2, 50) = 0.192, p = 0.826. Model fit (ΔBIC) also did not show differences across d-amphetamine doses, F(2, 50) = 0.065, p = 0.937, suggesting that d-amphetamine did not affect the degree to which participants systematically incorporated trial-wise reward and probability information when allocating effort. In a repeated-measures ANOVA model that included Parameter (h and k), representing sensitivity to probability and effort, respectively, and d-amphetamine dose, we observed a significant interaction between parameter (h or k) and d-amphetamine dose, F(1.54, 38.60) = 3.841, p = 0.040, such that parameter k (effort aversion) showed differences across levels of amphetamine, F(1.52, 38.09) = 9.265, p = 0.001, while parameter h did not, F(1.46, 36.37) = 1.529, p = 0.230. Moreover, the effort aversion parameter showed a significant linear contrast, F(1,25) = 12.064, p = 0.002, suggesting that amphetamine exhibited a dose-dependent effect on effort discounting, but did not affect discounting based on probability (Fig. 2). We additionally observed a trend-level effect on the inverse temperature parameter such that choices were more consistent under higher doses of amphetamine (see Supplementary Materials).

Moderators

Baseline effort expenditure did not moderate the overall drug effect on choice. However, baseline effort expenditure significantly interacted with drug and probability, as well as with drug and reward amount. d-Amphetamine differentially increased effort in individuals with lower baseline effort at low to moderate probabilities of reward (quadratic Drug × quadratic Probability × Baseline Effort interaction, B = −0.54, SE = 0.26, z = −2.06, p = 0.04). Baseline effort was a continuous measure, but for purposes of interpretation, we graphed and performed post-hoc tests at −1 SD (“Low”) and +1 SD (“High”) levels of baseline effort (Fig. 3a, b). Post-hoc GLMMs revealed that, for low baseline effort, 20 mg of d-amphetamine increased hard task choices at low probabilities of reward (B = 3.11, SE = 1.73, z = 2.03, p = 0.04), and both 10 mg and 20 mg of d-amphetamine increased hard task choices at medium probability (B = 2.59, SE = 0.97, z = 2.20, p = 0.04 and B = 3.22, SE = 1.71, z = 2.54, p = 0.01, respectively). Those with high baseline effort showed no significant effects of d-amphetamine at any probability level. d-Amphetamine also differentially increased effort expenditure for those with low baseline effort at low amounts of reward (linear Drug × Amount × Baseline Effort interaction, B = 0.84, SE = 0.37, z = 2.29, p = 0.02). We tested the effects of drug at representative “low” ($1.96) vs. “high” ($3.40) reward values (Fig. 3c, d). 20 mg of d-amphetamine increased choice of the hard task in individuals with low baseline effort at low reward values (B = 4.43, SE = 0.68, z = 3.12, p = 0.002), but did not increase choice of the hard task in individuals with high baseline effort at either level of reward. Together, these results suggest that the drug had larger effects in individuals with low than with high baseline effort, primarily at the same low to intermediate expected values of reward where the drug had the largest effects overall (although it should be noted that the full Drug × Probability × Amount × Baseline Effort interaction did not reach significance; Supplementary Table S2).

Graphs display one standard deviation below and above the mean baseline effort for visual purposes only. In the analysis, baseline effort was used as a continuous moderator. a % hard task choices by probability in low baseline effort. b % hard task choices by probability in high baseline effort. c % hard task choices by probability and amount in low baseline effort. d % hard choices by probability and amount in high baseline effort.

Baseline working memory did not moderate the overall effect of the drug on willingness to exert effort for reward (linear drug × Baseline Working Memory interaction, B = 0.03, SE = 0.31, z = 0.10, p = 0.91). However, baseline working memory capacity influenced the effect of the drug at low to intermediate values of expected reward (linear Drug × linear Probability × Amount × Baseline Working Memory interaction, B = 1.76, SE = 0.76, z = 2.31, p = 0.02; quadratic Drug × quadratic Probability × Amount × Baseline Working Memory interaction, B = 0.98, SE = 0.47, z = 2.09, p = 0.04). For purposes of interpretation, we graphed and performed post-hoc tests at −1 SD (“Low Working Memory”) and +1 SD (“High Working Memory”) levels of baseline working memory, and at low ($1.96) and high ($3.40) levels of reward (Fig. 4a, b). Both doses of d-amphetamine increased hard task choices in individuals with low working memory across several low to intermediate points on the expected value spectrum, but only increased hard task choices in individuals high in working memory when rewards had low probability and low amounts, or high probability but low amounts. In sum, individuals with lower baseline working memory showed effects of the drug across a greater range of expected values of reward (see Supplementary Table S3).

Probabilistic reward task

Response bias

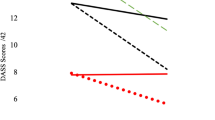

Participants developed a bias for the more highly rewarded stimulus (intercept B = 0.09, SE = 0.01, t = 6.80, p < 0.001). Response bias increased from block 1 to block 2, consistent with a reward learning process (main effect of block, B = 0.04, SE = 0.02, t = 2.61, p = 0.01). There were also significant session (practice) effects, such that response bias increased from session 1 to session 2 and decreased again in session 3 (quadratic effect of session, B = 0.08, SE = 0.03, t = 3.03, p < 0.01). However, d-amphetamine did not affect the overall strength of the response bias and did not change the rate at which the response bias developed (Supplementary Fig. S4 and Table S4).

Moderators

Response bias was not moderated by baseline performance on the EEfRT or overall working memory (Supplementary Table S4).

Relationships between motivation, learning and baseline measures

There was no relationship between hard choices on the EEfRT and response bias on the PRT during the placebo session (r = 0.071, p = 0.726). Nor were the effects of d-amphetamine on the EEfRT and on the PRT significantly correlated at either 10 mg (r = 0.312, p = 0.113) or 20 mg (r = 0.027, p = 0.895), suggesting at least partially separable processes. There was no significant relationship between baseline effort for reward and baseline working memory (r = 0.10, p = 0.61).

Discussion

The current study aimed to establish a dose-response curve of d-amphetamine on two distinct reward functions and test whether baseline reward motivation and working memory capacity moderated this relationship. Therapeutic doses of d-amphetamine increased overall willingness to exert effort for reward in a dose-dependent manner. This effect was particularly evident when reward magnitudes were small and/or probability of reward was low to moderate. However, d-amphetamine did not significantly affect reward learning, nor did baseline measures of reward motivation and working memory capacity moderate the effect of DA enhancement on reward learning.

Our results are congruent with robust evidence demonstrating that DA enhancement increases willingness to exert effort for a reward in rodents [48] and healthy humans [4, 5]. Further, because d-amphetamine increases extracellular DA by inhibiting DA transport, these results extend findings from preclinical research that demonstrates that DA transporter inhibitors increase willingness to exert effort in rodent models of effort-based decision-making [49,50,51,52]. These findings also closely replicate prior work in which the effect of 20 mg of d-amphetamine was particularly evident at low levels of reward probability, while here we saw a more complex interaction indicating that d-amphetamine was particularly effective at low to moderate levels of expected value, which incorporates both probability and amount information. The larger sample collected here may have enabled us to detect this more complex interaction [4, 53].

To further investigate the effect of amphetamine on allocation of effort for rewards, we also used a recently developed computational modeling approach. Our analyses revealed that enhancing DA affected effort discounting and not sensitivity to probability. This suggests that the observed interactions between amphetamine and probability may be driven primarily by expected values in the low to moderate probability levels that are more sensitive to individual differences in the effects of amphetamine on effort discounting, rather than a direct effect of amphetamine on probabilistic discounting. In addition, we found that amphetamine did not alter the strategies that individuals employed when making effort-based decisions. Finally, we note that effort discounting measured by this task only captures an effort/reward tradeoff, and additional work will be needed to distinguish decreased sensitivity to effort costs from increased sensitivity to reward (e.g. Westbrook et al. [53]).

Our findings also indicate that d-amphetamine boosted willingness to exert effort for reward more in individuals with lower baseline reward motivation and lower working memory performance. This effect was evident in the conditions where the effect of d-amphetamine was strongest, namely when the reward amount was low, and/or the probability of the reward was low to moderate. While baseline willingness to exert effort and working memory capacity do not exactly correspond to baseline DA functioning [31, 32, 54,55,56,57,58], these findings are consistent with the inverted-U hypothesis of DA and reward functioning, and suggest these baseline measures may be useful for tailoring DAergic treatments to individuals.

In contrast to our results with effort-related decision-making, we did not find any effect of d-amphetamine on response bias, nor did baseline measures modulate the effect of drug on response bias. This is consistent with the hypothesis that higher levels of DA stimulation might be needed to alter reward learning. Doses that increased effort in rats were in the 0.125 to 0.25 mg/kg range, with 0.5 mg/kg actually decreasing effort [26], while reward learning in rats was increased at a dose of 0.5 mg/kg [27]. This suggests that future research would benefit from investigating the effect of larger doses of d-amphetamine on human reward learning. An alternative explanation might be that drugs that act via autoreceptors have a stronger effect on learning compared to reuptake inhibitors like d-amphetamine. The drug manipulation studies in healthy adults that have found an effect on reward learning mostly administered D2-selective agents, e.g., cabergoline and haloperidol [30, 59,60,61]. It is possible that the broad-spectrum effects of d-amphetamine may be more important when weighing decisions among complex options (e.g., in the EEfRT) by engaging cortical DA signaling, compared to D2 drugs, which may have greater effects in the striatum [30, 62, 63]. Direct comparison of these drugs to d-amphetamine in the same sample would be valuable.

Limitations

The primary limitation of this study is the lack of neurochemical specificity in our DA manipulation and the indirect nature of the relationship between our baseline measures and baseline DA levels. d-Amphetamine also has noradrenergic and serotonergic effects, which have been linked to both reward processing and motivation [64,65,66,67,68]. Studies in rhesus monkeys suggest that norepinephrine is implicated in the valuation of effort costs and choice consistency, rather than willingness to exert effort per se [64, 65]. This is consistent with rodent studies in which administration of norepinephrine transporter inhibitors failed to alter effortful responding [32, 49]. Further, while evidence for a role of serotonin in effort-based decision-making is limited, studies in both humans and rodents suggest a critical role for serotonin in reward learning [67,68,69]. Thus, future studies should consider using more specific pharmacological manipulations of DA in combination with PET to examine the role of baseline DA more directly. Second, it is unclear how different DA signaling dynamics may relate to these results. In addition, we were unable to explore the temporal dynamics of the evaluation of rewards and their associated costs. While our results would indicate that d-amphetamine affects evaluation of effort costs more than the integration of reward feedback into future choices (i.e., reward learning), it is unclear whether d-amphetamine affects decisions at the time of option evaluation or during the choice itself. Studies that combine imaging with paradigms that sequentially measure multiple aspects of reward processing may be particularly productive for investigating the dynamics of DA in human reward motivation and learning.

Conclusions

In summary, the present study establishes that, with d-amphetamine, effort for reward may be more amenable to intervention than reward learning. This study also provides novel evidence linking individual differences in reward motivation and working memory to DA stimulant effects on effort for reward. This is a crucial step in establishing dose-response curves in reward processing and validating human models of the role of DA in reward. Establishing dose-response curves of therapeutic medications and identifying potential individual differences that may predict response is also critical for understanding and evaluating treatments for neuropsychiatric disorders that involve dysfunctional reward processing.

Funding and disclosure

The authors note that this work was funded in part by the National Institute of Mental Health R00MH102355 and R01MH108605 to MTT and F32MH115692 to JAC. It was also supported in part by the National Institute on Drug Abuse K08DA040006 to MCW and F32DA048542 to HES. Finally, this project was also supported in part by a fellowship from “la Caixa” Foundation (ID 100010434) LCF/BQ/DI19/11730047 to PLG. In the past 3 years, MTT has served as a paid consultant to Avanir Pharmaceuticals and BlackThorn Therapeutics. MTT is a co-inventor of the EEfRT, which was used in this study. Emory University and Vanderbilt University licensed this software to BlackThorn Therapeutics. Under the IP Policies of both universities, MTT receives licensing fees and royalties from BlackThorn Therapeutics. In addition, MTT has a paid consulting relationship with BlackThorn. The terms of these arrangements have been reviewed and approved by Emory University in accordance with its conflict of interest policies, and no funding from these entities supported the current project. The authors declare no competing interests.

References

Treadway MT, Zald DH. Reconsidering anhedonia in depression: Lessons from translational neuroscience. Neurosci Biobehav Rev. 2011;35:537–555.

Davis KL, Kahn RS, Ko G, Davidson M. Dopamine in schizophrenia: a review and reconceptualization. Am J Psychiatry. 1991;148:1474–1486.

Volkow ND, Fowler JS, Wang GJ, Swanson JM, Telang F. Dopamine in drug abuse and addiction: results of imaging studies and treatment implications. Arch Neurol. 2007;64:1575–1579.

Wardle MC, Treadway MT, Mayo LM, Zald DH, de Wit H. Amping up effort: effects of d-amphetamine on human effort-based decision-making. J Neurosci. 2011;31:16597–16602.

Zénon A, Devesse S, Olivier E. Dopamine manipulation affects response vigor independently of opportunity cost. J Neurosci. 2016;36:9516–9525.

Pleger B, Ruff CC, Blankenburg F, Klöppel S, Driver J, Dolan RJ. Influence of dopaminergically mediated reward on somatosensory decision-making. PLoS Biol. 2009;7:e1000164.

Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045.

Cawley E, Park S, aan het Rot M, Sancton K, Benkelfat C, Young S, et al. Dopamine and light: dissecting effects on mood and motivational states in women with subsyndromal seasonal affective disorder. J Psychiatry Neurosci. 2013;38:388–397.

Venugopalan VV, Casey KF, O’Hara C, O’Loughlin J, Benkelfat C, Fellows LK, et al. Acute phenylalanine/tyrosine depletion reduces motivation to smoke cigarettes across stages of addiction. Neuropsychopharmacology. 2011;36:2469–2476.

Zirnheld PJ, Carroll CA, Kieffaber PD, O’Donnell BF, Shekhar A, Hetrick WP. Haloperidol impairs learning and error-related negativity in humans. J Cogn Neurosci. 2004;16:1098–1112.

Janssen LK, Sescousse G, Hashemi MM, Timmer MHM, Ter Huurne NP, Geurts DEM, et al. Abnormal modulation of reward versus punishment learning by a dopamine D2-receptor antagonist in pathological gamblers. Psychopharmacol (Berl). 2015;232:3345–3353.

Berke JD. What does dopamine mean? Nat Neurosci. 2018;21:787–793.

Mohebi A, Pettibone JR, Hamid AA, Wong JMT, Vinson LT, Patriarchi T, et al. Dissociable dopamine dynamics for learning and motivation. Nature. 2019;570:65–70.

Grace AA. Phasic versus tonic dopamine release and the modulation of dopamine system responsivity: a hypothesis for the etiology of schizophrenia. Neuroscience. 1991;41:1–24.

Grace AA. The tonic/phasic model of dopamine system regulation and its implications for understanding alcohol and psychostimulant craving. Addiction. 2000;95:119–128.

Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599.

Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacol (Berl). 2007;191:507–520.

Cagniard B, Balsam PD, Brunner D, Zhuang X. Mice with chronically elevated dopamine exhibit enhanced motivation, but not learning, for a food reward. Neuropsychopharmacology. 2006;31:1362–1370.

Trifilieff P, Feng B, Urizar E, Winiger V, Ward RD, Taylor KM, et al. Increasing dopamine D2 receptor expression in the adult nucleus accumbens enhances motivation. Mol Psychiatry. 2013;18:1025–1033.

Volkow ND, Wang G-J, Fowler JS, Telang F, Maynard L, Logan J, et al. Evidence that methylphenidate enhances the saliency of a mathematical task by increasing dopamine in the human brain. Am J Psychiatry. 2004;161:1173–1180.

Vo A, Seergobin KN, Morrow SA, MacDonald PA. Levodopa impairs probabilistic reversal learning in healthy young adults. Psychopharmacol (Berl). 2016;233:2753–2763.

van der Schaaf ME, Fallon SJ, Ter Huurne N, Buitelaar J, Cools R. Working memory capacity predicts effects of methylphenidate on reversal learning. Neuropsychopharmacology. 2013;38:2011–2018.

Swart JC, Froböse MI, Cook JL, Geurts DE, Frank MJ, Cools R, et al. Catecholaminergic challenge uncovers distinct Pavlovian and instrumental mechanisms of motivated (in)action. Elife. 2017;6:e22169.

Cools R, D’Esposito M. Inverted-U-shaped dopamine actions on human working memory and cognitive control. Biol Psychiatry. 2011;69:e113–e125.

Vaillancourt DE, Schonfeld D, Kwak Y, Bohnen NI, Seidler R. Dopamine overdose hypothesis: evidence and clinical implications. Mov Disord. 2013;28:1920–1929.

Floresco SB, Tse MTL, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008;33:1966–1979.

Der-Avakian A, D’Souza MS, Pizzagalli DA, Markou A. Assessment of reward responsiveness in the response bias probabilistic reward task in rats: implications for cross-species translational research. Transl Psychiatry. 2013;3:e297.

Leyton M, aan het Rot M, Booij L, Baker GB, Young SN, Benkelfat C. Mood-elevating effects of d-amphetamine and incentive salience: the effect of acute dopamine precursor depletion. J Psychiatry Neurosci. 2007;32:129–136.

Cocker PJ, Hosking JG, Benoit J, Winstanley CA. Sensitivity to cognitive effort mediates psychostimulant effects on a novel rodent cost/benefit decision-making task. Neuropsychopharmacology. 2012;37:1825–1837.

Frank MJ, O’Reilly RC. A mechanistic account of striatal dopamine function in human cognition: psychopharmacological studies with cabergoline and haloperidol. Behav Neurosci. 2006;120:497–517.

Cools R, Gibbs SE, Miyakawa A, Jagust W, D’Esposito M. Working memory capacity predicts dopamine synthesis capacity in the human striatum. J Neurosci. 2008;28:1208–1212.

Hosking JG, Floresco SB, Winstanley CA. Dopamine antagonism decreases willingness to expend physical, but not cognitive, effort: a comparison of two rodent cost/benefit decision-making tasks. Neuropsychopharmacology. 2015;40:1005–1015.

White TL, Justice AJH, de Wit H. Differential subjective effects of d-amphetamine by gender, hormone levels and menstrual cycle phase. Pharm Biochem Behav. 2002;73:729–741.

Johanson CE, Uhlenhuth EH. Drug preference and mood in humans: d-amphetamine. Psychopharmacol (Berl). 1980;71:275–279.

de Wit H, Griffiths RR. Testing the abuse liability of anxiolytic and hypnotic drugs in humans. Drug Alcohol Depend. 1991;28:83–111.

Fischman MW, Foltin RW. Utility of subjective‐effects measurements in assessing abuse liability of drugs in humans. Br J Addict. 1991;86:1563–1570.

Treadway MT, Buckholtz JW, Schwartzman AN, Lambert WE, Zald DH. Worth the ‘EEfRT’? The effort expenditure for rewards task as an objective measure of motivation and anhedonia. PLoS One. 2009;4:e6598.

Pizzagalli DA, Jahn AL, O’Shea JP. Toward an objective characterization of an anhedonic phenotype: a signal-detection approach. Biol Psychiatry. 2005;57:319–327.

Oswald FL, McAbee ST, Redick TS, Hambrick DZ. The development of a short domain-general measure of working memory capacity. Behav Res Methods. 2014;47:1343–1355.

R Core Team. R: A language and environment for statistical computing. 2019.

Bates D, Mächler M, Bolker BM, Walker SC. Fitting linear mixed-effects models using lme4. J Stat Softw. 2015;67:1–48.

Kuznetsova A, Brockhoff PB, Christensen RHB. lmerTest package: tests in linear mixed effects models. J Stat Softw. 2017;82:1–26.

Matuschek H, Kliegl R, Vasishth S, Baayen H, Bates D. Balancing Type I error and power in linear mixed models. J Mem Lang. 2017;94:305–315.

Baayen H, Bates D, Kliegl R, Vasishth S. RePsychLing: data sets from psychology and linguistics experiments. R Packag Version 004. 2015.

Lenth R. emmeans: Estimated marginal means, aka least-squares means. R Packag Version 14. 2019:3.

Wardle MC, Treadway MT, de Wit H. Caffeine increases psychomotor performance on the effort expenditure for rewards task. Pharm Biochem Behav. 2012;102:526–531.

Cohen J, Cohen P, West SG, Aiken LS. Applied multiple regression/correlation analysis for the behavioral sciences. 3rd ed. Mahwah: Lawrence Erlbaum Associates; 2003.

Salamone JD, Correa M, Nunes EJ, Randall PA, Pardo M. The behavioral pharmacology of effort-related choice behavior: dopamine, adenosine and beyond. J Exp Anal Behav. 2012;97:125–146.

Yohn SE, Errante EE, Rosenbloom-Snow A, Somerville M, Rowland M, Tokarski K, et al. Blockade of uptake for dopamine, but not norepinephrine or 5-HT, increases selection of high effort instrumental activity: Implications for treatment of effort-related motivational symptoms in psychopathology. Neuropharmacology. 2016;109:270–280.

Yohn SE, Gogoj A, Haque A, Lopez-Cruz L, Haley A, Huxley P, et al. Evaluation of the effort-related motivational effects of the novel dopamine uptake inhibitor PRX-14040. Pharm Biochem Behav. 2016;148:84–91.

Yohn SE, Lopez-Cruz L, Hutson PH, Correa M, Salamone JD. Effects of lisdexamfetamine and s-citalopram, alone and in combination, on effort-related choice behavior in the rat. Psychopharmacol (Berl). 2016;233:949–960.

Rotolo RA, Dragacevic V, Kalaba P, Urban E, Zehl M, Roller A, et al. The novel atypical dopamine uptake inhibitor (S)-CE-123 partially reverses the effort-related effects of the dopamine depleting agent tetrabenazine and increases progressive ratio responding. Front Pharmacol. 2019;10:682.

Westbrook A, van den Bosch R, Määttä JI, Hofmans L, Papadopetraki D, Cools R, et al. Dopamine promotes cognitive effort by biasing the benefits versus costs of cognitive work. Science. 2020;367:1362–1366.

Cools R, D’Esposito M. Inverted-U-shaped dopamine actions on human working memory and cognitive control. Biol Psychiatry. 2011;69:e113–e125. https://doi.org/10.1016/j.biopsych.2011.03.028.

Vijayraghavan S, Wang M, Birnbaum SG, Williams GV, Arnsten AFT. Inverted-U dopamine D1 receptor actions on prefrontal neurons engaged in working memory. Nat Neurosci. 2007;10:376–384.

Williams GV, Goldman-Rakic PS. Modulation of memory fields by dopamine D1 receptors in prefrontal cortex. Nature. 1995;376:572–575.

Bäckman L, Nyberg L, Soveri A, Johansson J, Andersson M, Dahlin E, et al. Effects of working-memory training on striatal dopamine release. Science. 2011;333:718.

Treadway MT, Buckholtz JW, Cowan RL, Woodward ND, Li R, Ansari MS, et al. Dopaminergic mechanisms of individual differences in human effort-based decision-making. J Neurosci. 2012;32:6170–6176.

Cools R, Frank MJ, Gibbs SE, Miyakawa A, Jagust W, D’Esposito M. Striatal dopamine predicts outcome-specific reversal learning and its sensitivity to dopaminergic drug administration. J Neurosci. 2009;29:1538–1543.

Cohen MX, Krohn-Grimberghe A, Elger CE, Weber B. Dopamine gene predicts the brain’s response to dopaminergic drug. Eur J Neurosci. 2007;26:3652–3660.

Mueller EM, Burgdorf C, Chavanon M-L, Schweiger D, Wacker J, Stemmler G. Dopamine modulates frontomedial failure processing of agentic introverts versus extraverts in incentive contexts. Cogn Affect Behav Neurosci. 2014;14:756–768.

Camps M, Kelly PH, Palacios JM. Autoradiographic localization of dopamine D1 and D2 receptors in the brain of several mammalian species. J Neural Transm. 1990;80:105–127.

Honey GD, Suckling J, Zelaya F, Long C, Routledge C, Jackson S, et al. Dopaminergic drug effects on physiological connectivity in a human cortico-striato-thalamic system. Brain. 2003;126:1767–1781.

Bouret S, Ravel S, Richmond BJ. Complementary neural correlates of motivation in dopaminergic and noradrenergic neurons of monkeys. Front Behav Neurosci. 2012;6:40.

Jahn CI, Gilardeau S, Varazzani C, Blain B, Sallet J, Walton ME, et al. Dual contributions of noradrenaline to behavioural flexibility and motivation. Psychopharmacol (Berl). 2018;235:2687–2702.

Seymour B, Daw ND, Roiser JP, Dayan P, Dolan R. Serotonin selectively modulates reward value in human decision-making. J Neurosci. 2012;32:5833–5842.

Izquierdo A, Carlos K, Ostrander S, Rodriguez D, McCall-Craddolph A, Yagnik G, et al. Impaired reward learning and intact motivation after serotonin depletion in rats. Behav Brain Res. 2012;233:494–499.

Chamberlain SR, Müller U, Blackwell AD, Clark L, Robbins TW, Sahakian BJ. Neurochemical modulation of response inhibition and probabilistic learning in humans. Science. 2006;311:861–863.

Morean ME, de Wit H, King AC, Sofuoglu M, Rueger SY, O’Malley SS. The drug effects questionnaire: psychometric support across three drug types. Psychopharmacol (Berl). 2013;227:177–192.

Acknowledgements

The authors would like to thank Brittney Chapa and Onyee Ibekwe for their help in collecting the data.

Author information

Contributions

MCW and MTT designed the study. HES, PLG, and SDL collected the data. HES, JAC, VML, PLG, MTT, and MCW contributed to data analysis and interpretation. HES, JAC, PLG, JKH, CN, SDL, MTT, and MCW drafted the manuscript. All authors revised and provided final approval of the manuscript.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Soder, H.E., Cooper, J.A., Lopez-Gamundi, P. et al. Dose-response effects of d-amphetamine on effort-based decision-making and reinforcement learning. Neuropsychopharmacol. 46, 1078–1085 (2021). https://doi.org/10.1038/s41386-020-0779-8

DOI: https://doi.org/10.1038/s41386-020-0779-8
