Abstract

When it comes to monitoring huge structures, the main issues are limited time, high costs and the handling of large amounts of data. To reduce time and costs and to manage the data, methods from the field of optimal design of experiments are useful and supportive. Having optimal experimental designs at hand before conducting any measurements leads to a highly informative measurement concept, in which the sensor positions are optimized to minimize errors in the structure’s models. To reduce computational time, a combined approach using the Fisher Information Matrix and the mean-squared error in a two-step procedure is proposed, taking different error types into account. The error descriptions contain random/aleatoric and systematic/epistemic portions. Applying this combined approach to a finite element model, using artificial acceleration time histories with artificially added errors, yields the optimized sensor positions. These findings are compared to results from laboratory experiments on the modeled structure, a tower-like structure represented by a hollow pipe acting as a cantilever beam. In conclusion, the combined approach leads to a sound experimental design that yields a good estimate of the structure’s behavior and model parameters without the need for preliminary measurements for model updating.


1 Introduction

In the assessment of civil engineering structures, methods for the design of optimal experiments (DoE) are increasingly coming to the fore. Especially for tall structures, equipping the structure with the measurement devices and the associated cables and controllers often takes considerable effort. This makes it essential to develop a proper design for the measurement setup.

In other fields, such as biological or chemical engineering, many approaches to DoE are well known and applied [3, 12,13,14]. In civil engineering, measurement concepts nowadays arise mainly from the engineer’s experience and the available knowledge about the structure. Usually, the sensors are distributed equally when no further information about the structure is available [5, 6, 37]. Others make use of modal information, such as natural frequencies and mode shapes, to obtain the optimal sensor placement [18, 28]. To improve this practice, it is useful to apply methods of DoE in order to place sensors at significant positions and possibly to reduce the number of sensors, which in turn reduces the costs of the measurements [18, 36].

In general, experiments on civil structures can be divided into two cases. On the one hand, field tests are conducted on existing structures in their environment; these tests are often referred to as short- or long-term monitoring. The surrounding conditions cannot be controlled, only monitored or evaluated, e.g. by specimen tests of the soil or by wind measurements. On the other hand, specimens, small structural parts or down-scaled full structures can be observed under controlled conditions in the laboratory.

Inherent to both cases are measurement errors, which can be random (aleatoric) and/or systematic (epistemic). For a proper DoE, it is important to consider the measurement errors and noise in order to obtain the optimal sensor setup. Comprehensive overviews of measurement errors, their determination and the principles of their calculation are given in [8, 15, 31].

Concerning the optimal design of experiments, many methodologies have been developed; starting from full-factorial designs, many more followed [1, 9, 10, 19, 26]. For instance, the inverse of the Fisher Information Matrix (FIM) serves as an estimate of (a lower bound on) the covariance matrix. By applying an optimality criterion from the literature to it, optimal designs can be found, e.g. [2, 33] and [35]. Further on, [3, 12, 14, 24] use an approach in which the mean-squared error (MSE) between an estimate and the true function is calculated to obtain the optimal design. Comparisons between different optimality criteria for the FIM and for the MSE can be found in the literature, e.g. [3, 4, 16]. Comparing both methods, the FIM approach can only account for random errors, where the noise is typically modeled as normally distributed with zero mean and constant standard deviation. The MSE, on the contrary, can cope with both random and systematic errors. It minimizes the difference between the noisy and the exact data. Different error descriptions for both approaches have been investigated in [27].

Another criterion proposed in the literature for DoE is the information entropy, a measure of the uncertainty in the estimate of the model parameters [22, 23]. Many other approaches are available, e.g. optimizing the sensor placement with a two-step procedure for better predictions in dynamic response reconstruction [34], or an iterative procedure in which the Modal Assurance Criterion (MAC) value serves as the optimality criterion [7].

In this paper, different error descriptions are applied to one example in order to work out similarities, differences and the robustness of the different DoE methods. A novel DoE approach that combines the Fisher Information Matrix (FIM) and the mean-squared error (MSE) is presented and compared to existing ones. The DoE is performed on a tower-like structure, for which a PVC pipe serves as the test object. To identify the optimal experimental setup before conducting the experiment itself, artificially created measurement data from a numerical model are used. Additionally, experiments on the pipe are conducted under laboratory conditions in order to verify the optimal designs.

In Sect. 2, the methods used for the error description and the DoE are presented. Sect. 3 introduces the application example, both as a laboratory experiment and as a numerical simulation. The results of the investigations are shown and compared in Sect. 4 and discussed in Sect. 5, and Sect. 6 concludes the findings.

2 Error description and design of experiments

2.1 Error description for the synthetic data

In order to simulate experimental measurement data, an error description has to be introduced into the artificially generated data, since experimentally obtained data are always prone to measurement uncertainties. Within the scope of this paper, both error types mentioned in Sect. 1 are considered; every error considered belongs to one of these two types, and different error levels are used for each of them later on. Generally, the total measurement error \(\delta\) consists of two parts:

\[ \delta _i^{(j)} = \delta _{\mathrm {sys}, i}^{(j)} + \delta _{\mathrm {rand}, i}^{(j)}, \tag{1} \]

where \(\delta _{\mathrm {sys}, i}^{(j)}\) is the systematic (epistemic or bias) error and \(\delta _{\mathrm {rand}, i}^{(j)}\) represents the random (aleatoric) error for each timestep i, from one to the maximal number of timesteps \(n^\mathrm {t}\), and for each measurement location j, from the first to the last one \(n^\mathrm {sens}\) [8].

Once the total error \(\delta\) is added to the true data v, the fictitious measurement data u

\[ u(y, t) = v(y, t) + \delta (y, t) \tag{2} \]

are generated for use in the numerical investigations. In Eq. 2, y represents the spatial and t the temporal domain.

In this study, the random error \(\varepsilon _i^{(j)}\) is defined relative to the minimal amplitude

for every data set from all measurement locations. Furthermore, the random error follows a normal distribution \(\varepsilon _i^{(j)} \sim N(0,\sigma ^2)\) with zero mean and variance \(\sigma ^2\). This leads to the noise-corrupted data as in

The systematic error is assumed to be relative to the exact data, and the fictitious data can be derived from

where \(\beta _i^{(j)}\) denotes the factor of the relative error. The two error descriptions above are combined as follows:

An exemplary visualization of the error descriptions resulting from Eqs. 4 and 6 is shown in Fig. 1.
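Because Eqs. 3 to 6 are not reproduced in this text, the following Python sketch generates the three kinds of fictitious data under stated assumptions: the random error is scaled by the smallest peak amplitude over all measurement locations, and the systematic error is a relative factor \(\beta\), i.e. \(u = (1+\beta)v + \varepsilon \, a_\mathrm{min}\). The function name `corrupt` and this exact form are illustrative assumptions, not the paper’s equations.

```python
import numpy as np


def corrupt(v, sigma, beta, rng=None):
    """Return (u_rand, u_sys, u_comb) for exact data v of shape (n_sens, n_t).

    Assumed error model (illustrative, not the paper's Eqs. 3-6):
      u_rand = v + eps * a_min,  eps ~ N(0, sigma^2)
      u_sys  = (1 + beta) * v
      u_comb = (1 + beta) * v + eps * a_min
    """
    rng = np.random.default_rng() if rng is None else rng
    v = np.asarray(v, dtype=float)
    # Assumption: reference amplitude = smallest peak |v| among all sensors.
    a_min = np.min(np.max(np.abs(v), axis=1))
    eps = rng.normal(0.0, sigma, size=v.shape)          # aleatoric (random) part
    u_rand = v + eps * a_min
    u_sys = (1.0 + np.asarray(beta, dtype=float)) * v   # epistemic (systematic) part
    u_comb = u_sys + eps * a_min
    return u_rand, u_sys, u_comb


# Usage with a toy two-sensor signal (not measured data).
t = np.linspace(0.0, 1.0, 500)
v = np.vstack([np.sin(2 * np.pi * 5 * t), 0.5 * np.sin(2 * np.pi * 5 * t)])
u_rand, u_sys, u_comb = corrupt(v, sigma=0.05, beta=0.02, rng=np.random.default_rng(0))
```

Passing the same generator to repeated calls gives independent noise realizations, which is what the repeated fits of the MSE approach require.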

Exemplary visualization of the true solution v of acceleration time history (bold dashed line) and of acceleration data corrupted by errors: random error \(u^\mathrm {rand}\) (gray line) and random + systematic error \((u^\mathrm {sys} + u^\mathrm {rand})\) (dash with dotted line) (color figure online)

2.2 Evaluation of experimental data

The experimental data are used to obtain the optimal sensor setup with respect to the observed eigenfrequencies. For this purpose, the eigenfrequencies \(f_b^\mathrm {all}\) obtained from the setup using all positions are compared with the eigenfrequencies \(f_{c}^{(s)}\) obtained from sensor setups using s positions. The indices b and c denote the number of the eigenfrequency.

The Euclidean norm of the difference between the first measured eigenfrequencies serves as a quality measure for each sensor setup. Since not every setup necessarily identifies every eigenfrequency, a preselection is needed. Therefore, a case distinction according to the number of missing eigenfrequencies \(n_\mathrm {miss.f}\) among these first eigenfrequencies follows as

where \(f_b^\mathrm {all}\) and \(f_{c}^{(s)}\) belong to the same mode shape but not necessarily to the same eigenfrequency number: some expected eigenfrequencies might not have been detected by a given setup, so the frequencies are not ranked in the same position. Accordingly, the sensor setups can be ordered, and the number of missing frequencies among the first six eigenfrequencies can easily be read off the value of the experimental optimality criterion \({\mathcal {J}}_\mathrm {exp}\), because its integer part corresponds to \(n_\mathrm {miss.f}\).
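Eq. 7 itself is not reproduced here. The Python sketch below shows one way to build a criterion with the stated property that its integer part equals \(n_\mathrm {miss.f}\): count the missing modes among the first six and add the relative Euclidean norm of the frequency differences of the matched modes, assuming that relative norm stays below one. Matching by mode label mirrors the requirement that \(f_b^\mathrm {all}\) and \(f_{c}^{(s)}\) belong to the same mode shape. The function name, the mode labels and the frequencies are hypothetical.

```python
import numpy as np


def j_exp(f_all, f_setup, n_first=6):
    """Hypothetical criterion in the spirit of Eq. 7.

    f_all   : dict, mode label -> eigenfrequency identified with all sensors
    f_setup : dict, mode label -> eigenfrequency identified with the reduced setup;
              undetected modes are simply absent
    """
    modes = sorted(f_all, key=f_all.get)[:n_first]   # first n_first modes by frequency
    matched = [m for m in modes if m in f_setup]
    n_miss = len(modes) - len(matched)               # integer part of the criterion
    if not matched:
        return float(n_miss)
    ref = np.array([f_all[m] for m in matched])
    est = np.array([f_setup[m] for m in matched])
    # Assumption: the relative norm is below 1, so int(J) == n_miss.
    return n_miss + float(np.linalg.norm(est - ref) / np.linalg.norm(ref))


# Hypothetical mode labels and frequencies in Hz (illustrative only).
f_all = {"1x": 10.0, "1z": 10.5, "2x": 60.0, "2z": 62.0, "3x": 170.0, "3z": 175.0}
f_setup = {"1x": 10.1, "2x": 60.0, "2z": 61.5, "3x": 171.0, "3z": 175.0}
J = j_exp(f_all, f_setup)     # mode "1z" is missing, so int(J) == 1
```

Sorting the setups by this value groups them first by the number of missing modes and then by the frequency deviation, which matches the ordering described in the text.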

2.3 Design of experiments on numerical model

2.3.1 DoE using Fisher Information Matrix

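One way to find the optimal sensor positions is to use a modified approach based on the FIM \({\mathbf {M}}\). According to the Cramér-Rao inequality, the inverse of the FIM provides a lower bound of the covariance matrix \({\mathbf {Cov}}\) of an unbiased estimator [33]:

\[ {\mathbf {Cov}}({\varvec {\hat{\theta }}}) \succeq {\mathbf {M}}^{-1}. \tag{8} \]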

When a numerical model representing the structure is used, the response functions with added noise are regarded as the experimental recordings. If the noise is normally distributed as described in Sect. 2.1 and the errors are spatially uncorrelated, the FIM becomes

where \({\mathbf {v}}\) are the measurement time histories and \({\varvec {\hat{\theta }}}\) are the estimated model parameters of interest.

As stated before, the FIM can only take random errors into account. If systematic errors are large, the FIM approach neglects them and might lead to experimental designs that are not fully optimal. As known from the literature, e.g. [2], the optimal design can be found by evaluating the FIM with an optimality criterion [32]. For further use, the D-optimality criterion

\[ {\mathcal {J}}_\mathrm {FIM} = \det \left( {\mathbf {M}}^{-1}\right) \tag{10} \]

is chosen because of its relative simplicity; its main advantage is its independence of the scaling of the parameters, e.g. the use of different units [11]. To decrease the variance of the estimator, the determinant of \({\mathbf {M^{-1}}}\) needs to be minimized.
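The FIM equation is not reproduced here. For additive, normally distributed noise with constant standard deviation \(\sigma\), uncorrelated across sensors and timesteps, a standard form is \({\mathbf {M}} = \sigma ^{-2}\sum _j\sum _i (\partial v_i^{(j)}/\partial {\varvec{\theta }})(\partial v_i^{(j)}/\partial {\varvec{\theta }})^\intercal\). The Python sketch below assumes this form, obtains the sensitivities by central finite differences of a user-supplied forward model, and evaluates the D-optimality value \(\det ({\mathbf {M}}^{-1})\) for every sensor combination. The toy forward model and all function names are hypothetical stand-ins for the FE model.

```python
import itertools

import numpy as np


def sensitivities(forward, theta, rel_step=1e-6):
    """Central finite differences dv/dtheta, shape (n_par, n_sens, n_t)."""
    theta = np.asarray(theta, dtype=float)
    cols = []
    for p in range(theta.size):
        h = rel_step * max(abs(theta[p]), 1.0)
        tp, tm = theta.copy(), theta.copy()
        tp[p] += h
        tm[p] -= h
        cols.append((forward(tp) - forward(tm)) / (2.0 * h))
    return np.stack(cols)


def j_fim(S, setup, sigma):
    """D-optimality det(M^-1) of the FIM for the sensors in `setup`."""
    Ss = S[:, list(setup), :].reshape(S.shape[0], -1)   # (n_par, n_sel * n_t)
    M = Ss @ Ss.T / sigma**2          # assumed iid Gaussian noise with std sigma
    return 1.0 / np.linalg.det(M)     # det(M^-1) = 1 / det(M)


# Hypothetical toy model with five sensor heights (stand-in for the FE model).
t = np.linspace(0.0, 1.0, 200)
y = np.arange(1, 6)[:, None] / 5.0


def forward(theta):
    return theta[0] * y * np.sin(2 * np.pi * t) + theta[1] * y**2 * np.cos(2 * np.pi * t)


S = sensitivities(forward, [1.0, 2.0])
J = {s: j_fim(S, s, sigma=0.05) for s in itertools.combinations(range(5), 2)}
best = min(J, key=J.get)          # setup with the smallest det(M^-1)
```

Since the sensitivities are computed once and each setup only needs a small matrix product and a determinant, evaluating all combinations is cheap, which is why the FIM step takes seconds in practice.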

2.3.2 DoE using mean-squared error

Often, the material parameters of a numerical model are completely unknown, or only a certain range is known. Hence, the aim of an optimal design can, for instance, be parameter identification, i.e. obtaining good estimates of the parameters for further calculations. When the exact, true parameters \({\varvec{\theta }}\) are known, the mean-squared error between the estimated parameters \({\varvec {\hat{\theta }}}\) and the true ones \({\varvec{\theta }}\)

can serve as the objective function for finding the optimal design, where \({\varvec {\hat{\theta }}}\) depends on the sensor location vector \({\mathbf {y}} = [y^{(1)}, y^{(2)}, \dots , y^{(n^\mathrm {sens})}]\). As a first step to obtain parameter estimates, an initial guess is needed. Afterwards, the best fit between the noise-corrupted data and the data obtained with the estimated parameters is sought by adjusting the model parameters. The cost function, as found in [33], can be written as

where \(n^\mathrm {sens}\) denotes the number of sensors and \(n^\mathrm {t}\) the number of timesteps. Additionally, the number of sensors must be greater than or equal to the number of unknown parameters. The optimization process described above needs to be conducted a statistically significant number of times k for each combination of the sensor location vector \({\mathbf {y}}\) (setup) and for every error description. The average

is then taken to find the optimal setup and thus the optimal sensor placement per error description.

Two options for calculating the best design are considered in this paper. First, the known true parameters \({\varvec{\theta }}\) can be used, and minimizing the difference between the mean estimated parameters \({\varvec {\bar{\theta }}}\) and the exact ones \({\varvec{\theta }}\)

leads to the optimal design. Second, as in most cases, the true solution is not known, and the empirical covariance matrix is calculated from the estimated parameters \({\varvec {\hat{\theta }}}\) and their mean \({\varvec {\bar{\theta }}}\) as in [29]

Any optimality criterion can be used to find the optimal design. For consistency, the D-optimality criterion

which needs to be minimized, is chosen again, as in Eq. 10.
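The MSE procedure of this subsection can be sketched in Python with SciPy’s Nelder–Mead implementation (`scipy.optimize.minimize` with `method="Nelder-Mead"`), the algorithm named in Sect. 3.2.2. Because the cost function and the two MSE criteria are not reproduced here, the least-squares cost, the squared Euclidean norm for option 1 and the determinant of the unbiased empirical covariance (`np.cov`, normalized by k − 1) for option 2 are assumptions. The toy model with normalized parameters replaces the FE model, and all names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def fit(forward, u, setup, theta0):
    """Least-squares fit of the model to noisy data u at the sensors in `setup` (assumed cost)."""
    idx = list(setup)

    def cost(theta):
        return np.sum((u[idx] - forward(theta)[idx]) ** 2)

    return minimize(cost, theta0, method="Nelder-Mead").x


def mse_criteria(forward, theta_true, theta0, setup, noise, k, rng):
    """Return (J1, J2) from k repeated fits; noise(v, rng) produces fictitious data."""
    theta_true = np.asarray(theta_true, dtype=float)
    v = forward(theta_true)
    est = np.array([fit(forward, noise(v, rng), setup, theta0) for _ in range(k)])
    theta_bar = est.mean(axis=0)                    # average estimate over k samples
    j1 = np.sum((theta_bar - theta_true) ** 2)      # option 1: squared bias of the mean
    j2 = np.linalg.det(np.cov(est, rowvar=False))   # option 2: D-optimality of empirical Cov
    return j1, j2


# Hypothetical toy model with normalized parameters (stand-in for E1, E2).
t = np.linspace(0.0, 1.0, 200)
y = np.arange(1, 6)[:, None] / 5.0


def forward(theta):
    return theta[0] * y * np.sin(2 * np.pi * t) + theta[1] * y**2 * np.cos(2 * np.pi * t)


def random_only(v, rng):
    return v + rng.normal(0.0, 0.05, v.shape)


def systematic_and_random(v, rng):
    return 1.05 * v + rng.normal(0.0, 0.05, v.shape)


theta_true = np.array([1.0, 2.0])
theta0 = 0.8 * theta_true            # 80 % initial guess, as in Sect. 3.2.2
rng = np.random.default_rng(1)
j_rand = mse_criteria(forward, theta_true, theta0, (3, 4), random_only, k=30, rng=rng)
j_sys = mse_criteria(forward, theta_true, theta0, (3, 4), systematic_and_random, k=30, rng=rng)
```

With purely random noise, option 1 stays close to zero, while the 5 % systematic error shifts the mean estimate and is picked up by option 1; the FIM, which does not depend on the data, cannot see such a bias. With real Young’s moduli of order 1e9, normalizing the parameters (e.g. by the initial guess) keeps the default Nelder–Mead tolerances meaningful.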

2.3.3 Combination of Fisher Information Matrix and mean-squared error

Here, the authors propose a new method that combines the FIM and MSE approaches and exploits the advantages of both. First, such a combination accounts for random as well as systematic errors in the DoE. Second, the computational costs of the MSE approach can be reduced drastically.

As described in Sects. 2.3.1 and 2.3.2, the FIM approach can only take random errors into account, while the MSE approach is able to handle both kinds of error, random and systematic. Therefore, it is advisable to consider which types of error can be inherent in the measurements. When only random errors can be assumed, the FIM is sufficient; if errors can also be systematic, the MSE method is recommended.

However, the MSE method is computationally much more costly than the FIM approach. With the FIM method, the best designs can be calculated within seconds on a normal workstation computer. In contrast, the MSE approach leads to significantly higher computation times, even when parallelization and a computational cluster are used. The main reasons are the large number of samples per sensor setup needed for a statistical treatment and the large number of possible sensor combinations that need to be evaluated, depending on the optimization algorithm.

When errors are not only random, it is advisable to reduce the number of combinations for which the MSE is calculated. Two objectives need to be fulfilled: first, the variance is kept small by the FIM-based criterion (\({\mathcal {J}}_\mathrm {FIM}\)), and second, an MSE approach (\({\mathcal {J}}_\mathrm {MSE}^{(1),(2)}\)) leads to a small bias. Therefore, a three-step approach is suggested here:

1. Calculation of the best designs using an optimality criterion on the FIM, followed by the selection of a certain number of best designs, e.g. the best 10 or 100, the best 20%, or the setups whose value of the optimality criterion \({\mathcal {J}}_\mathrm {FIM}^\mathrm {select}\) is below a set limit.
2. Calculation of the MSE for the selected designs and re-sorting of the designs.
3. Weighting of both approaches to find the optimal sensor design; see Eq. 17.
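The three steps above can be sketched in Python as follows. Eq. 17 is not reproduced here, so `j_glob` follows an assumed reading of its verbal description: both terms are normalized by their maxima over the selected setups, the FIM term is rescaled by the ratio of the minimum values of \({\mathcal {J}}_\mathrm {MSE}^{(p)}\) and \({\mathcal {J}}_\mathrm {FIM}^\mathrm {select}\), and the two terms are weighted with \(\alpha\) and \(1-\alpha\). The factor-of-two preselection mirrors the limit used in Sect. 3.2.2; the criterion values in the usage example are hypothetical.

```python
import numpy as np


def preselect(j_fim_all, factor=2.0):
    """Step 1: indices of setups with J_FIM <= factor * min(J_FIM)."""
    j_fim_all = np.asarray(j_fim_all, dtype=float)
    return np.flatnonzero(j_fim_all <= factor * j_fim_all.min())


def j_glob(j_fim_sel, j_mse_sel, alpha):
    """Step 3: weighted criterion for the preselected setups (assumed reading of Eq. 17)."""
    j_fim_sel = np.asarray(j_fim_sel, dtype=float)
    j_mse_sel = np.asarray(j_mse_sel, dtype=float)
    ratio = j_mse_sel.min() / j_fim_sel.min()   # compensates the different scales
    fim_term = ratio * j_fim_sel / j_fim_sel.max()
    mse_term = j_mse_sel / j_mse_sel.max()
    return alpha * fim_term + (1.0 - alpha) * mse_term


# Hypothetical criterion values for five setups (not results from the paper).
j_fim_all = np.array([1.0, 1.5, 3.0, 1.9, 5.0])
sel = preselect(j_fim_all)                # indices 0, 1 and 3 pass the 2x limit
j_mse_sel = np.array([0.4, 0.1, 0.3])     # step 2: MSE only for the selected setups
scores = j_glob(j_fim_all[sel], j_mse_sel, alpha=0.3)
best = sel[np.argmin(scores)]             # index of the optimal setup
```

Setting `alpha=1.0` reproduces the FIM ranking and `alpha=0.0` the MSE ranking, which matches the limiting cases of the weighting factor; the expensive MSE step only runs on the setups returned by `preselect`.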

The optimal design is a trade-off between minimizing the variance using the FIM and reducing the bias by calculating the MSE. To obtain a common optimality criterion, both parts are weighted and summed. The overall optimality criterion \({\mathcal {J}}_\text {glob}\) is then calculated by

where \(0 \le \alpha \le 1\) is the weighting factor and \(p \in \{1,2\}\) distinguishes between the two options of Sect. 2.3.2 for calculating the objective value of the MSE approach. \({\mathcal {J}}_\text {FIM}^\mathrm {select}\) and \({\mathcal {J}}_\text {MSE}^{(p)}\) are normalized by the maximum value of the respective optimality criterion per error description over the chosen setups. Additionally, the different scaling of the two methods is compensated by multiplying \({\mathcal {J}}_\mathrm {FIM}^\mathrm {select}/{\max ({\mathcal {J}}_\text {FIM}^\mathrm {select})}\) by the ratio of the minimum values of \({\mathcal {J}}_\mathrm {MSE}^{(p)}\) and \({\mathcal {J}}_\mathrm {FIM}^\mathrm {select}\). Global optimality of the criterion in Eq. 17 cannot be guaranteed for either the FIM or the MSE approach; however, since the criterion seeks a sensor distribution with both small variance and small bias, it leads to a setup in line with this goal. As both approaches consider the variance, the obtained sensor setup should be optimal in terms of variance and good in terms of bias.

3 Application on a tower-like structure

To validate the findings of the DoE process, a tower-like structure is tested under laboratory conditions. A large number of sensors is used in order to reveal the differences between setups that leave out several sensor positions. The exhaustive search over all possible sensor combinations serves as the reference. Therefore, the number of unknown parameters, and hence the number of sensors, ought to be kept small so that the computations for all sensor setups finish in a reasonable amount of time. Here, the parameters of interest are two Young’s moduli; therefore, the number of sensors is chosen to be three, following general findings on optimal experimental design: the optimal number of sensors is given by \(0.5 \cdot n^\mathrm {par} (n^\mathrm {par}+1)\), where \(n^\mathrm {par}\) denotes the number of parameters to be identified. This rule guarantees both that sufficient information is provided (e.g. leading to non-singular Fisher matrices) and that efficiency is gained by keeping the number of measurements as low as possible [17].
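The sensor-count rule and the numbers of candidate setups quoted in this section can be checked with a few lines of Python; the helper name `n_sensors` is hypothetical.

```python
from math import comb


def n_sensors(n_par):
    """Rule of thumb from [17] as quoted above: 0.5 * n_par * (n_par + 1)."""
    return n_par * (n_par + 1) // 2


print(n_sensors(2))   # 3 sensors for theta = (E1, E2)
print(comb(24, 3))    # 2024 candidate setups for the FE model
print(comb(23, 3))    # 1771 setups after excluding the position at 168 cm
```

The cubic growth of the binomial coefficient with the number of sensors is what makes the exhaustive reference search feasible only for small sensor counts.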

3.1 Laboratory experiments on a cantilever beam

3.1.1 Experimental setup

A PVC pipe DN 100 (nominal diameter = \({100}\; \mathrm{mm}\)) with a free length \(\ell = {2.88}\; \mathrm{m}\) serves as the tower-like structure, as shown in Fig. 2a, b. The clamped support is realized by a bucket filled with concrete. Along the length there are two distinct cross sections: the upper two thirds (Section A–A) consist of a single PVC pipe, while in the lower third (Section B–B) a second, sliced PVC pipe of the same diameter is pulled over the inner one, which leads to different stiffnesses in the two parts.

Sketch of the experimental setup of (a) the cantilever beam (PVC pipe, DN 100) with two different sections: Section B–B in lower third (two PVC pipes around each other) and Section A–A in the upper two thirds, green box 24 discrete and equally distributed sensor positions with acceleration measurements in x- and z-direction, (b) PVC pipe with 24 measurement locations with (c) two 1D sensors on a cube and (d) 3D sensor attached with magnets (color figure online)

Moreover, a thin metal strip is attached to the inner pipe from the outside along the total height so that the accelerometers can be mounted magnetically. The acceleration data are recorded in both horizontal directions x and z at 24 equally distributed locations over the height with a spacing of \({12}\; \mathrm{cm}\), cf. Fig. 2a.

For the measurements, two different kinds of sensors are used, as depicted in Fig. 2c, d. To gain information in two directions, either two 1D sensors (PCB 352C33, Fig. 2c) are mounted on a cube, or only two channels of a 3D sensor (PCB 356A16, Fig. 2d) are used for the recordings. In total, 48 acceleration channels and one channel with the signal of the impulse hammer (PCB 086D20), which is used for excitation, are to be analyzed. Unfortunately, the sensors at the height of \({168}\; \mathrm{cm}\) were malfunctioning, and therefore both corresponding channels are removed from the data set. In total, signals of 47 channels (46 acceleration channels and the hammer signal) are taken into consideration for the further analyses.

3.1.2 Optimal sensor placement using experimental data

Although the parameters of interest, the Young’s moduli, cannot be identified directly from the measured acceleration time histories, the numerically found DoE needs to be evaluated on the basis of the experimental data. Therefore, the identified modal parameters, i.e. the eigenfrequencies, are used to assess the quality of the obtained DoE setups.

To reduce time and costs, the aim is to find the best setup with three out of the 24 sensor positions, given that the first six eigenfrequencies are of interest. Due to the sensor malfunction, the two sensors at the height of \({168}\; \mathrm{cm}\) had to be excluded, so the number of possible sensor combinations is reduced to \(\left( {\begin{array}{c}23\\ 3\end{array}}\right) = 1771\). According to Sect. 2.2 and Eq. 7, the norms for all 1771 sensor combinations are calculated and ordered.

3.2 Numerical representation of the cantilever beam

3.2.1 Numerical FEM model

To find the optimal sensor positions before the structure is equipped with any measurement device, a numerical FEM model of the pipe is used. To represent the different cross sections in the lower third and the upper two thirds, different Young’s moduli \(E_i\) are assigned to the respective parts. The Young’s modulus of the lower part is 2.4 times higher than that of the upper part. The density is set to a value found in the literature, \(\rho = {1390}\;{\mathrm{kg}/\hbox {m}^3}\). The general setup for the numerical investigations and the values of the material properties are shown in Fig. 3.

Sketch of the cantilever beam of height \(\ell\) with two different Young’s moduli: \(E_1\) in the lower third and \(E_2\) in the upper two thirds, 24 discrete and equally distributed sensor positions, cross sections A–A and B–B; \(\ell = {2.88}\; \mathrm{m}\), \(d_\mathrm {o} = {1.10\mathrm{e}{-}1}\; \mathrm{m}\), \(t_\mathrm {w} = {2.7{\hbox {e}{-}}3}\; \mathrm{m}\), \( E_1 = 5.28 {\text{e}} 9 \; {{\text N}/{\text m}^2} \) and \( E_2 = 2.20 {\text{e}} 9 \; {{\text N}/{\text m}^2} \) and excitation force \(q_0\)

A Bernoulli beam model

represents the PVC pipe, which is discretized with 24 elements: 8 elements belong to the lower third and 16 elements form the upper two thirds. Damping is excluded from the dynamic analysis, as its effect on the optimal sensor positions is considered negligibly small. The structure is excited harmonically at the top node with \(f = 0.9 \cdot f_6\) (90% of the \(6^\text {th}\) eigenfrequency) and an amplitude of \(q_0 = {5}\; \mathrm{N}\), and only the steady-state response according to [30] is taken into account in the analyses.
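The Bernoulli beam model and the excitation described above can be sketched in Python with standard Euler–Bernoulli beam elements (cubic Hermite shape functions, consistent mass matrix) in one bending plane, using the dimensions and material values from Fig. 3. Assumptions: a single tube cross section is used for both parts, so the stiffer lower third is represented only through \(E_1\); the x- and z-directions are decoupled and identical, so the sixth eigenfrequency of the full model is taken as the third planar bending frequency; and the time window and sampling are arbitrary. The function names are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh, solve


def element_matrices(E, I, rho, A, le):
    """Euler-Bernoulli element stiffness and consistent mass; DOFs (w1, phi1, w2, phi2)."""
    k = E * I / le**3 * np.array([[12, 6 * le, -12, 6 * le],
                                  [6 * le, 4 * le**2, -6 * le, 2 * le**2],
                                  [-12, -6 * le, 12, -6 * le],
                                  [6 * le, 2 * le**2, -6 * le, 4 * le**2]])
    m = rho * A * le / 420 * np.array([[156, 22 * le, 54, -13 * le],
                                       [22 * le, 4 * le**2, 13 * le, -3 * le**2],
                                       [54, 13 * le, 156, -22 * le],
                                       [-13 * le, -3 * le**2, -22 * le, 4 * le**2]])
    return k, m


def cantilever(E_el, length=2.88, d_o=0.110, t_w=0.0027, rho=1390.0):
    """Global K and M of a clamped-free tube with one Young's modulus per element."""
    n_el = len(E_el)
    le = length / n_el
    d_i = d_o - 2 * t_w
    A = np.pi / 4 * (d_o**2 - d_i**2)     # tube cross-sectional area
    I = np.pi / 64 * (d_o**4 - d_i**4)    # second moment of area
    n_dof = 2 * (n_el + 1)
    K = np.zeros((n_dof, n_dof))
    M = np.zeros((n_dof, n_dof))
    for e, E in enumerate(E_el):
        k, m = element_matrices(E, I, rho, A, le)
        K[2 * e:2 * e + 4, 2 * e:2 * e + 4] += k
        M[2 * e:2 * e + 4, 2 * e:2 * e + 4] += m
    return K[2:, 2:], M[2:, 2:]           # clamped base: drop w and phi of node 0


E_el = [5.28e9] * 8 + [2.20e9] * 16       # E1 in the lower third, E2 above
K, M = cantilever(E_el)
f = np.sqrt(eigh(K, M, eigvals_only=True)) / (2 * np.pi)   # planar eigenfrequencies in Hz

# Undamped steady state for q0 * sin(Omega t) at the top node.
# Assumption: f_6 of the 3D model equals the 3rd planar bending frequency f[2].
Omega = 2 * np.pi * 0.9 * f[2]
F = np.zeros(K.shape[0])
F[-2] = 5.0                               # q0 = 5 N on the top translational DOF
X = solve(K - Omega**2 * M, F)            # displacement amplitudes
t = np.linspace(0.0, 1.0, 2001)
acc = -Omega**2 * np.outer(X[0::2], np.sin(Omega * t))     # (24 nodes, n_t) accelerations
```

Without damping, the steady-state response is a single harmonic at the excitation frequency, so the acceleration amplitudes follow directly from one linear solve with the dynamic stiffness \(K - \Omega^2 M\); the rows of `acc` are the exact data v to which errors are added.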

3.2.2 Optimal sensor placement using FEM model

The vector of the parameters of interest \({\varvec{\theta }} = (E_1,\,E_2)^\intercal\) consists of the Young’s moduli of the two sections (cf. Sect. 3.2.1). To account for modeling errors that weaken the structure, 80% of the true \(E_1\) and \(E_2\), respectively, are used as the initial guess for the optimization process. The experimental data are represented by acceleration time histories in two orthogonal directions (see Fig. 3) at all 24 nodes of the beam. The measurement data were created artificially so that the optimal sensor setup can be found before conducting the experiment itself. To obtain fictitious measurement data, artificial noise was added to the “exact” signals computed with the exact Young’s moduli. In total, nine different combinations of error types and levels, shown in Table 1, were used.

In total, \({\left( {\begin{array}{c}24\\ 3\end{array}}\right) } = 2024\) different combinations of sensor positions are possible and have to be evaluated for each of the nine error descriptions. As mentioned in Sect. 2.3.3, the FIM and MSE approaches are combined in order to save computational time. In this study, those designs from the FIM calculation are chosen whose value of the optimality criterion is less than or equal to twice the value of the best design, which means

\[ {\mathcal {J}}_\mathrm {FIM}^\mathrm {select} \le 2 \cdot \min {\mathcal {J}}_\mathrm {FIM}. \tag{19} \]

Continuing with the selected best setups, the fictitious acceleration measurements were fitted \(k = 1000\) times with the Nelder–Mead algorithm [21], starting from the initial guess of the Young’s moduli \(E_i\), to obtain a statistically sufficient number of samples [20, 27]. This procedure was followed for the list of setups chosen after the FIM evaluation for each of the nine error descriptions. The combined optimality criterion from Eq. 17 is then used to obtain the ordered list of optimal setups. Here, the weighting factor is set to \(\alpha = 0.3\), because the influence of the FIM preselection on the optimal design is already high in the combined approach. Choosing a lower value for \(\alpha\) gives more weight to the MSE-based optimality criterion, which has not been considered in the preselection process. The setup with the smallest value of \({\mathcal {J}}_\text {glob}^{(p)}\) is chosen as the optimal one.

4 Results

This section presents the results of the experimental testing and the numerical investigations, followed by a comparison between them.

4.1 Experimental study

For the experimental tests, one data set is used, consisting of the acceleration time histories of each of the 46 working sensors and the force time history of the impulse hammer, which excites the structure horizontally at the top of the pipe at an angle of \({-135}^{\circ }\) w.r.t. the z-axis, as depicted in Fig. 3. The gathered data are analyzed with the MATLAB toolbox MACEC [25] using data-driven Stochastic Subspace Identification (SSI). The system orders are calculated from 2 to 50 in steps of 2, the number of blocks is set to 30, and the model order is 12, which is twice the number of eigenfrequencies of interest. The first six mode shapes with their corresponding eigenfrequencies, identified using all available sensor data, are displayed in Table 2. As expected, due to the slicing of the outer pipe and the attachment of the metal strip and the acceleration sensors along the resulting gap, the eigenfrequencies of orthogonal mode shapes differ slightly between the two directions.

Following this procedure, the eigenfrequencies \(f_{c}^{(3)}\) are calculated for every sensor setup with three sensor locations, using the three pairs of acceleration time histories as output data and the impulse hammer’s force as input data. Despite careful peak-picking and adjustment of the SSI settings, errors may have been introduced during the eigenfrequency identification process. The obtained frequencies are used to evaluate each setup according to Eq. 7. The ten setups with the best values of the experimental optimality criterion \({\mathcal {J}}_\mathrm {exp}\) are presented in Table 3.

Accordingly, the distribution of the identified eigenfrequencies among the first six eigenfrequencies is given in Fig. 4a, where the number of setups is plotted over the number of identified eigenfrequencies for setups with three out of 24 sensor positions, based on the experimental data. Only in a few cases can all six eigenfrequencies be identified; in most cases, five or four of the first six eigenfrequencies are identified. All sensor setups in which at most half of the eigenfrequencies are identified (Eq. 7, \(n_\mathrm {miss.f} \ge 3\)) are excluded from further investigations.

Additional information is shown in Fig. 4b, where the number of setups is plotted over the number of identified eigenfrequencies per orthogonal pair of the first six eigenfrequencies. For the first pair, i.e. the first and second eigenfrequency, mostly only one of them is identified. The second pair, \(f_{3,4}^{(3)}\), is usually represented by both eigenfrequencies, and in around \({25}{\%}\) of the cases only one of them can be measured. For the last pair, it is very likely that both eigenfrequencies \(f_{5}^{(3)}\) and \(f_{6}^{(3)}\) are identifiable.

4.2 Numerical investigation

The numerical FE model described in Sect. 3.2.1 is used to apply the combined FIM and MSE approach from Sect. 2.3.3, as well as each of the two approaches individually.

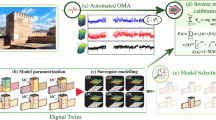

4.2.1 Results of FIM calculation

The D-optimality value is calculated for each of the 2024 possible sensor setups. The best value, \(\min {\mathcal {J}}_\mathrm {FIM} = 5.1945\mathrm{e}{-}7\), is found for the setup {3, 4, 24}, as can be seen in Table 4 and Fig. 5. Consequently, according to Eq. 19, the further considered setups need to satisfy \({\mathcal {J}}_\mathrm {FIM}^\mathrm {select} \le 1.0389\mathrm{e}{-}6\). This constraint leads to 82 out of 2024 chosen setups, i.e. around \(4\%\), which saves around \(96\%\) of the computational time for the subsequent MSE approach. The ten best of the setups preselected by the FIM approach are presented in Table 4.

4.2.2 Results of MSE calculation

To calculate the optimal sensor placement, both approaches from Eqs. 14 and 16 are used. The optimal sensor setups per error description are given in Table 5 and Fig. 5.

4.2.3 Results of combined method

Furthermore, the results of the combined method according to Eq. 17 are presented in Table 6 and Fig. 5. Some differences in the optimal setups can be observed. The combined approach leads to optimal setups for \(e = 4 \dots 8\) that differ from those obtained with Eq. 14. Also, compared to the MSE approach using the covariance matrix in Eq. 16, the combined approach yields different optimal designs for every investigated error description except the \(9^\mathrm {th}\) one. Naturally, the number of optimal setups that differ from those of the combined method depends on the weighting factor \(\alpha\) in Eq. 17. For \(\alpha = 0\), the combined method is equivalent to one of the MSE approaches, whereas for \(\alpha = 1\) it corresponds to the FIM approach alone.

Additionally, Fig. 5 shows that the best setups of the combined method are more aligned across the different error descriptions than those of the individual approaches, so that the best setup depends less on the actual data error or error description. Calculating the experimental criterion \({\mathcal {J}}_\mathrm {exp}\) for every error description leads to similar values for the different errors. This indicates that the DoE approach is robust with respect to the error description.

4.3 Comparison of numerical and experimental results

Comparing the optimal sensor setups per error description between the numerical and experimental investigations is not an easy task because, as stated in Sect. 4.1, in most cases not all eigenfrequencies and corresponding mode shapes can be identified with a setup consisting of three sensor positions. Therefore, the introduction of Eq. 7 is very useful, because the integer part of \({\mathcal {J}}_\mathrm {exp}\) corresponds directly to the number of unidentified frequencies for the current setup. The corresponding values of Eq. 7 are also given in Table 6 to allow a comparison of the numerical with the experimental results. The values of \({\mathcal {J}}_\mathrm {exp}\) show that for the ten best setups of the combined approach, with Eq. 14 and with Eq. 16, respectively, one of the first six eigenmodes is always not identified. This is to be expected from Fig. 4a, because only a small number of setups is able to identify all of the first six eigenfrequencies of the structure, while the majority identifies four or five of them.

Fig. 5 gives an overview of the best setups per error description obtained from the numerical simulation and of the three best setups from the experiment. Comparing these best setups reveals differences between the proposed sensor positions. Again, the main point is that the experimentally obtained best setups identify all of the first six eigenfrequencies; however, Fig. 4a shows that this holds for only 20 of the 1771 sensor setups, i.e., about \({1.1}{\%}\). For the remaining 1751 sensor setups, at least one of the first six eigenfrequencies cannot be detected. Applying the DoE approaches leads to setups in which one of the first six eigenmodes is missing.
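The counts quoted above can be checked with a few lines of arithmetic. The total of 1771 setups equals the binomial coefficient \(\binom{23}{3}\), which is consistent with choosing three sensor positions out of 23 candidates; the number 23 is inferred from this identity rather than stated in this section.

```python
from math import comb

n_setups = comb(23, 3)   # three sensors chosen from 23 candidate positions (inferred)
n_all_six = 20           # setups identifying all of the first six eigenfrequencies
n_missing = n_setups - n_all_six
share = 100 * n_all_six / n_setups

print(n_setups, n_missing, f"{share:.1f} %")
```

This reproduces the 1751 setups with at least one undetected eigenfrequency and the share of about 1.1 %.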

Fig. 5 Best sensor setup per error description e for the combined method using \({\mathcal {J}}_\mathrm {glob}^{(1)}\) (FIM + MSE with Eq. 14, black circle) and \({\mathcal {J}}_\mathrm {glob}^{(2)}\) (FIM + MSE with Eq. 16, black cross), for MSE using Eq. 14 (gray circle) and MSE using Eq. 16 (gray cross), and the best setup for FIM (gray circle with asterisk) in the first column, in comparison with the experimental results for the three best setups obtained (numbers 1 to 3)

5 Discussion

5.1 Combined method

The combination of the FIM and MSE methods has three main advantages. First, the FIM approach, which can only handle random errors, is extended so that systematic errors are also taken into account through the second step, in which the MSE is calculated for each setup. Second, and even more important, the computing time for the MSE can be reduced by preselecting the sensor setups based on the optimality criterion applied to the FIM. Third, the approach is robust with respect to the different error descriptions. In this paper, it is suggested to select only setups that satisfy Eq. 19. This leaves around \({4}{\%}\) of all setups, so the computation time for the MSE step can be reduced by \({96}{\%}\). Assuming parallel computing in both cases, this offsets the long computation time, which is the main disadvantage of the MSE approach.
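A minimal sketch of the two-step procedure follows. It is built on a hypothetical linear model \(y = S\theta + b + \varepsilon\) with random sensitivities \(S\), a systematic error \(b\) and white noise of standard deviation \(\sigma\), so that both criteria have closed forms: the FIM criterion is assumed to be D-optimality, \(-\log\det(S^\top S/\sigma^2)\), and the MSE of the least-squares estimate is \(\mathrm{tr}(\mathrm{Cov}) + \Vert \mathrm{bias}\Vert^2\). Keeping the best 4 % of setups by \({\mathcal {J}}_\mathrm {FIM}\) is only a stand-in for Eq. 19, whose exact form is not reproduced here. All names (`S_all`, `b_all`, `SIGMA`, `stack`, `j_fim`, `j_mse`) and sizes are illustrative assumptions; in the paper the MSE is evaluated on the finite element model, which is what makes it expensive.

```python
import itertools
import numpy as np

# Hypothetical problem sizes: 23 candidate positions, 3 sensors,
# 2 model parameters, 5 time samples per sensor.
N_POS, N_SENS, N_PAR, N_T = 23, 3, 2, 5
SIGMA = 0.1  # standard deviation of the random (aleatoric) error

rng = np.random.default_rng(seed=1)
S_all = rng.normal(size=(N_POS, N_T, N_PAR))   # hypothetical sensitivities
b_all = 0.05 * rng.normal(size=(N_POS, N_T))   # hypothetical systematic error


def stack(setup):
    """Stack sensitivities and systematic errors of the chosen positions."""
    idx = list(setup)
    return S_all[idx].reshape(-1, N_PAR), b_all[idx].reshape(-1)


def j_fim(setup):
    """Assumed D-optimality criterion: -log det(FIM); random errors only."""
    S, _ = stack(setup)
    sign, logdet = np.linalg.slogdet(S.T @ S / SIGMA**2)
    return -logdet if sign > 0 else np.inf


def j_mse(setup):
    """MSE of the least-squares estimate: trace of covariance + squared bias."""
    S, b = stack(setup)
    G = np.linalg.inv(S.T @ S)
    bias = G @ S.T @ b  # effect of the systematic error on the estimate
    return SIGMA**2 * np.trace(G) + bias @ bias


setups = list(itertools.combinations(range(N_POS), N_SENS))
fim_vals = np.array([j_fim(s) for s in setups])

# Step 1: keep the best 4 % by J_FIM (stand-in for Eq. 19).
n_keep = int(np.ceil(0.04 * len(setups)))
preselected = [setups[i] for i in np.argsort(fim_vals)[:n_keep]]

# Step 2: evaluate the expensive criterion only on the preselected setups.
mse_pre = {s: j_mse(s) for s in preselected}
best = min(mse_pre, key=mse_pre.get)

# Reference: exhaustive MSE search over all setups.
ref = min(setups, key=j_mse)

print(f"{len(setups)} setups, {n_keep} preselected, "
      f"{1 - n_keep / len(setups):.0%} of MSE evaluations saved")
print("preselected best:", best, "exhaustive best:", ref)
```

Replacing `j_mse` with a simulation-based estimate leaves the structure of the sketch unchanged; only the cost per call grows, which is where the preselection pays off.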

The optimality criterion of the combined method, \({\mathcal {J}}_\mathrm {glob}\), uses the optimality criteria of both the FIM and the MSE approach to find the optimal sensor setup. Since the preselection based on \({\mathcal {J}}_\mathrm {FIM}\) already filters the sensor position candidates strongly, it is recommended to use \(0 \le \alpha < 0.5\) in Eq. 17, because the emphasis then lies on the optimality criterion for the MSE, which has not been taken into account in the preselection.

All best sensor setups found by the FIM and MSE approaches alone, as well as by the combined approach, lead to sensor placements for which one of the first six eigenfrequencies was not found in the experiment. Fig. 5 shows that the sensor setups suggested by the combined approach differ from those of the FIM and MSE methods alone; however, as the values of the experimental optimality criterion \({\mathcal {J}}_\mathrm {exp}\) in Tables 4, 5 and 6 show, those of the combined approach are not worse than those of the exhaustive search. For comparability, the MSE was calculated for all possible sensor position combinations; therefore, it can serve as the reference for any other setup considered. In summary, preselecting a small number of sensor setups from all possible combinations does not corrupt the final best setups in a way that deteriorates the value of the experimental optimality criterion. Hence, the combined approach drastically reduces the computation time while leading to results similar to those of the exhaustive search.

5.2 Comparison of numerical simulation and experimental testing

In order to compare the numerical and the experimental results, one needs to keep in mind that the parameters of interest for the evaluation criteria are not the same. The numerical DoE approach targets the estimation of the Young’s moduli, whereas the experiment aims at identifying the first six eigenfrequencies. Nevertheless, both kinds of parameters describe the structure from a global point of view, and the results show that the DoE for identifying the Young’s moduli is confirmed to a large extent by the experimental findings.

Evaluating the experimental data shows that only a minority of sensor setups is able to identify all of the first six eigenfrequencies considered in this investigation (cf. Fig. 4). In fact, only 20 out of 1771 setups, corresponding to \({1.1}{\%}\) of all experimental setups, allowed all six eigenfrequencies to be identified. However, every optimal sensor setup per error description and optimality criterion listed in Table 6 leads to five out of the first six eigenmodes. This result is still satisfactory: owing to the circular cross section of the structure, the mode shapes appear in pairs, i.e., for each eigenmode a nearby orthogonal eigenmode exists, so the missing eigenfrequency can be estimated from its partner even when only five eigenfrequencies are identified.

Another finding of the investigations is that both the numerical and the experimental analyses, which are subject to errors originating, e.g., from the model and the numerical calculation itself or from the measurements, lead to optimal sensor placements in which the sensors are not equally distributed over the height when three sensor positions are to be chosen. This contradicts the assumption that an equal sensor distribution is adequate and that no further investigations are needed before conducting an experiment or monitoring a structure.

6 Conclusions

As the experimental results show, it is crucial to find good sensor positions in order not to miss any relevant information, e.g., eigenfrequencies or Young’s moduli of the structure. With the optimal sensor placements, five out of the first six considered eigenfrequencies were always identified. Additionally, using the almost perfect rotational symmetry of the cross section, the range of the missing frequency and the corresponding mode shape can be estimated.

With the combined approach, both random and systematic errors can be included in the data, while the computation time is greatly reduced compared with applying the MSE approach alone. Since the selection process via the criterion applied to the FIM is already very strong, it is recommended to use a small weighting factor \(\alpha < 0.5\) in Eq. 17. Comparing the results of the combined approach with the reference obtained from the MSE approach, the values of the experimental optimality criterion are on the same level for both. Thus, the combined approach reduces the computation time drastically compared with the MSE method alone without worsening the suggested best sensor setups. Moreover, the combined approach yields feasible sensor setups that can be used directly.

The results of the combined method and the experimental results do not coincide exactly. Neither the combined method nor the FIM approach nor the MSE approach alone suggested any of the sensor setups in which all of the first six considered eigenfrequencies are identified. A plausible explanation is that model updating was intentionally omitted before calculating the optimal sensor placement, so the differences between the numerical model and the laboratory structure persist throughout the calculations. Another source of the differences is the error inherent in the measurement data, which might not have been described well by any of the nine error descriptions considered in this work. Further research on error estimation is therefore needed. Additionally, the starting model has an influence, as the initial guess for the Young’s moduli affects the identification of their actual values.

In conclusion, the combined method is applicable to optimal sensor placement, as also demonstrated by the small-scale experimental application. The main goal for future work is to transfer the findings to larger structures such as tall buildings, broadcasting towers or wind turbines.

References

Atkinson A, Donev A, Tobias R (2007) Optimum experimental designs, with SAS, vol 34. Oxford University Press, Oxford

Bandemer H, Bellmann A (1994) Statistische Versuchsplanung. BG Teubner

Bardow A (2006) Optimal experimental design for ill-posed problems. In: 16th European symposium on computer aided process engineering and 9th international symposium on process systems engineering. Elsevier, Amsterdam, pp 173–178

Bardow A (2008) Optimal experimental design of ill-posed problems: the METER approach. Comput Chem Eng 32(1–2):115–124

Brownjohn J, Koo KY, Basagiannis C, Alskif A, Ngonda A (2013) Vibration monitoring and condition assessment of the University of Sheffield Arts Tower during retrofit. J Civ Struct Health Monit 3(3):153–168

Castellaro S, Perricone L, Bartolomei M, Isani S (2016) Dynamic characterization of the Eiffel Tower. Eng Struct 126:628–640

Chang M, Pakzad SN (2014) Optimal sensor placement for modal identification of bridge systems considering number of sensing nodes. J Bridge Eng 19(6):04014019

Coleman H, Steele W Jr (1989) Experimentation and uncertainty analysis for engineers. Wiley, New York

Fedorov VV, Hackl P (2012) Model-oriented design of experiments, vol 125. Springer Science & Business Media, New York

Fedorov VV, Leonov SL (2013) Optimal design for nonlinear response models. CRC Press, Boca Raton

Goodwin GC, Payne RL (1977) Dynamic system identification: experiment design and data analysis. Academic Press, New York

Haber E, Horesh L, Tenorio L (2008) Numerical methods for experimental design of large-scale linear ill-posed inverse problems. Inverse Probl 24(5):055012

Haber E, Horesh L, Tenorio L (2010) Numerical methods for the design of large-scale nonlinear discrete ill-posed inverse problems. Inverse Probl 26(2):025002

Horesh L, Haber E, Tenorio L (2010) Optimal experimental design for the large‐scale nonlinear ill‐posed problem of impedance imaging. In: Biegler L, Biros G, Ghattas O, Heinkenschloss M, Keyes D, Mallick B, Marzouk Y, Tenorio L, van Bloemen Waanders B, Willcox K (eds) Large‐scale inverse problems and quantification of uncertainty. https://doi.org/10.1002/9780470685853.ch13

JCGM/WG 1 (2008) GUM: evaluation of measurement data—guide to the expression of uncertainty in measurement. Technical report, working group 1 of the joint committee for guides in metrology

Lahmer T (2011) Optimal experimental design for nonlinear ill-posed problems applied to gravity dams. Inverse Probl 27(12):125005

Lahmer T, Rafajłowicz E (2017) On the optimality of harmonic excitation as input signals for the characterization of parameters in coupled piezoelectric and poroelastic problems. Mech Syst Signal Process 90:399–418

Li J, Zhang X, Xing J, Wang P, Yang Q, He C (2015) Optimal sensor placement for long-span cable-stayed bridge using a novel particle swarm optimization algorithm. J Civ Struct Health Monit 5(5):677–685

Lye L (2002) Design of experiments in civil engineering: are we still in the 1920s. In: Proceedings of the 30th annual conference of the Canadian Society for Civil Engineering, Montreal, Quebec

Montgomery DC, Runger GC (2003) Applied statistics and probability for engineers (with CD). Wiley, New York

Nelder JA, Mead R (1965) A simplex method for function minimization. Comput J 7(4):308–313

Papadimitriou C (2004) Optimal sensor placement methodology for parametric identification of structural systems. J Sound Vib 278(4):923–947

Papadimitriou C, Beck JL, Au SK (2000) Entropy-based optimal sensor location for structural model updating. J Vib Control 6(5):781–800

Papadimitriou C, Haralampidis Y, Sobczyk K (2005) Optimal experimental design in stochastic structural dynamics. Probab Eng Mech 20(1):67–78

Peeters B, De Roeck G (1999) Reference-based stochastic subspace identification for output-only modal analysis. Mech Syst Signal Process 13(6):855–878

Pronzato L, Pázman A (2013) Design of experiments in nonlinear models. Springer, Berlin

Reichert I, Olney P, Lahmer T (2019) Influence of the error description on model-based design of experiments. Eng Struct 193:100–109

Sadhu A, Goli G (2017) Blind source separation-based optimum sensor placement strategy for structures. J Civ Struct Health Monit 7(4):445–458

Schenkendorf R, Kremling A, Mangold M (2009) Optimal experimental design with the sigma point method. IET Systems Biol 3(1):10–23

Smith JO (2007) Introduction to digital filters with audio applications. W3K Publishing, Boca Raton

Tränkler HR, Reindl LM (2015) Sensortechnik: Handbuch für Praxis und Wissenschaft. Springer, Berlin

Uciński D (2005) Optimal measurement methods for distributed parameter system identification. CRC Press, Boca Raton

Uciński D, Patan M (2010) Sensor network design for the estimation of spatially distributed processes. Int J Appl Math Comput Sci 20(3):459–481

Wang J, Law S, Yang Q (2014) Sensor placement method for dynamic response reconstruction. J Sound Vib 333(9):2469–2482

Wu ZY, Zhou K, Shenton HW, Chajes MJ (2019) Development of sensor placement optimization tool and application to large-span cable-stayed bridge. J Civ Struct Health Monit 9(1):77–90

Zhang J, Maes K, De Roeck G, Reynders E, Papadimitriou C, Lombaert G (2017) Optimal sensor placement for multi-setup modal analysis of structures. J Sound Vib 401:214–232

Zuo D, Wu L, Smith DA, Morse SM (2017) Experimental and analytical study of galloping of a slender tower. Eng Struct 132:44–60

Acknowledgements

Financial support for this work was provided by the German Research Foundation (DFG), within the Research Training Group 1462. This support is gratefully acknowledged. This research was supported in part through computational resources provided on the VEGAS cluster at the Digital Bauhaus Lab in Bauhaus-Universität Weimar, Germany.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Reichert, I., Olney, P. & Lahmer, T. Combined approach for optimal sensor placement and experimental verification in the context of tower-like structures. J Civil Struct Health Monit 11, 223–234 (2021). https://doi.org/10.1007/s13349-020-00448-7
